💬 Editorial: Precision adjuvant therapy for stage III colon cancer may be enhanced through molecular profiling of ctDNA status and tumor PIK3CA mutation, informing the use of celecoxib or aspirin alongside standard treatment.

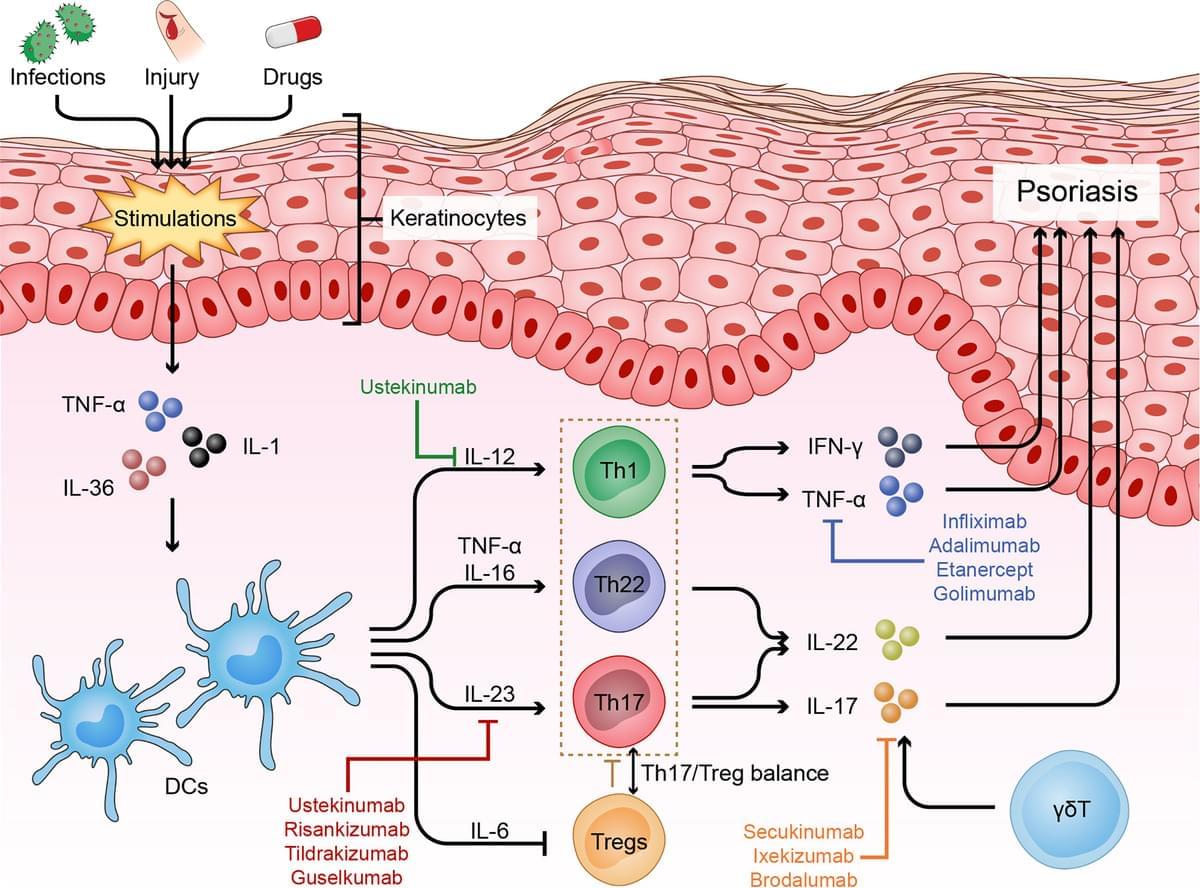

CALGB/SWOG 80702 (Alliance) was a placebo-controlled randomized clinical trial (RCT) testing whether daily celecoxib (400 mg/d vs placebo), added to adjuvant fluorouracil, leucovorin, and oxaliplatin (FOLFOX), would improve disease-free survival (DFS) in patients with resected stage III colon cancer.1 The rationale for the trial was a preponderance of evidence from RCTs and observational studies showing that nonsteroidal anti-inflammatory drugs (NSAIDs), including selective cyclooxygenase 2 (COX-2, or prostaglandin-endoperoxide synthase 2 [PTGS2]) inhibitors such as celecoxib and rofecoxib, reduce the incidence of premalignant colorectal polyps and colorectal cancer (CRC). Although the primary trial results showed no statistically significant improvement in DFS or overall survival (OS) with daily celecoxib,1 they raised the possibility that as-yet unidentified subgroups might benefit significantly. Indeed, Nowak et al2 reported in 2024 that, within the subset of the Alliance trial population with available whole-exome tumor sequencing data, celecoxib had a significant protective effect among patients whose tumors harbored PIK3CA mutations in exon 9 or 20.

The possibility of molecular selection for adjuvant NSAID therapy in CRC, specifically on the basis of PIK3CA mutation, was first raised for aspirin, a nonselective COX inhibitor, in a prospective observational study by Liao and colleagues3 in 2012. This finding for aspirin was later corroborated in a post hoc observational analysis of follow-up data from the VICTOR RCT of daily rofecoxib (25 mg vs placebo),4 which, like the Alliance trial, did not demonstrate a significant protective benefit of rofecoxib among unselected patients.5 Most recently, 2 RCTs of daily low-dose aspirin, ALASSCA6 and SAKK 41/13,7 showed that among patients selected for tumor PIK3CA mutation, aspirin yielded a similar benefit of approximately 50% compared with placebo.