Latest cybersecurity threats, malware campaigns, research findings, and key security developments from this week’s ThreatsDay Bulletin.

A China-linked advanced persistent threat actor tracked as UAT-9244 has been targeting telecommunication service providers in South America since 2024, compromising Windows, Linux, and network-edge devices.

According to Cisco Talos researchers, the adversary is closely associated with the FamousSparrow and Tropic Trooper hacker groups, but is tracked as a separate activity cluster.

This assessment is made with high confidence and is based on similar tooling, tactics, techniques, and procedures (TTPs), and victimology observed in attacks attributed to those threat actors.

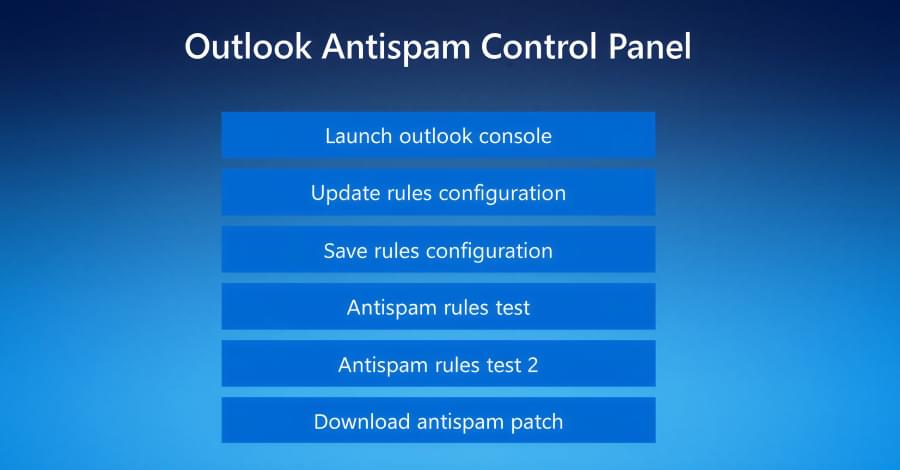

The panel serves as a hub for configuring, tracking, and refining campaigns. It features pre‑built templates, attachment files for common lure formats, domain and hosting configuration, redirect logic, and victim tracking. Operators can also configure how the malicious content is delivered through attachments, as well as keep tabs on valid and invalid sign-in attempts.

The captured information, such as credentials, multi-factor authentication (MFA) codes, and session cookies, can be downloaded directly within the panel or forwarded to Telegram for near‑real‑time monitoring.

“It enabled thousands of cybercriminals to covertly access email and cloud-based service accounts,” Europol said. “At scale, the platform generated tens of millions of phishing emails each month and facilitated unauthorized access to nearly 100,000 organizations globally, including schools, hospitals, and public institutions.”

“The hacktivist threat in the Middle East is highly lopsided, with two groups, Keymous+ and DieNet, driving nearly 70% of all attack activity between February 28 and March 2,” Radware said in a Tuesday report. The first distributed denial-of-service (DDoS) attack was launched by Hider Nex (aka Tunisian Maskers Cyber Force) on February 28, 2026.

According to details shared by Orange Cyberdefense, Hider Nex is a shadowy Tunisian hacktivist group that supports pro-Palestinian causes. It leverages a hack-and-leak strategy combining DDoS attacks with data breaches to leak sensitive data and advance its geopolitical agenda. The group emerged in mid-2025.

In all, 149 hacktivist DDoS claims were recorded against 110 distinct organizations across 16 countries. The attacks were carried out by 12 different groups, with Keymous+, DieNet, and NoName057(16) together accounting for 74.6% of all activity.

Bitwarden announced support for logging into Windows 11 devices using passkeys stored in the manager’s vault, enabling phishing-resistant authentication.

The new feature is available for all plans, including the free tier, and allows logging into Windows by selecting the security key option and scanning a QR code with a mobile device to confirm access to the passkey stored in the Bitwarden encrypted vault.

Bitwarden is an open-source password and secrets manager that can store account passwords, passkeys, API keys, credit card details, identity data, and private notes.

Microsoft has released the KB5075039 Windows Recovery Environment update for Windows 10 to fix a long-standing issue that prevented some users from accessing the Recovery environment.

The Windows Recovery Environment (WinRE) is a minimal troubleshooting environment used to repair or restore the operating system after it fails to start, to diagnose crashes, or to remove malware.

In October 2025, Microsoft confirmed that the KB5066835 Patch Tuesday updates broke USB mouse and keyboard input when using the Windows 11 Recovery Environment, making it difficult for many to use the troubleshooting tool.

Amazon is rolling out a new always-on DDoS protection feature for game servers hosted on Amazon’s GameLift Servers architecture.

Threat hunters have called attention to a new campaign in which bad actors masqueraded as IT support to deliver the Havoc command-and-control (C2) framework as a precursor to data exfiltration or a ransomware attack.

The intrusions, identified by Huntress last month across five partner organizations, involved the threat actors using email spam as a lure, followed by a phone call from an IT desk that activated a layered malware delivery pipeline.

“In one organization, the adversary moved from initial access to nine additional endpoints over the course of eleven hours, deploying a mix of custom Havoc Demon payloads and legitimate RMM tools for persistence, with the speed of lateral movement strongly suggesting the end goal was data exfiltration, ransomware, or both,” researchers Michael Tigges, Anna Pham, and Bryan Masters said.