A new breakthrough in orbitronics reveals that atomic vibrations can control the orbital motion of electrons.

Are you considering using AI to help improve your organization? Here’s how AI is accelerating innovation.

Breakthrough improvements to Microsoft’s glass-based data-storage technology mean ordinary glassware, such as that used in cookware and oven doors, can store terabytes of data, with the information lasting 10,000 years.

The technology, which has been in development under the “Project Silica” banner since 2019, has seen steady improvements, and scientists outlined the latest innovations today (Feb. 18) in the journal Nature.

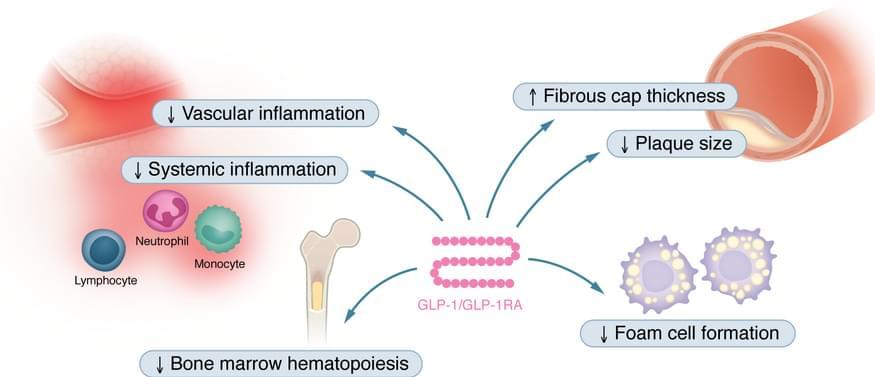

As part of JCI’s Review Series on Clinical Innovation and Scientific Progress in GLP-1 Medicine, Florian Kahles, Andreas L. Birkenfeld, and Nikolaus Marx summarize the effects of GLP-1 and GLP-1RAs on the cardiovascular system, as well as clinical data on GLP-1RAs in individuals with cardiovascular disease or at high risk of it.

¹Department of Internal Medicine I, University Hospital Aachen, RWTH Aachen, Aachen, Germany.

²German Center for Diabetes Research (DZD), Neuherberg, Germany.

³Department of Internal Medicine IV, Diabetology, Endocrinology and Nephrology, Eberhard-Karls University Tübingen, Tübingen, Germany.

Over 50 years ago, the classic Levi’s® Trucker jacket was introduced. But we are not ones to rest on past accomplishments.

Now, the brand is turning to futuristic modes of innovation in manufacturing, pioneering a new approach in denim design.

Fast Company joined Levi’s® Head of Global Product Innovation, Paul Dillinger, at the Autodesk Pier 9 Workshop in San Francisco to witness how Levi’s® has been experimenting with 3D printing, creating digital renderings of the denim jacket that are essentially shells of what the “real” thing could look like.