Physicists in China have uncovered new evidence that chiral phonons and magnons can interact strongly inside magnetic crystals. Using neutron spectroscopy, a team led by Song Bao at Nanjing University mapped magnetic signatures linked to chiral phonons in a ferrimagnetic material, revealing a previously elusive relationship between lattice vibrations and magnetic excitations. Reported in Physical Review Letters, the results could help researchers better understand how heat, sound and spin interact in quantum materials.

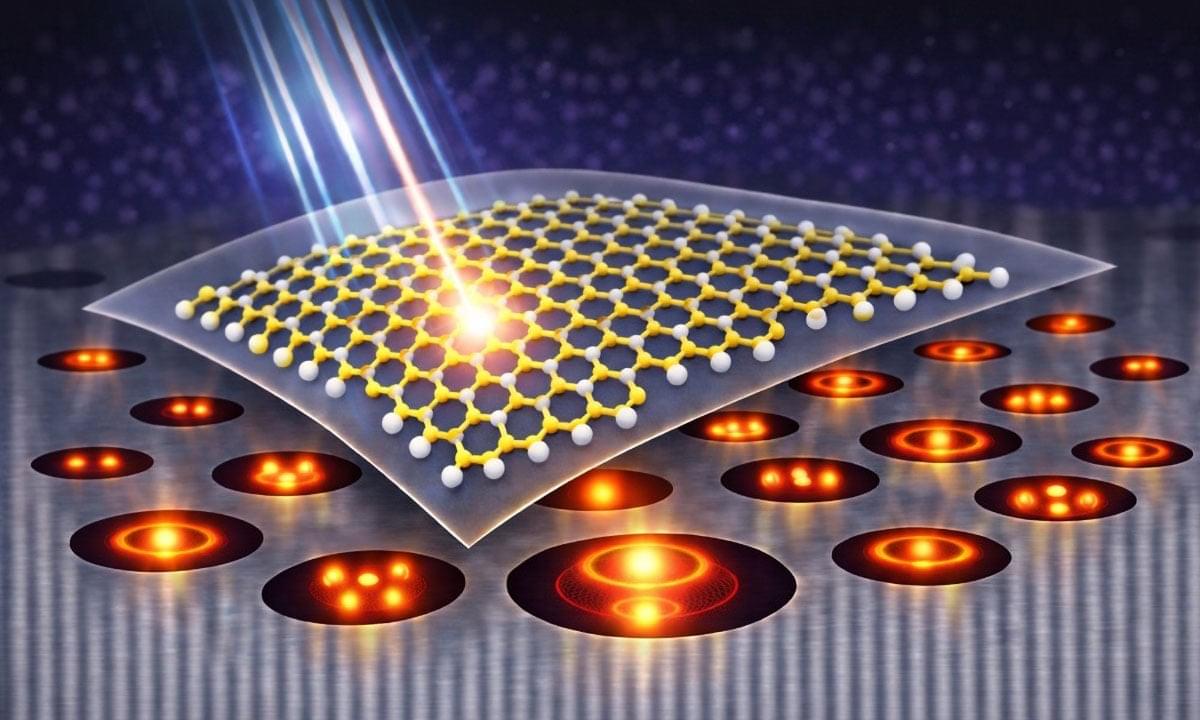

Phonons are quanta of the collective vibrations of atoms in a crystal lattice, and they carry sound and heat through a solid. As quasiparticles, they behave somewhat like particles moving through the material and can interact with other excitations. In some cases, phonons also exhibit chirality, meaning that some property of the excitation differs from that of its mirror image.

For phonons, chirality arises when ions move in circular orbits as the lattice vibrates, rotating in a plane perpendicular to the phonon's direction of travel. This motion gives the phonon both an angular momentum and a tiny magnetic moment. Crucially, the phonon's properties depend on whether the rotation is clockwise or anticlockwise.
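To make the link between circular ionic motion, angular momentum and handedness concrete, here is a minimal classical sketch. It is not the method used in the study. It treats a single ion as a point charge circling in the x-y plane, with the phonon assumed to travel along z. It uses the textbook relations L_z = m(x·v_y − y·v_x) and μ_z = qL_z/(2m). The function names, parameters and unit-free numbers are illustrative assumptions, and the model ignores the quantum and material-specific effects a real crystal would need.

```python
import math

def orbit_angular_momentum(mass: float, amplitude: float, omega: float,
                           handedness: int, t: float) -> float:
    """Classical L_z = m (x*v_y - y*v_x) of an ion on a circular orbit.

    Hypothetical toy model: the ion circles in the x-y plane, perpendicular to a
    phonon assumed to travel along z. handedness=+1 is anticlockwise viewed from
    +z; handedness=-1 is clockwise.
    """
    if handedness not in (1, -1):
        raise ValueError("handedness must be +1 or -1")
    # Position and velocity of the circular displacement at time t
    x = amplitude * math.cos(omega * t)
    y = handedness * amplitude * math.sin(omega * t)
    vx = -amplitude * omega * math.sin(omega * t)
    vy = handedness * amplitude * omega * math.cos(omega * t)
    return mass * (x * vy - y * vx)

def orbital_magnetic_moment(charge: float, mass: float, l_z: float) -> float:
    """Classical orbital moment mu_z = q * L_z / (2 m) of a circulating point charge."""
    return charge * l_z / (2 * mass)

# Compare the two senses of rotation (arbitrary units)
for h in (+1, -1):
    l_z = orbit_angular_momentum(mass=1.0, amplitude=2.0, omega=3.0,
                                 handedness=h, t=0.7)
    mu_z = orbital_magnetic_moment(charge=1.0, mass=1.0, l_z=l_z)
    print(f"handedness {h:+d}: L_z = {l_z:.3f}, mu_z = {mu_z:.3f}")
```

Working through the algebra, L_z = h·m·A²·ω for every t, because cos² + sin² = 1. The size of the angular momentum, and of the associated moment, is therefore fixed by the orbit, while its sign flips with the sense of rotation. This is the classical picture behind the statement that clockwise and anticlockwise chiral phonons behave differently.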