Cybersecurity researchers have discovered a fresh set of malicious packages across the npm and Python Package Index (PyPI) repositories that are linked to a fake recruitment-themed campaign orchestrated by the North Korea-linked Lazarus Group.

The coordinated campaign has been codenamed graphalgo in reference to the first package published in the npm registry. It is assessed to have been active since May 2025.

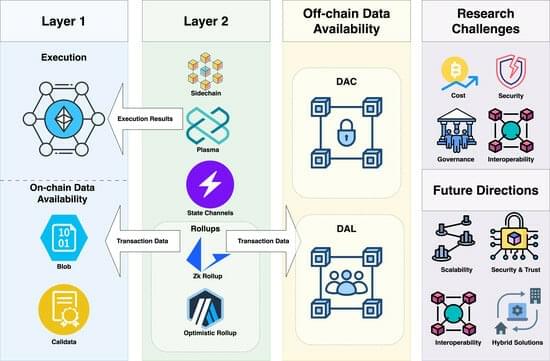

“Developers are approached via social platforms like LinkedIn and Facebook, or through job offerings on forums like Reddit,” ReversingLabs researcher Karlo Zanki said in a report. “The campaign includes a well-orchestrated story around a company involved in blockchain and cryptocurrency exchanges.”