“CO2 capture with post-modified nitrile- and styrene-butadiene-styrene rubbers”

Chem.

Microsoft is rolling out new Windows 11 Insider Preview builds that improve security and performance during batch file or CMD script execution.

As Microsoft explained today, IT administrators can now enable a more secure processing mode that prevents batch files from being modified while they run by adding the LockBatchFilesInUse registry value under HKEY_LOCAL_MACHINE\Software\Microsoft\Command Processor.

Policy authors can also enable this mode using the LockBatchFilesWhenInUse application manifest control.
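As a rough illustration of the registry change described above, the following Python sketch only builds the `reg add` command line an administrator might run. The value name and key path come from the text; the `REG_DWORD` type and the data `1` for "enabled" are assumptions not confirmed by the source, and `lock_batch_files_command` is a hypothetical helper name.

```python
def lock_batch_files_command(enable: bool = True) -> list[str]:
    """Build a `reg add` command for the LockBatchFilesInUse value.

    ASSUMPTION: the value is a REG_DWORD and 1 means "enabled".
    The source names only the value and the key, not its type or data.
    """
    key = r"HKLM\Software\Microsoft\Command Processor"  # HKEY_LOCAL_MACHINE
    return [
        "reg", "add", key,
        "/v", "LockBatchFilesInUse",   # value name from the announcement
        "/t", "REG_DWORD",             # assumed type
        "/d", "1" if enable else "0",  # assumed data
        "/f",                          # overwrite without prompting
    ]


if __name__ == "__main__":
    # Print the command instead of running it; writing to HKLM needs elevation.
    print(" ".join(lock_batch_files_command()))
```

On a test machine the resulting list could be passed to `subprocess.run(..., check=True)` from an elevated prompt, once the actual type and data have been checked against Microsoft's documentation.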

Nishada Ramphal, Ari Waisman et al. (Johannes Gutenberg-Universität Mainz) reveal that NIK drives neuroantigen-specific T cell priming by regulating antigen presentation and IL-23 production, identifying NIK as a key orchestrator of myeloid-driven CNS autoimmunity.

Neuroinflammation.

Please see my latest Forbes article, and have a great weekend! By Chuck Brooks.

#artificialIntelligence #ai #future #tech Forbes

AI is redefining power, productivity, security, and sovereignty. Dual-use, convergent, and autonomous AI is the 21st-century force multiplier. Not only is technology advancing, but civilization is about to change.

The term “artificial intelligence” was coined for the 1956 Dartmouth Conference. Alan Turing and other pioneers shaped the early conceptualization of AI. The first systems relied on symbolic logic and deterministic rules. Some expert systems performed well but struggled in dynamic, uncertain environments. Brittleness, limited computational capacity, and limited access to data led to the “AI winters.”

A previously undocumented threat activity cluster has been attributed to an ongoing malicious campaign targeting education and healthcare sectors in the U.S. since at least December 2025.

The campaign is being tracked by Cisco Talos under the moniker UAT-10027. The end goal of the attacks is to deliver a never-before-seen backdoor codenamed Dohdoor.

“Dohdoor utilizes the DNS-over-HTTPS (DoH) technique for command-and-control (C2) communications and has the ability to download and execute other payload binaries reflectively,” security researchers Alex Karkins and Chetan Raghuprasad said in a technical report shared with The Hacker News.
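For readers unfamiliar with DNS-over-HTTPS, the sketch below shows what a generic DoH lookup looks like on the wire, following the GET form of RFC 8484. It is not Dohdoor's implementation, which the quoted report does not detail here. The resolver URL `https://doh.example/dns-query` and the function names are hypothetical, and nothing is sent over the network.

```python
import base64
import struct


def build_dns_query(name: str, qtype: int = 1) -> bytes:
    """Encode a single-question DNS query in wire format (qtype 1 = A record)."""
    # Header: ID=0 (RFC 8484 recommends 0), flags=0x0100 (recursion desired),
    # QDCOUNT=1, ANCOUNT=NSCOUNT=ARCOUNT=0.
    header = struct.pack("!HHHHHH", 0, 0x0100, 1, 0, 0, 0)
    # QNAME: each label prefixed by its length, terminated by a zero byte.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    # QTYPE followed by QCLASS=1 (IN).
    return header + qname + struct.pack("!HH", qtype, 1)


def doh_get_url(resolver: str, name: str) -> str:
    """Build the RFC 8484 GET URL: base64url of the query, padding stripped."""
    wire = build_dns_query(name)
    encoded = base64.urlsafe_b64encode(wire).rstrip(b"=").decode("ascii")
    return f"{resolver}?dns={encoded}"


if __name__ == "__main__":
    # Hypothetical resolver endpoint, for illustration only.
    print(doh_get_url("https://doh.example/dns-query", "www.example.com"))
```

Because the query travels as an ordinary HTTPS request, it does not appear as classic DNS traffic on port 53, which is one plausible reason the technique is attractive for C2 channels.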

Samantha K. Dziurdzik, Vaishnavi Sridhar, Elizabeth Conibear et al. (University of British Columbia) identify a conserved adaptor that recruits BLTP2-like proteins to ER–plasma membrane contacts by binding helical projections on their lipid transfer channel to maintain lipid homeostasis.

MembraneContactSites.

Indrajyoti Indra, Sergey M. Troyanovsky et al. (Northwestern University Feinberg School of Medicine) show that two δ-catenins, p120 and plakophilin-4, promote distinct cadherin clustering modes, α-catenin–dependent and α-catenin–independent, respectively, thereby generating different types of adherens junctions.

Researchers at IMDEA Networks Institute, together with European partners, have found that tire pressure sensors in modern cars can unintentionally expose drivers to tracking. Over a ten-week study, they collected signals from more than 20,000 vehicles, revealing a hidden privacy risk and highlighting the need for stronger security measures in future vehicle sensor systems.

Most modern cars are equipped with a Tire Pressure Monitoring System (TPMS), mandatory in many countries since the late 2000s for its contribution to road safety. The system uses a small sensor in each wheel to monitor tire pressure and sends wireless signals to the car’s computer, which alerts the driver if a tire is underinflated.

However, the researchers found that these tire sensors also send a unique ID number in clear, unencrypted wireless signals, meaning that anyone nearby with a simple radio receiver can capture the signal and recognize the same car again later. Most vehicle tracking today uses cameras that need clear visibility and line-of-sight to a car. TPMS tracking is different: tire sensors automatically send radio signals that pass through walls and vehicles, allowing small hidden wireless receivers to capture them without being seen.
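To make the privacy risk concrete, here is a minimal sketch of why a static, unencrypted ID enables re-identification: grouping captured messages by ID is enough to link sightings of the same sensor. The sensor IDs, timestamps, and receiver names below are invented for illustration and are not data from the study; `group_sightings` is a hypothetical helper, not the researchers' method.

```python
from collections import defaultdict


def group_sightings(sightings):
    """Link sightings that share a sensor ID.

    `sightings` is an iterable of (sensor_id, timestamp, receiver) tuples.
    Returns only IDs seen more than once, with observations sorted by time.
    """
    by_id = defaultdict(list)
    for sensor_id, timestamp, receiver in sightings:
        by_id[sensor_id].append((timestamp, receiver))
    return {sid: sorted(obs) for sid, obs in by_id.items() if len(obs) > 1}


# Invented example data: (sensor ID, Unix timestamp, receiver location).
SIGHTINGS = [
    ("0x1A2B3C4D", 1_700_000_000, "parking-garage"),
    ("0x99887766", 1_700_000_500, "parking-garage"),
    ("0x1A2B3C4D", 1_700_086_400, "office-entrance"),
]

if __name__ == "__main__":
    for sensor_id, observations in group_sightings(SIGHTINGS).items():
        print(sensor_id, observations)
```

No decryption or line-of-sight is involved in this sketch; the repeated ID alone links the two observations. Rotating or encrypting identifiers is one commonly discussed way to break that link.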

Accessible minimal requirements for reproducible light microscopy. This viewpoint from Paula Montero Llopis, Chloë van Oostende-Triplet, the QUAREP-LiMi consortium and colleagues presents a community-endorsed checklist defining minimal light microscopy metadata to improve rigor, reproducibility, and transparency in research.

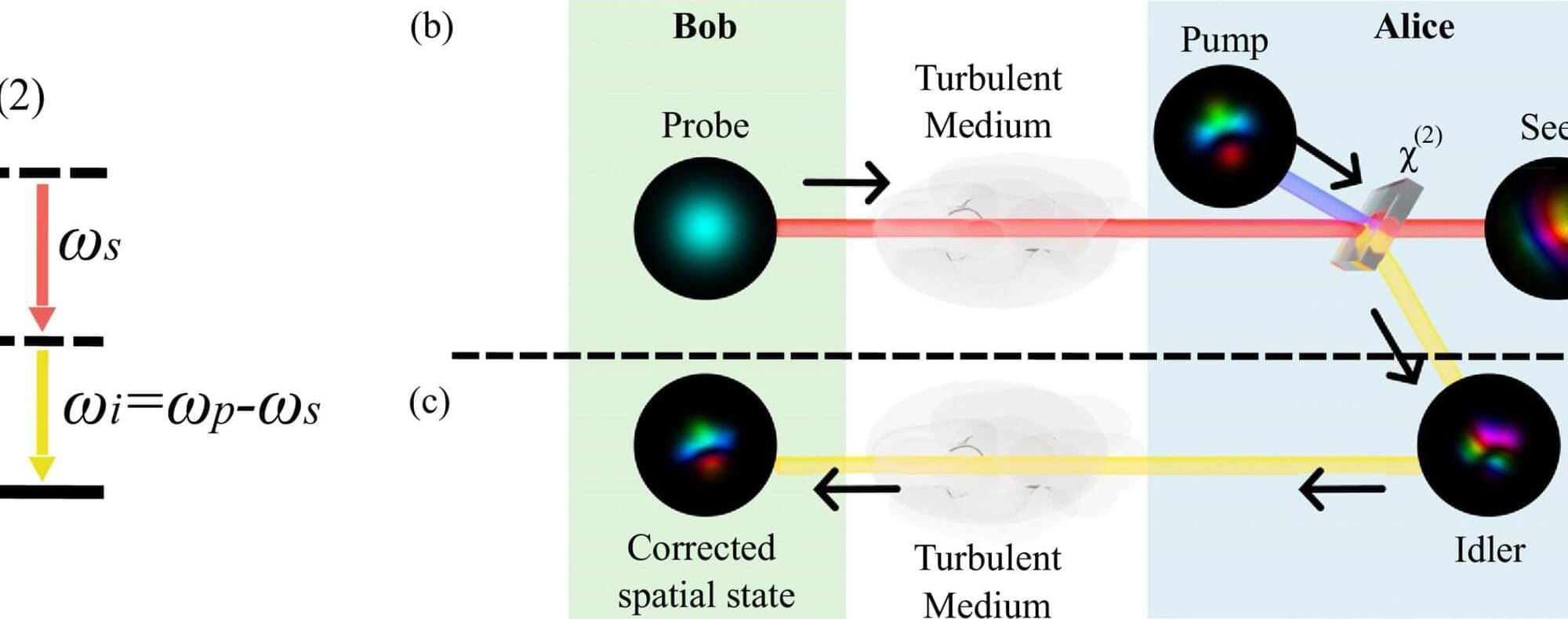

A University of Ottawa team has developed a new way to protect free-space quantum key distribution (QKD) from atmospheric turbulence, one of the main causes of distortion and errors when sending quantum information through air. Their paper, “All-optical turbulence mitigation for free-space quantum key distribution using stimulated parametric down-conversion,” appears in the journal Optica.

Instead of relying on complex, expensive digital adaptive optics, the researchers use a nonlinear optical process called “stimulated parametric down-conversion (StimPDC).” The technique leverages StimPDC’s phase-conjugation property to correct spatial-mode distortions dynamically without requiring prior knowledge of the turbulent channel.

“We found the idea of using a fundamental optical process to correct the effects of turbulence in real time to be both innovative and largely unexplored,” said Aarón Cardoso, lead author and Quantum Optics Student Researcher at uOttawa. “Our results show we can reduce quantum error rates below the security threshold even under strong turbulence.”