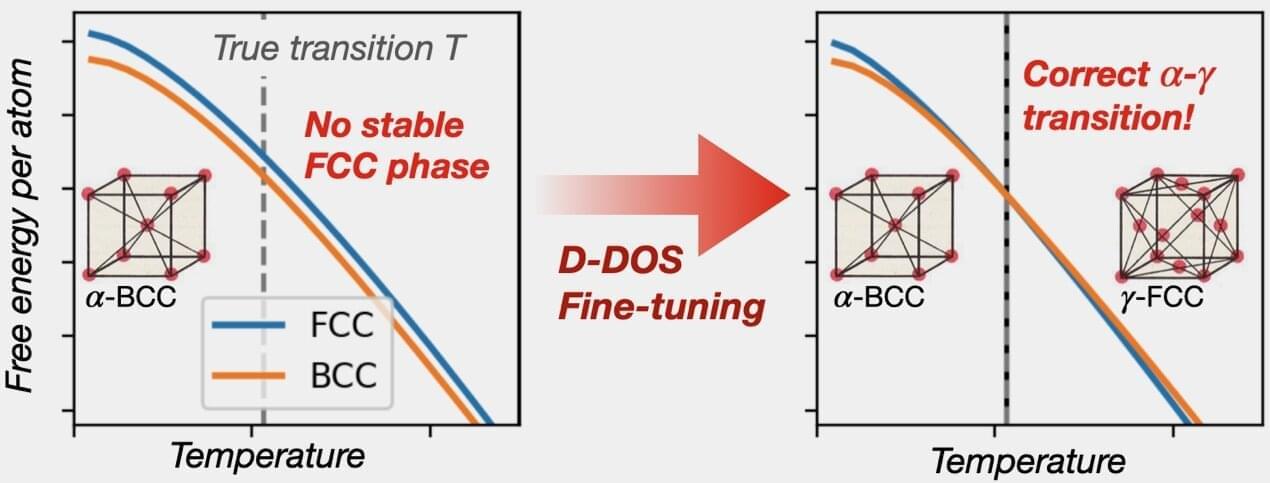

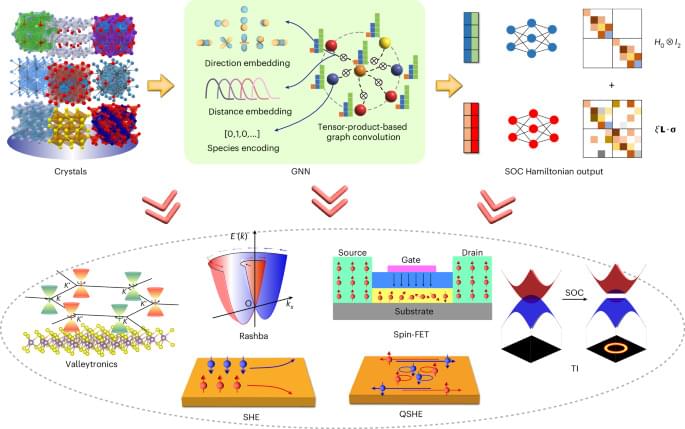

A new computational method allows modern atomic models to learn from experimental thermodynamic data, according to a University of Michigan Engineering and Université Paris-Saclay study published in Nature Communications. Leveraging a machine learning technique called score matching, the method expresses the thermodynamic free energy of atomic systems as a function of the underlying atomic interaction model, unlike standard schemes where the interaction model is fixed.

Because it returns thermodynamic predictions as functions rather than static numbers, the method can readily quantify uncertainty and help accelerate computational materials discovery by opening up new inverse design capabilities. It is also more than 10 times more efficient than previous approaches. The method is called "descriptor density of states," abbreviated D-DOS.
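To show what it means for a free energy to be a *function* of the interaction model rather than a single number, here is a toy sketch. It is not the study's D-DOS estimator, which the article says relies on score matching, and it is not reproduced here. The sketch assumes the following:

* The energy is linear in one model parameter `theta` times one scalar descriptor `d`.
* The density of states over `d` is a small discrete table of log-degeneracies.
* Energies are measured in units of `kT`.

The name `free_energy_function` and the two-state example are hypothetical and used only for illustration.

```python
import math
from typing import Callable, Sequence


def free_energy_function(descriptors: Sequence[float],
                         log_degeneracy: Sequence[float],
                         kT: float) -> Callable[[float], float]:
    """Return F(theta) for a toy model whose energy is E = theta * d.

    Hypothetical illustration: the descriptor density of states (values d_i
    with log-degeneracies ln g_i) is supplied once, and the returned function
    evaluates F = -kT * ln(sum_i g_i * exp(-theta * d_i / kT)) for any theta
    without any new sampling.
    """
    pairs = list(zip(descriptors, log_degeneracy))

    def F(theta: float) -> float:
        # Log of each Boltzmann-weighted term: ln g_i - theta * d_i / kT
        exps = [lg - theta * d / kT for d, lg in pairs]
        m = max(exps)
        # Log-sum-exp keeps the partition function numerically stable
        log_Z = m + math.log(sum(math.exp(e - m) for e in exps))
        return -kT * log_Z

    return F


# Toy two-state system: descriptor values 0 and 1, each non-degenerate.
F = free_energy_function([0.0, 1.0], [0.0, 0.0], kT=1.0)
for theta in (0.0, 1.0, 10.0):
    print(theta, F(theta))
```

In this toy, changing `theta` (a stand-in for changing the interaction model) only re-weights a density of states that has already been tabulated. That cheap re-evaluation is what makes it conceivable to tune a model parameter until a predicted free energy matches a measured one.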

“The D-DOS method provides a two-way connection between the latest generation of atomic simulations and the classical resource of phase diagrams, exposing these datasets to machine learning-driven computer models,” said Thomas Swinburne, an assistant professor of mechanical engineering at U-M and co-corresponding author of the study.