An international team of scientists from IBM, The University of Manchester, Oxford University, ETH Zurich, EPFL and the University of Regensburg has created and characterized a molecule unlike any previously known: one whose electrons travel through its structure in a corkscrew-like pattern that fundamentally alters its chemical behavior. The work appears in Science.

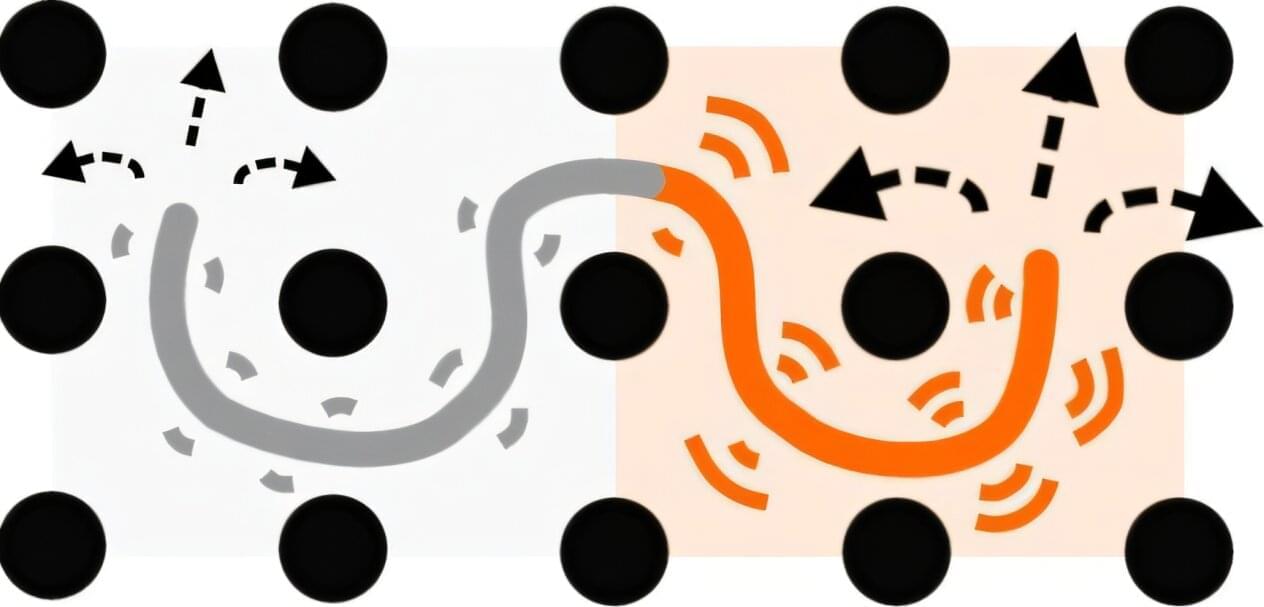

This is the first experimental observation of a half-Möbius electronic topology in a single molecule. To the scientists’ knowledge, a molecule with such topology has never before been synthesized, observed, or even formally predicted.

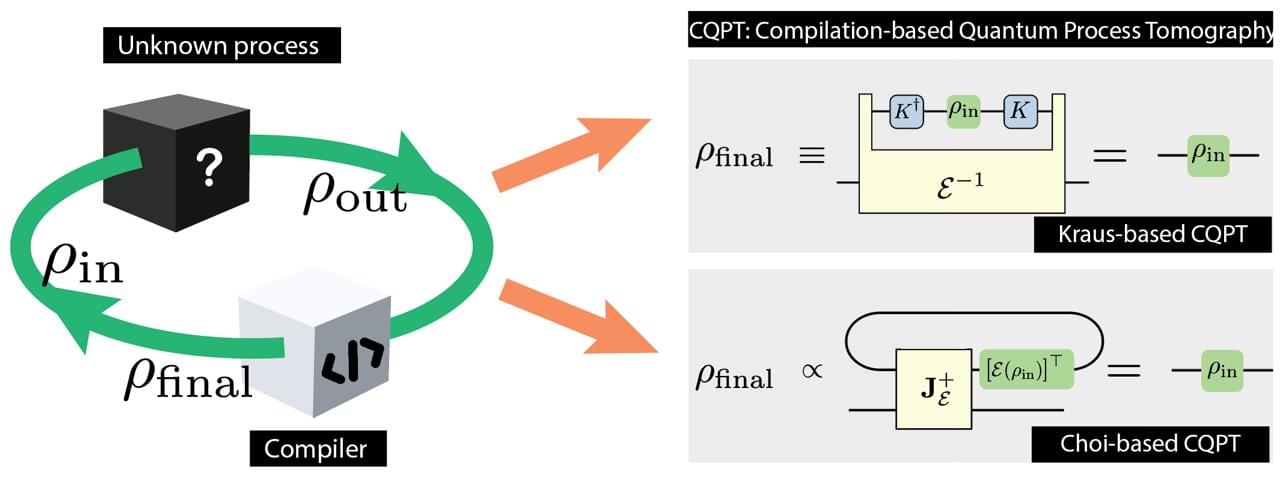

Understanding this molecule’s behavior at the electronic-structure level required something equally fundamental: a high-fidelity quantum computing simulation. The discovery advances science on two fronts. For chemistry, it demonstrates that electronic topology, the property governing how electrons move through a molecule, can be deliberately engineered, not merely found in nature.