Virtual reality (VR) and augmented reality (AR) are technologies that allow users to immerse themselves in digital worlds or enhance their surroundings with computer-generated filters or images, respectively. Both technologies are now used worldwide, whether to experience video games and media content in more engaging ways or to improve training and assist professionals in their daily tasks.

To date, VR and AR have focused primarily on what users see and hear, improving the quality of digital experiences from a visual and auditory standpoint. The sense of touch, on the other hand, has been largely overlooked.

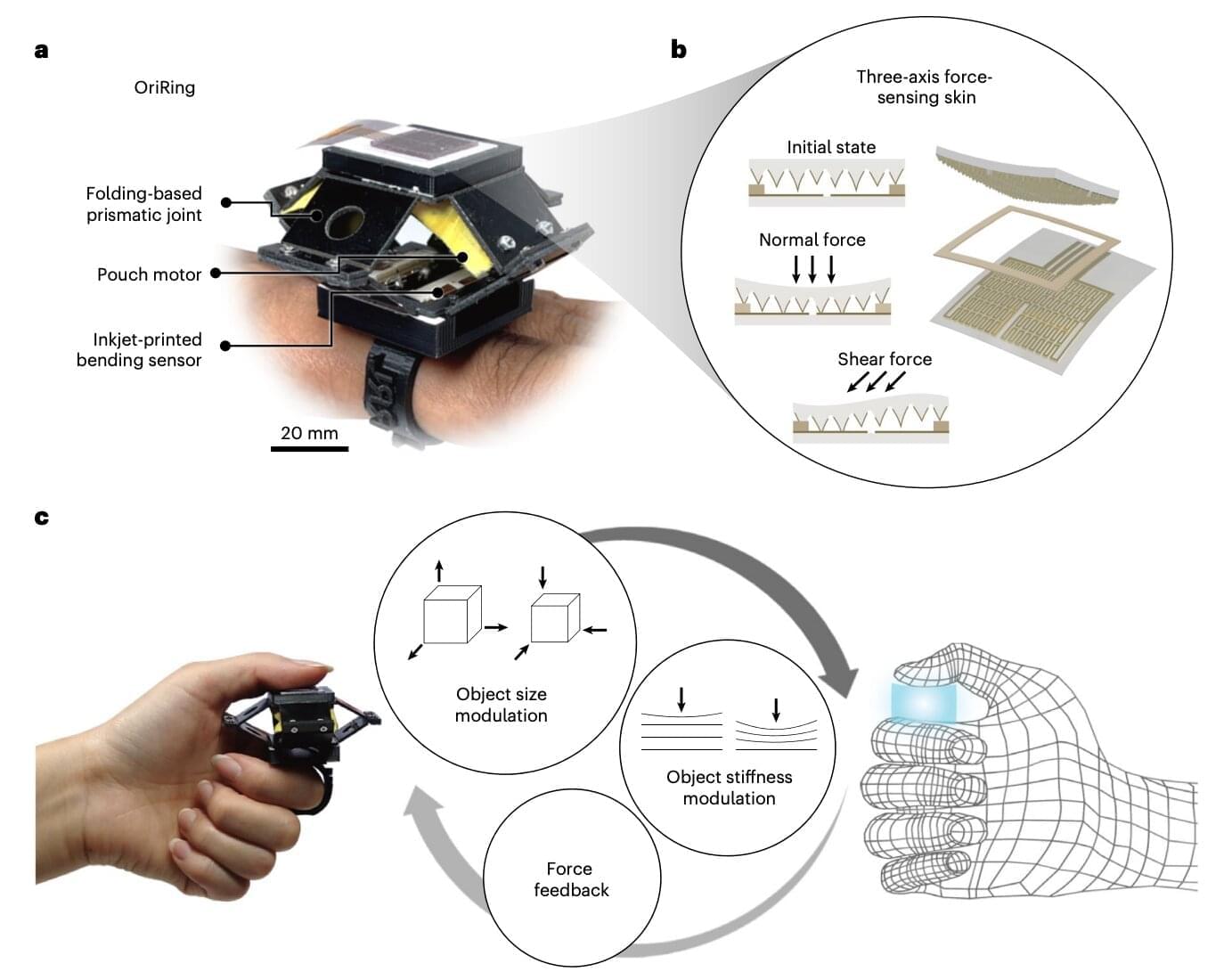

Researchers at Sungkyunkwan University, École Polytechnique Fédérale de Lausanne and Istanbul Technical University recently developed a new wearable device that could also allow users to realistically “feel” tactile sensations aligned with what they are experiencing in a virtual world. This device, introduced in a paper published in Nature Electronics, is an origami-inspired ring that measures forces on a user’s skin and pushes back on the finger to produce specific sensations.