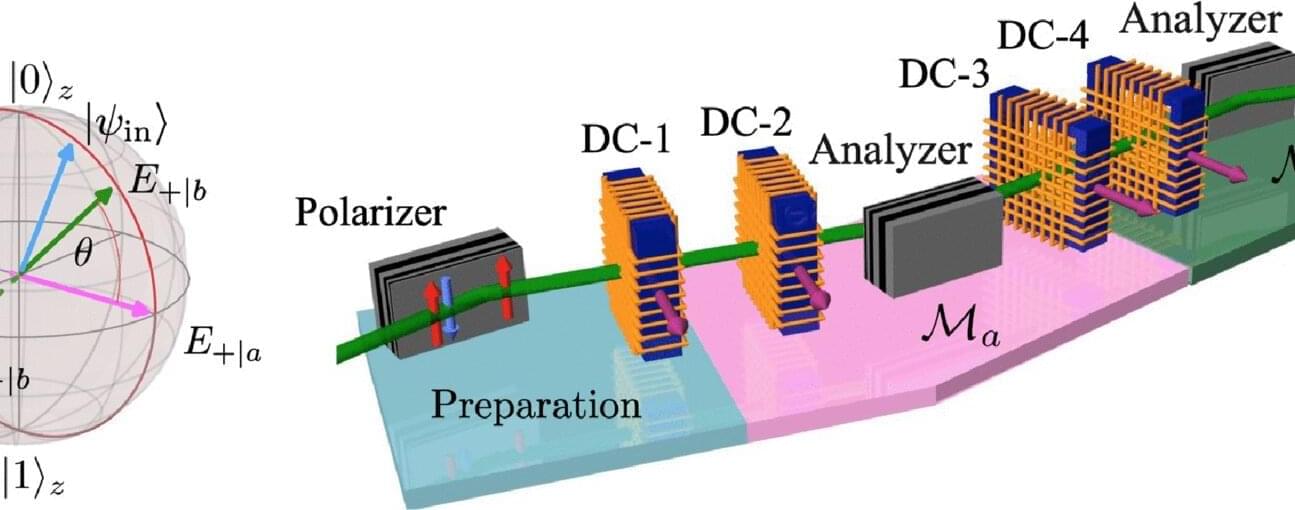

Researchers at the University of Innsbruck, together with partners from Sydney and Waterloo, have presented a new diagnostic method for quantum computers. The method makes errors in individual quantum bits visible while a logical computation is running and evaluates their impact. It was demonstrated on an ion-trap quantum processor in Innsbruck and can be used to pinpoint critical error sources, a key step toward more robust, fault-tolerant quantum processors.

In Physical Review X, the researchers present a scalable method for reliably characterizing logical quantum operations at the level of the underlying physical quantum bits. The method, called cycle error reconstruction, identifies which physical errors influence the performance of logically encoded gates.

“With cycle error reconstruction, we can quantitatively capture the error structure and clearly distinguish between correctable and uncorrectable contributions,” says first author Robert Freund from the Department of Experimental Physics.
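The distinction between correctable and uncorrectable contributions can be illustrated with a toy model. The Python sketch below is not the cycle error reconstruction protocol, and it does not use the code, decoder or data from the study. It assumes a three-qubit bit-flip repetition code, a minimum-weight lookup decoder, and made-up probabilities for each pattern of bit flips (all hypothetical). Given such a per-error breakdown, it adds up the probability of errors the decoder undoes (correctable) separately from those it turns into a logical flip (uncorrectable).

```python
from itertools import product

# Hypothetical error model for a toy 3-qubit bit-flip repetition code.
# Key: X-flip pattern on qubits (q0, q1, q2); value: assumed probability.
# These numbers are illustrative only, not measured data.
EXAMPLE_RATES = {
    flips: {1: 0.01, 2: 0.001, 3: 0.0001}[sum(flips)]
    for flips in product((0, 1), repeat=3)
    if any(flips)  # skip the no-error pattern
}

# Minimum-weight lookup decoder: each syndrome maps to the single
# bit flip that most plausibly explains it.
DECODER = {
    (0, 0): (0, 0, 0),
    (1, 0): (1, 0, 0),
    (1, 1): (0, 1, 0),
    (0, 1): (0, 0, 1),
}


def syndrome(flips):
    """Outcomes of the parity checks Z0Z1 and Z1Z2 for a flip pattern."""
    return (flips[0] ^ flips[1], flips[1] ^ flips[2])


def is_correctable(flips):
    """True if applying the decoder's correction restores the code state."""
    correction = DECODER[syndrome(flips)]
    residual = tuple(f ^ c for f, c in zip(flips, correction))
    # The residual is either no error or the logical flip X0X1X2.
    return residual == (0, 0, 0)


def split_error_budget(error_probs):
    """Split total error probability into correctable and uncorrectable parts."""
    correctable = 0.0
    uncorrectable = 0.0
    for flips, p in error_probs.items():
        if not any(flips):
            continue
        if is_correctable(flips):
            correctable += p
        else:
            uncorrectable += p
    return correctable, uncorrectable


if __name__ == "__main__":
    ok, bad = split_error_budget(EXAMPLE_RATES)
    print(f"correctable: {ok:.4f}, uncorrectable: {bad:.4f}")
```

With these illustrative rates, the three single-qubit flips form the correctable part (about 0.03), while the two- and three-qubit flips make up the uncorrectable part (about 0.0031). In a real experiment, the per-error probabilities would come from a characterization procedure rather than being assumed.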