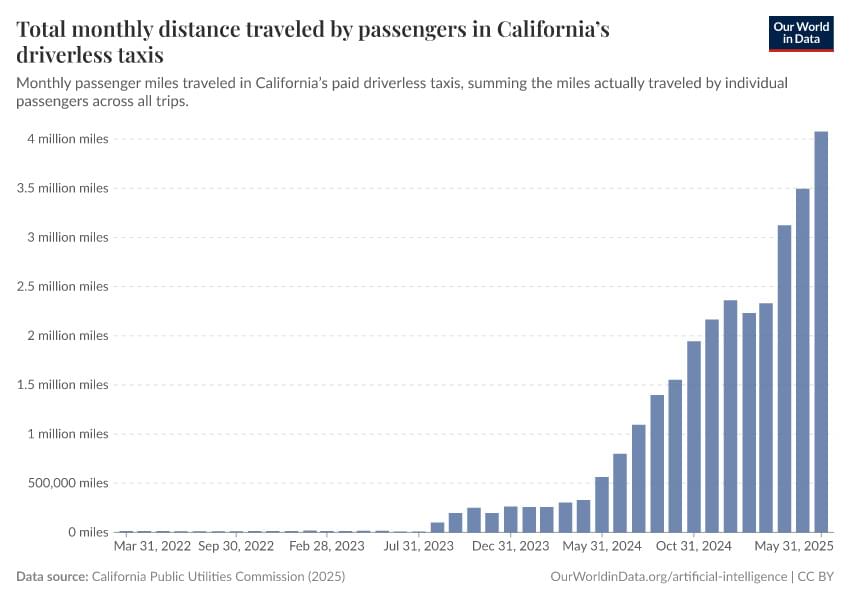

One chart defines the next decade of innovation and jobs.

Gemini 2.5 Deep Think achieves breakthrough performance at the world’s most prestigious computer programming competition, demonstrating a profound leap in abstract problem solving.

An advanced version of Gemini 2.5 Deep Think has achieved gold-medal level performance at the 2025 International Collegiate Programming Contest (ICPC) World Finals.

This milestone builds directly on Gemini 2.5 Deep Think’s gold-medal-level performance at the International Mathematical Olympiad (IMO) just two months ago. Innovations from these efforts will continue to be integrated into future versions of Gemini Deep Think, expanding the frontier of advanced AI capabilities accessible to students and researchers.

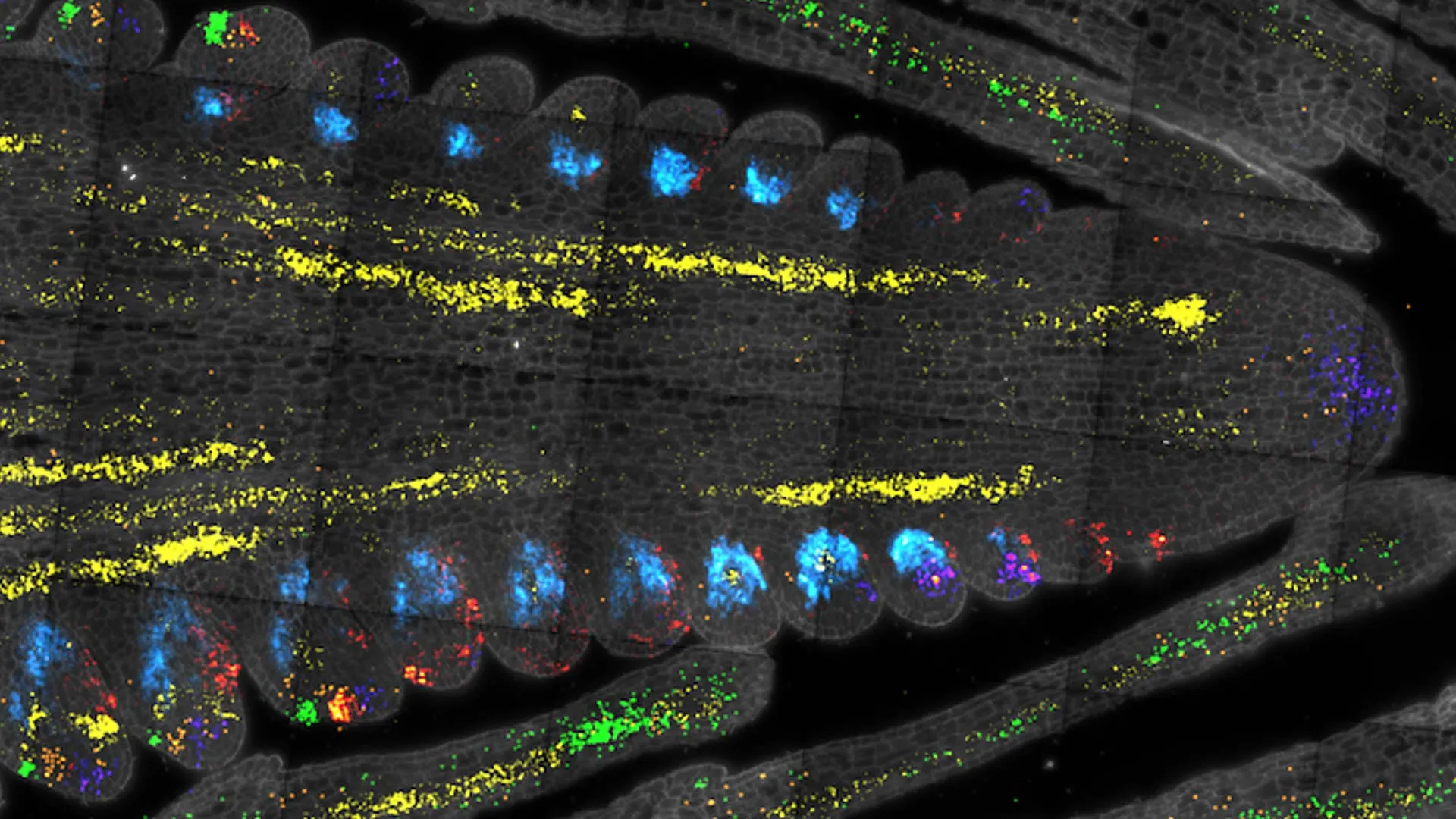

Scientists at Cold Spring Harbor Laboratory have cracked open the secrets of plant stem cells, mapping key genetic regulators in maize and Arabidopsis. By using single-cell RNA sequencing, they created a gene expression atlas that identifies rare stem cell regulators, links them to crop size and productivity, and offers a new roadmap for breeding resilient, high-yield plants.

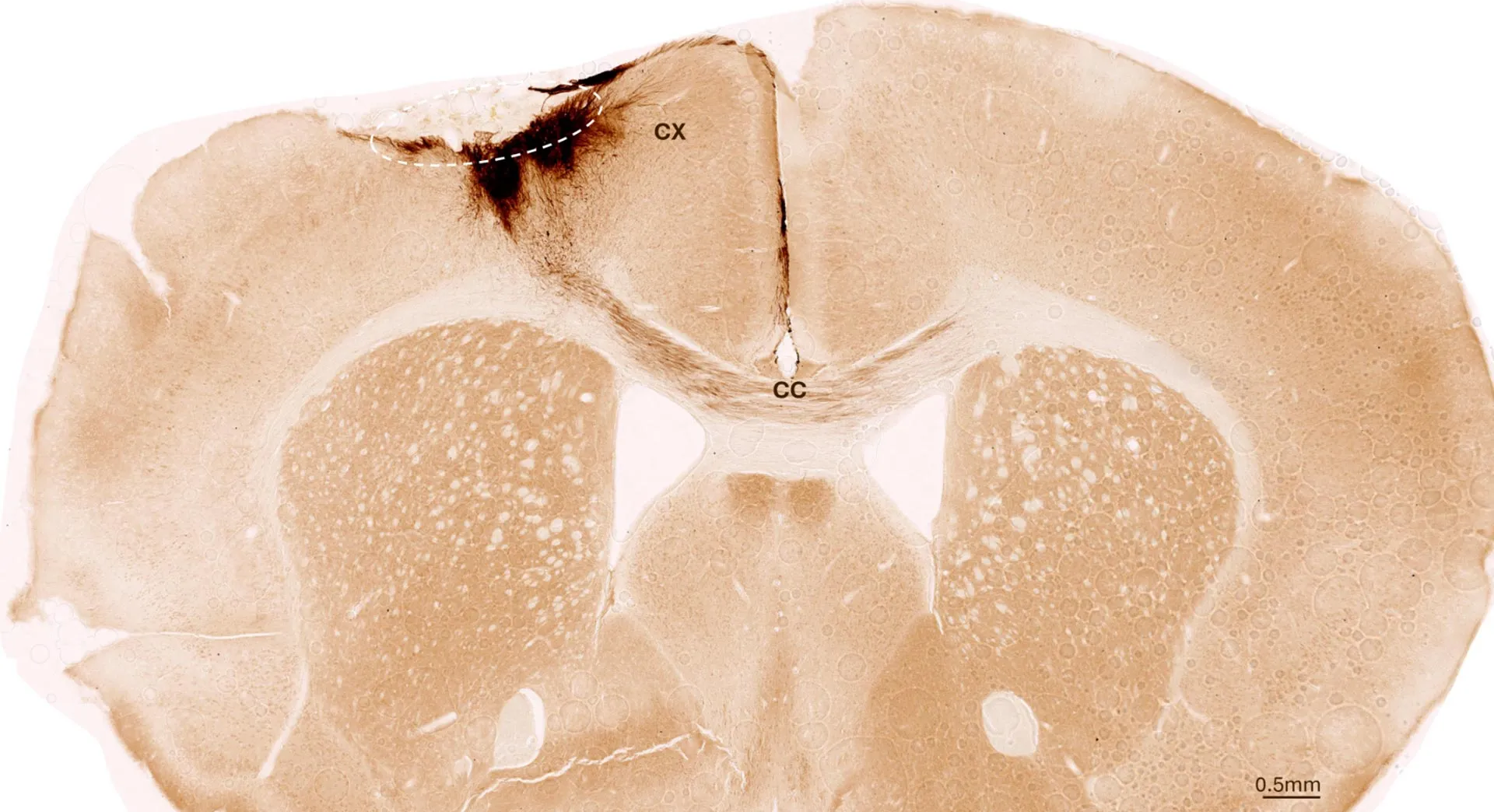

Scientists in Zurich have shown that stem cell transplants can reverse stroke damage by regenerating neurons, restoring motor functions, and even repairing blood vessels. The breakthrough not only healed mice with stroke-related impairments but also suggested that treatments could soon be adapted for humans, marking a hopeful step toward tackling one of the world’s most devastating conditions.

Imagine a battery that you never have to recharge. We are not talking about a power bank, or a battery that lasts for days between charges, but something capable of producing electricity for the next 5,700 years… We know it is an exaggeration (and that nobody will be around to check whether it is true), but researchers are developing a carbon-14 diamond battery that could be the solution for all those coworkers who arrive at the office with their phones at 30%.

The project comes from researchers at the University of Bristol together with the California startup NDB Inc. The concept is simple (and a dream for some), yet it is huge when you stop to think about it: take nuclear waste, encapsulate it in synthetic, lab-grown diamond, and turn it into a safe, stable and practically everlasting source of energy.
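The 5,700-year figure lines up with the half-life of carbon-14 (commonly quoted as about 5,730 years): the point at which the battery's output would have fallen to half, not stopped. As a rough sketch, assuming electrical output $P(t)$ scales directly with the carbon-14 decay rate and ignoring any degradation of the diamond converter (both simplifications of ours), with $P_0$ the initial output:

$$
P(t) = P_0 \cdot 2^{-t/T_{1/2}}, \qquad T_{1/2} \approx 5{,}730 \text{ years}
$$

$$
P(100) = P_0 \cdot 2^{-100/5730} \approx 0.988\,P_0, \qquad P(5{,}730) = \tfrac{1}{2}P_0, \qquad P(11{,}460) = \tfrac{1}{4}P_0
$$

In other words, over a human lifetime the output would barely change, which is where the "never recharge" idea comes from.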

Australian researchers from Professor Sharon Lewin’s laboratory at the Peter Doherty Institute for Infection and Immunity (Doherty Institute) have made a major breakthrough in HIV research by repurposing the same mRNA delivery system used in COVID-19 vaccines, not to prevent infection, but as a potential strategy to find a cure.

Nearly 40 million people live with HIV worldwide. While antiretroviral therapy can suppress the virus to undetectable levels, it cannot eliminate it. HIV has a unique ability to hide in a type of white blood cell, the resting CD4+ T cell, ready to re-emerge if treatment is stopped. This HIV “reservoir” has long been one of the greatest challenges in the search for a cure.

Using the same technology behind mRNA COVID-19 vaccines, the team discovered a new way to deliver mRNA to the elusive HIV reservoir and coax HIV out of hiding. In a laboratory-based study published in Nature Communications, the researchers packaged mRNA inside an entirely novel type of microscopic, fat-like bubble, known as a lipid nanoparticle, and successfully transported it into HIV-infected cells, where it prompted the cells to expose the dormant virus.

Japanese startup KG Motors is building a stylish, single-seat electric car for just $7,000 and has pre-sold 3,300 units, which it plans to deliver to customers by March 2027.

That’s more than the 2,000 EVs Toyota sold across Japan in all of 2024, according to Bloomberg. Called the “mibot,” KG’s car has a limited 62-mile (100 km) range and a 37 mph (60 km/h) top speed.

Could the single-seat ‘mibot’ ignite an all-electric revolution in hybrid-heavy Japan, or even inspire more openness to small, affordable vehicles in the US?