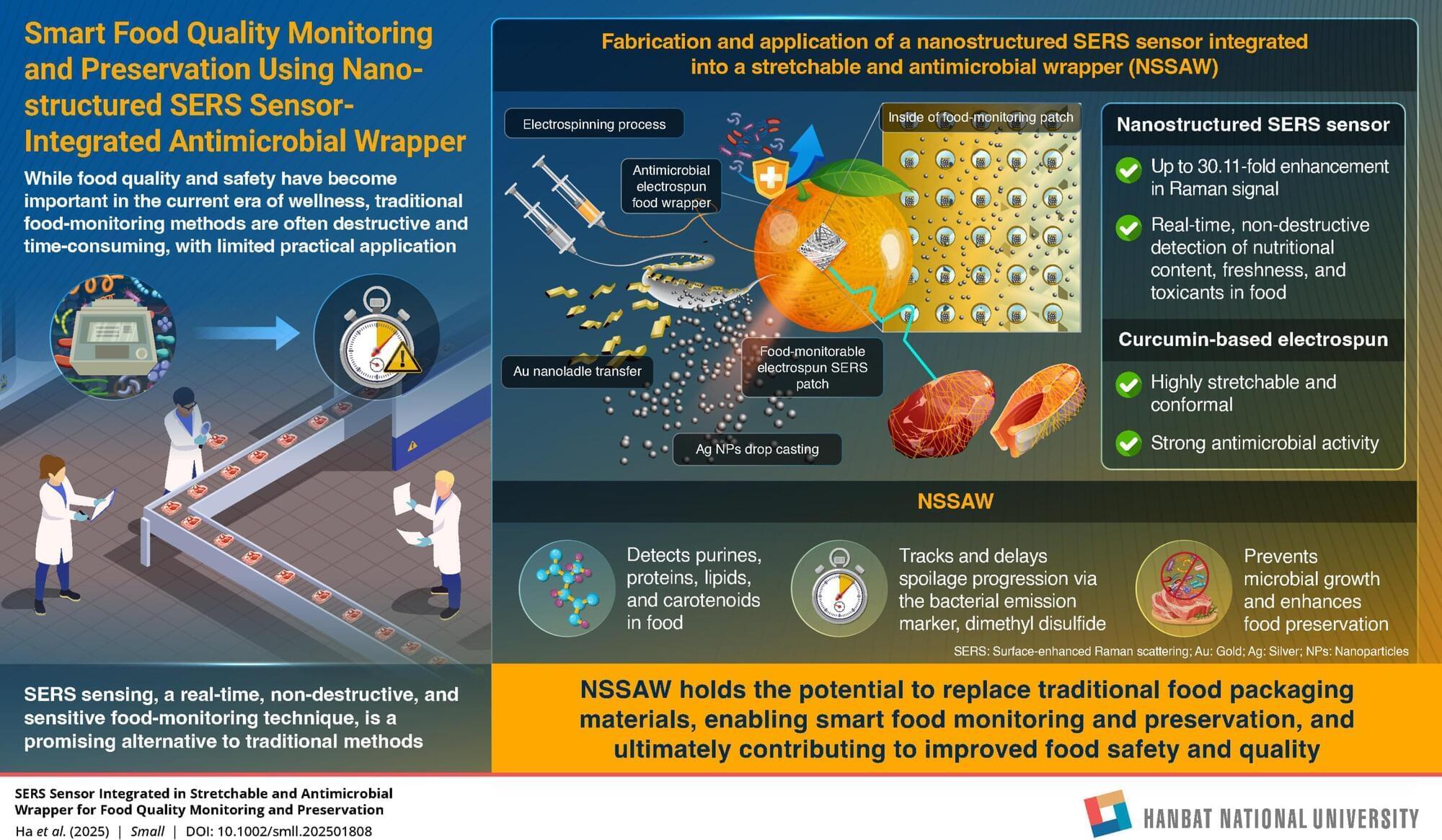

Ensuring food quality and safety is crucial. However, conventional food-monitoring methods, such as ribotyping and polymerase chain reaction, tend to be destructive and time-consuming. These shortcomings limit their use in broad, routine applications. Surface-enhanced Raman scattering (SERS) sensing, which offers real-time, non-destructive, and highly sensitive detection, is therefore a promising alternative.

A team of researchers led by Associate Professor Ji-Hwan Ha of the Department of Mechanical Engineering, Hanbat National University, Republic of Korea, has now developed a two-in-one device: a nanostructured SERS sensor integrated into a stretchable, antimicrobial wrapper (NSSAW). The wrapper not only monitors food directly at its surface but also actively helps preserve it.

Their novel findings are published in the journal Small.