Targeting the Root Causes of Aging Demonstrates Powerful Anti-Cancer Activity Across Multiple Tumor Models

MIAMI BEACH, Fla., Nov. 25, 2025

Statins have transformed heart health, saving millions of lives by lowering cholesterol and reducing the risk of heart attacks and strokes. But for many patients, these drugs come with a troubling downside: muscle pain, weakness and, in rare cases, severe muscle breakdown that can lead to kidney failure.

University of British Columbia researchers and their collaborators at the University of Wisconsin-Madison have now pinpointed the cause. Their findings, published last week in Nature Communications, could pave the way for a new generation of statins without these side effects.

A recent study points to a key bone-strengthening mechanism in the body that could be targeted to treat the bone-weakening disease osteoporosis.

Led by researchers from the University of Leipzig in Germany and Shandong University in China, the study identified the cell receptor GPR133 (also known as ADGRD1) as being crucial to bone density, via bone-building cells called osteoblasts.

Variations in the GPR133 gene had previously been linked to bone density, leading scientists to turn their attention to the protein it encodes.

Introduction: Imaging surveillance of contrast-enhancing lesions after the treatment of malignant brain tumors with radiation is plagued by an inability to reliably distinguish between tumor recurrence and treatment effects. Magnetic resonance perfusion-weighted imaging (PWI), among other advanced brain tumor imaging modalities, is a useful adjunctive tool for distinguishing between these two entities but can be clinically unreliable, leading to the need for tissue sampling to confirm diagnosis. This may be partially because clinical PWI interpretation is non-standardized and no grading criteria are used for assessment, leading to interpretation discrepancies. This variance in the interpretation of PWI and its subsequent effect on the predictive value has not been studied. Our objective is to propose structured perfusion scoring criteria and determine their effect on the clinical value of PWI.

Methods: Patients treated at a single institution between 2012 and 2022 who had prior irradiated malignant brain tumors and subsequent progression of contrast-enhancing lesions determined by PWI were retrospectively studied from CTORE (CNS Tumor Outcomes Registry at Emory). PWI was given two separate qualitative scores (high, intermediate, or low perfusion). The first (control) was assigned by a neuroradiologist in the radiology report in the course of interpretation with no additional instruction. The second (experimental) was assigned by a neuroradiologist with additional experience in brain tumor interpretation using a novel perfusion scoring rubric. The perfusion assessments were divided into three categories, each directly corresponding to the pathology-reported classification of residual tumor content. The interpretation accuracy in predicting the true tumor percentage, our primary outcome, was assessed through Chi-squared analysis, and inter-rater reliability was assessed using Cohen’s Kappa.

Results: Our 55-patient cohort had a mean age of 53.5 ± 12.2 years. The percentage agreement between the two scores was 57.4% (κ: 0.271). Upon conducting the Chi-squared analysis, we found an association with the experimental group reads (p-value: 0.014) but no association with the control group reads (p-value: 0.734) in predicting tumor recurrence versus treatment effects.

Conclusions: With our study, we showed that having an objective perfusion scoring rubric aids in improved PWI interpretation. Although PWI is a powerful tool for CNS lesion diagnosis, methodological radiology evaluation greatly improves the accurate assessment and characterization of tumor recurrence versus treatment effects by all neuroradiologists. Further work should focus on standardizing and validating scoring rubrics for PWI evaluation in tumor patients to improve diagnostic accuracy.
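The abstract reports both a raw percentage agreement and Cohen's kappa for the two sets of perfusion scores. As a rough illustration of how those two quantities relate, the sketch below computes them for two lists of categorical ratings. The function name `cohens_kappa` and the ten example ratings are hypothetical and invented for illustration only; they are not the study's data and will not reproduce its reported values.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Return (observed agreement, Cohen's kappa) for two equal-length label lists."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of cases")
    n = len(rater_a)

    # Observed agreement: fraction of cases where both raters gave the same label.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: probability that the raters agree if each assigned
    # labels independently according to their own marginal frequencies.
    count_a = Counter(rater_a)
    count_b = Counter(rater_b)
    labels = set(count_a) | set(count_b)
    p_e = sum(count_a[c] * count_b[c] for c in labels) / (n * n)

    if p_e == 1.0:
        # Both raters used a single identical label; kappa is undefined.
        return p_o, float("nan")
    return p_o, (p_o - p_e) / (1.0 - p_e)


# Hypothetical scores for ten lesions (illustrative only, not study data).
control = ["high", "high", "low", "intermediate", "low",
           "high", "intermediate", "low", "high", "low"]
experimental = ["high", "intermediate", "low", "intermediate", "high",
                "high", "low", "low", "high", "intermediate"]

agreement, kappa = cohens_kappa(control, experimental)
print(f"percentage agreement: {agreement:.1%}, kappa: {kappa:.3f}")
```

Kappa is lower than raw agreement because it subtracts the agreement expected by chance alone, which is why a majority agreement rate can still correspond to a modest kappa.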

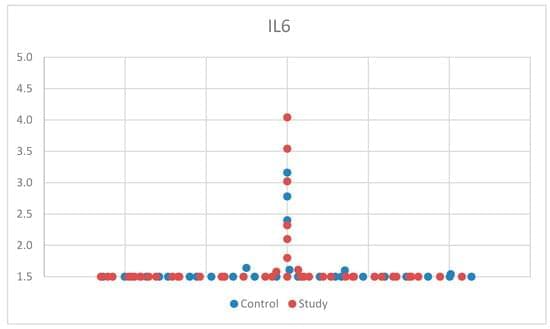

Background: Autism spectrum disorder (ASD) has seen a rise in prevalence, and the immune system’s role in brain development is increasingly recognized. This study investigates the relationship between immune dysregulation and ASD by examining serum concentrations of interleukin 6 (IL-6), interleukin 8 (CXCL8), and tumor necrosis factor alpha (TNF-alpha) in children. Methods: Serum samples from 45 children with ASD and 30 controls, aged 2 to 12 years, were analyzed using electrochemiluminescence, chemiluminescent microparticle immunoassay, and chemiluminescent immunoassay. ASD symptoms were assessed using the Autism Spectrum Rating Scale (ASRS) and Social Communication Questionnaire (SCQ). Results: No significant correlation was observed between CXCL8 levels and ASD. IL-6 levels showed a trend toward elevation in boys with ASD.

An international team of researchers has unveiled a spacecraft attitude control system that can guarantee precise stabilization and maneuvering within a predefined time, even under extreme and unpredictable space disturbances.

Published in IEEE Transactions on Industrial Electronics, the study titled “Predefined-Time Disturbance Observer-Based Attitude Tracking Control for Spacecraft: A Solution for Arbitrary Disturbances” was led by Dr. Nguyen Xuan-Mung of Sejong University (South Korea), alongside colleagues from China and Taiwan.

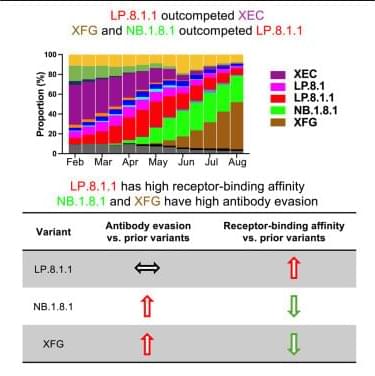

Mellis et al. show that the SARS-CoV-2 JN.1 sublineage has different virological features associated with its recently dominant subvariants. LP.8.1 has greater receptor-binding affinity than earlier strains but not increased antibody evasion, while the more recently dominant XFG and NB.1.8.1 have greater antibody evasion than LP.8.1.1.

1John P. Hussman Institute for Human Genomics and 2Department of Molecular and Cellular Pharmacology, University of Miami Miller School of Medicine, Miami, Florida, USA.

3Department of Neurology, University of Chicago, Chicago, Illinois, USA.

4Division of Pediatric Genetics, Department of Pediatrics, School of Medicine, Ege University, Izmir, Turkey.