While solid-state spin qubits show promise as quantum information platforms, their qubit-to-qubit interactions extend over too short a distance to connect many of them together, posing a problem for complex computations. Now Frankie Fung and colleagues from Harvard University have devised a mechanical method—involving a vibrating nanobeam—to connect distant spin qubits, potentially overcoming this issue [1].

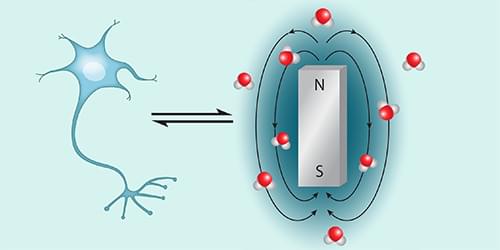

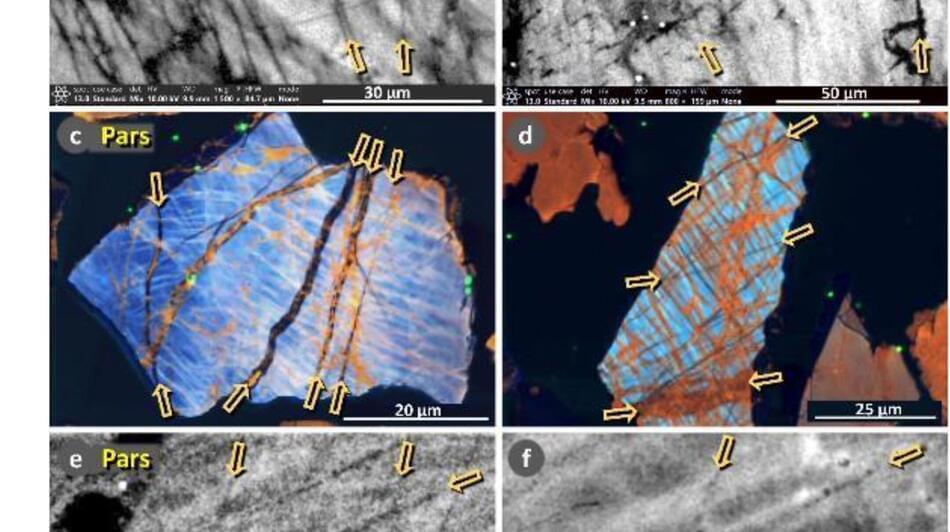

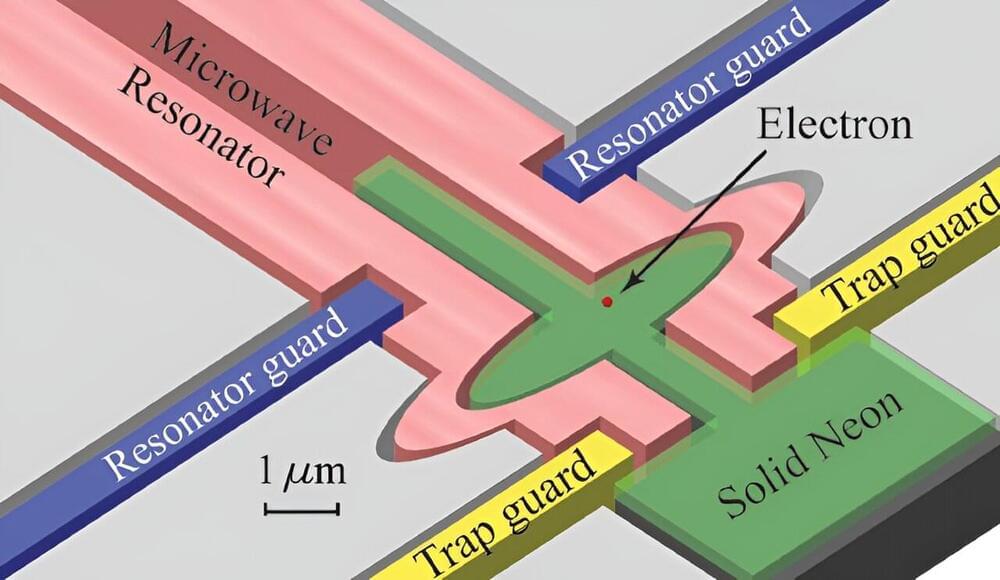

A popular solid-state spin qubit is the nitrogen-vacancy (NV) center, a single-atom defect in a diamond crystal. This system is attractive for quantum information applications, as it has both a light-sensitive electron spin state (which offers a knob for controlling the qubit) and a long-lived nuclear spin state (which acts as memory). But direct interactions between NV centers are limited to distances of a few nanometers.

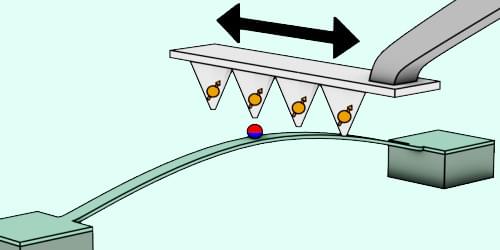

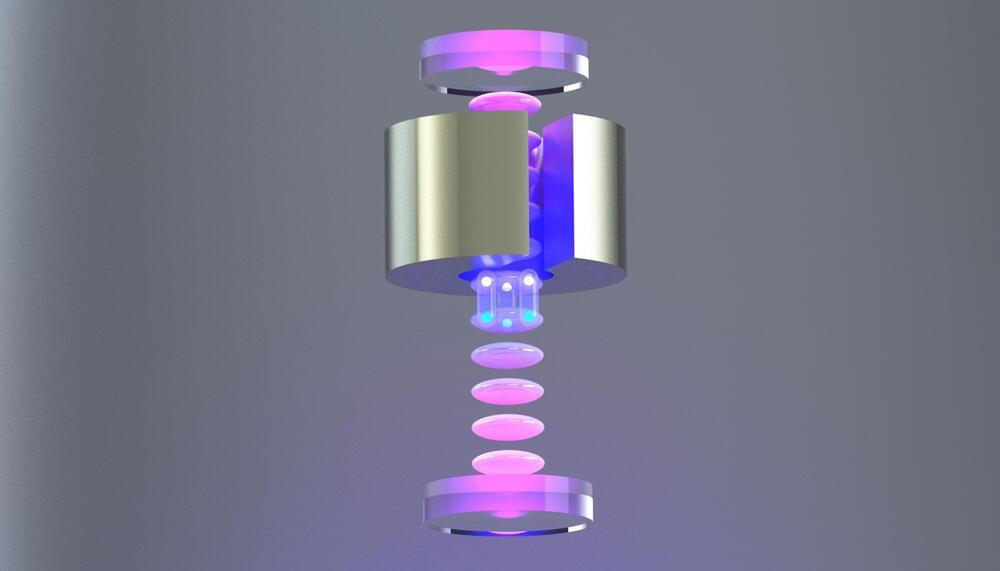

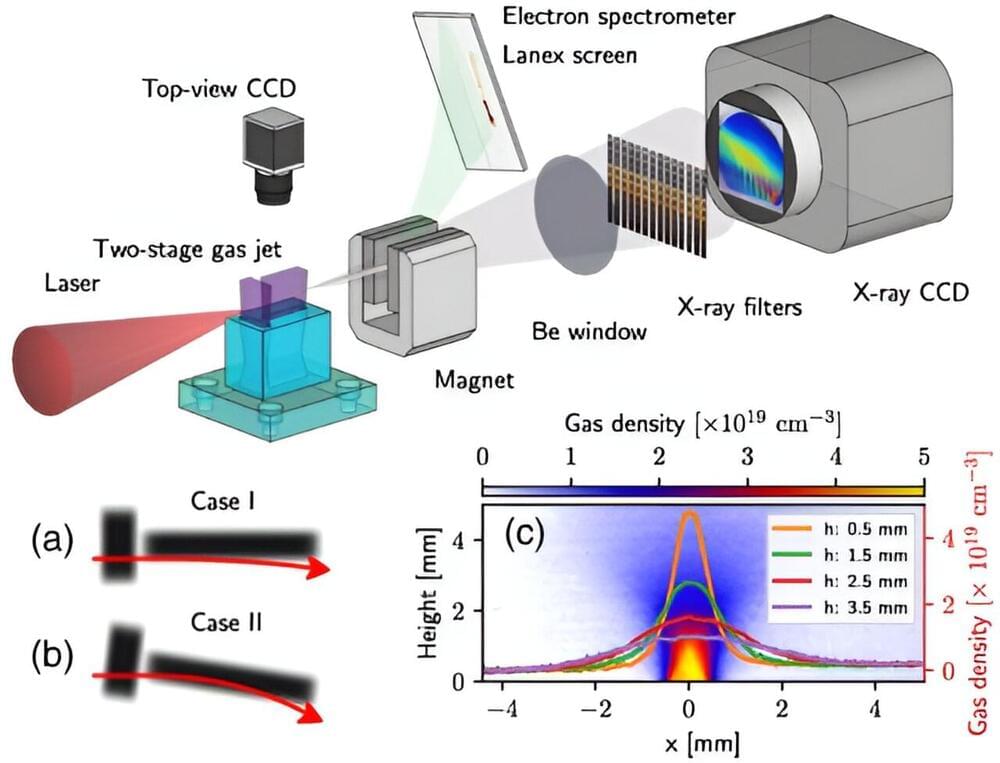

To lengthen the connections, Fung and his colleagues propose using a nanobeam fitted with a micromagnet as an intermediary between distant NV centers. The idea is to place a line of NV centers along the length of a scanning-probe-microscope tip and move the tip over the micromagnet. When a particular NV center comes close to the micromagnet, the magnetic-field interaction should entangle the vibrational state of the nanobeam with the spin state of that NV center. The nanobeam then shares this quantum information with the next NV center along the line.