Artificial intelligence (AI) systems are computational models that can learn to identify patterns in data, make accurate predictions or generate content (e.g., texts, images, videos or sound recordings). These models can reliably complete a wide range of tasks and are now also being used to carry out research across many different fields.

Over the past few decades, some AI models have proved promising for the early diagnosis and study of specific diseases and neuropsychiatric conditions. For instance, by analyzing large numbers of brain scans collected using a noninvasive technique known as magnetic resonance imaging (MRI), AI models can uncover patterns associated with tumors, strokes and neurodegenerative diseases, which could help clinicians diagnose these conditions.

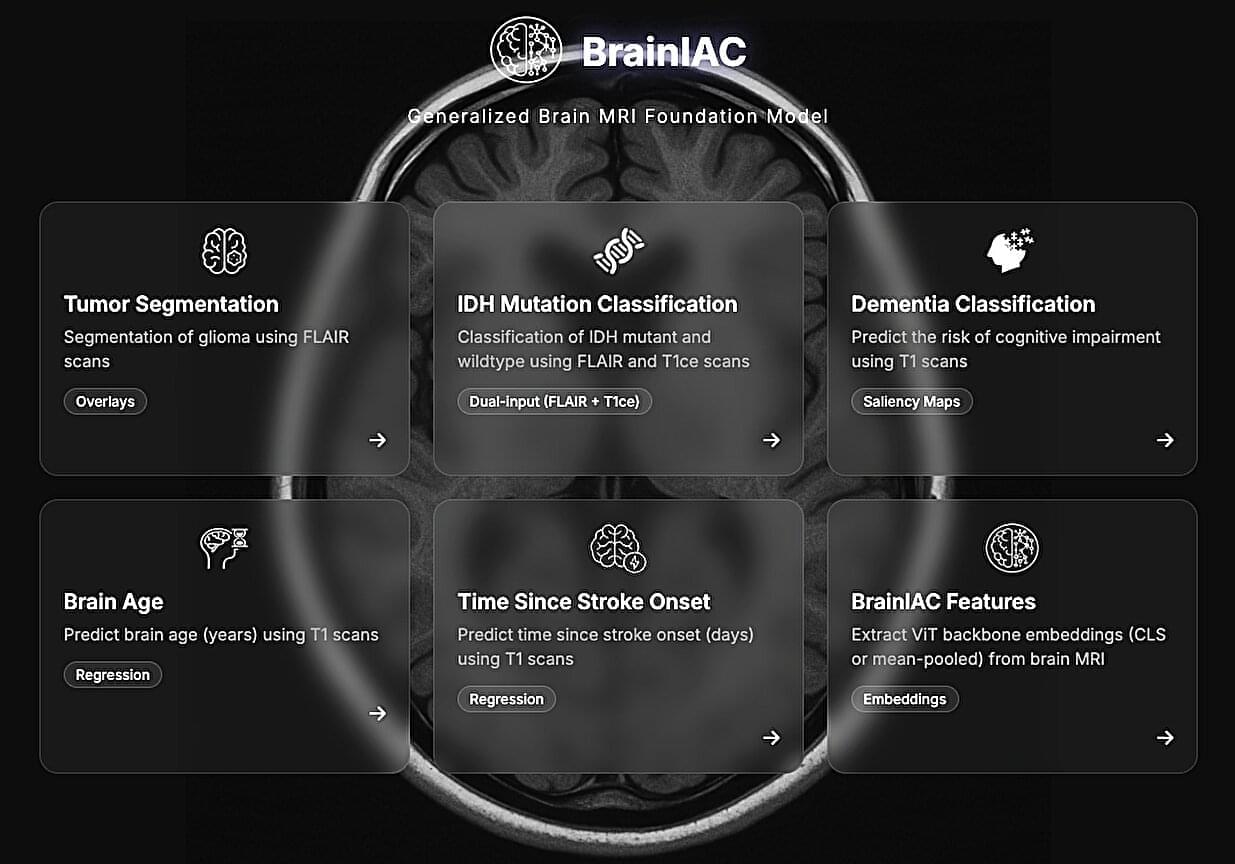

Researchers at Mass General Brigham, Harvard Medical School and other institutes recently developed Brain Imaging Adaptive Core (BrainIAC), a large AI system that is pre-trained on a vast pool of MRI data and can then be adapted to tackle different tasks. This foundation model, presented in a paper published in Nature Neuroscience, was found to outperform many models that had been trained specifically for individual medical or neuroscience-related tasks.