A high-altitude balloon telescope has revealed new patterns in X-rays emitted near the black hole Cygnus X-1. These signals give scientists a clearer picture of how gas and radiation behave in the most extreme environments known.

Researchers found persistent microclot and NET structures in Long COVID blood that may explain long-lasting symptoms.

Researchers examining Long COVID have identified a structural connection between circulating microclots and neutrophil extracellular traps (NETs). The discovery indicates that the two may interact in the body in ways that could lead to harmful effects when these processes become unregulated.

The Mexican government will build a supercomputer with a processing capacity seven times greater than the current most powerful computer in Latin America, officials responsible for the project said Wednesday.

Named Coatlicue, after a goddess in Aztec mythology representing the source of power and life, the computer will have a processing capacity of 314 petaflops.

“We want it to be a public supercomputer, a supercomputer for the people,” President Claudia Sheinbaum told reporters.

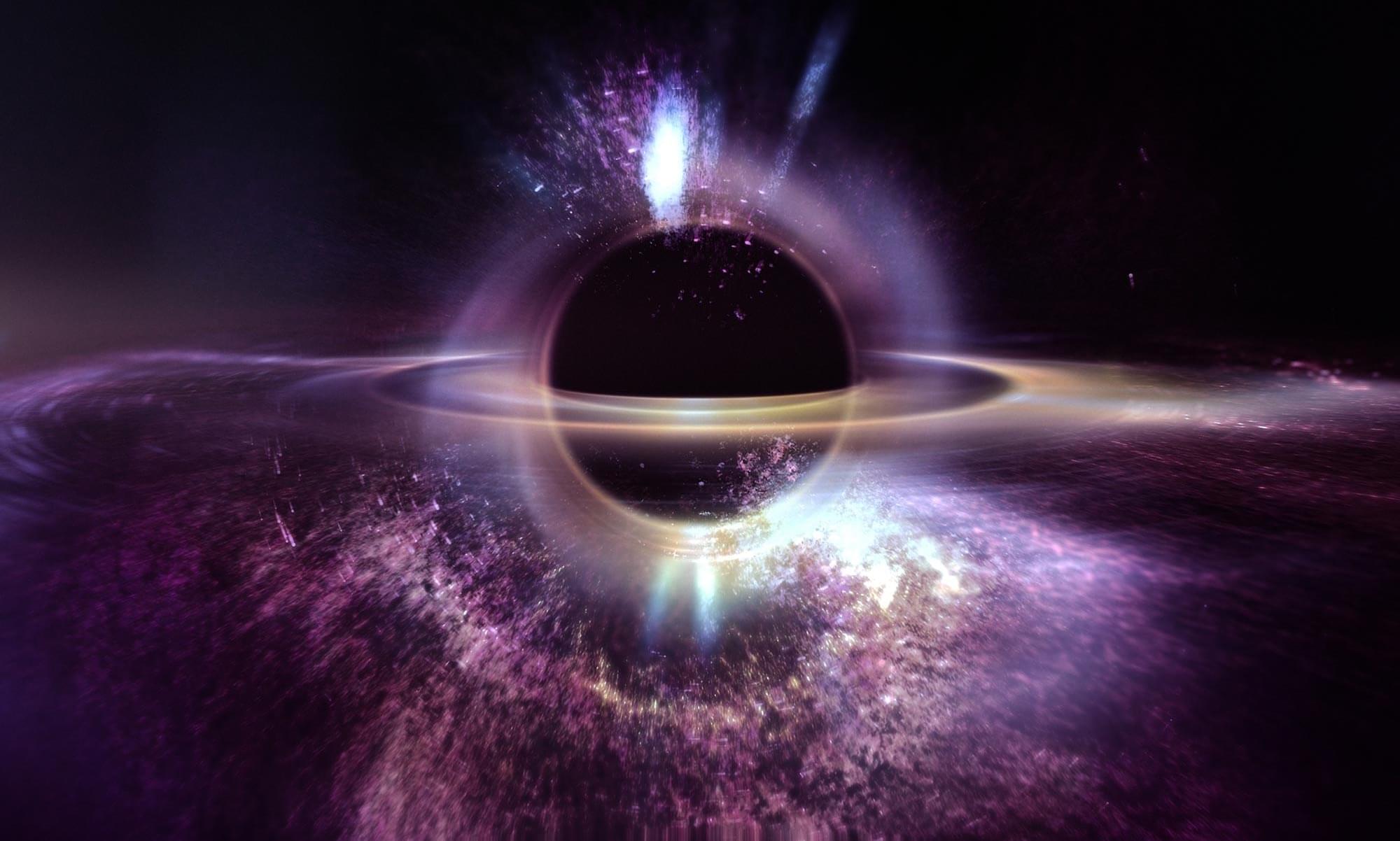

It’s not every day that biologists announce an entirely new branch of life, and this one has been hiding under their noses for years.

It was discovered hiding in a lab sample of marine ciliates scientists had been tending to since collecting them from Croatian waters in 2011. But it wasn’t until the ciliates suddenly died that this new, tiny creature, which scientists have named Solarion arienae, came into view.

“This organism allows us to look into a very ancient chapter of cellular evolution that we previously could reconstruct only indirectly,” say protistologists Ivan Čepička and Marek Valt, from Charles University in the Czech Republic, lead authors of the study.

Cybersecurity researchers have shed light on a cross-tenant blind spot that allows attackers to bypass Microsoft Defender for Office 365 protections via the guest access feature in Teams.

“When users operate as guests in another tenant, their protections are determined entirely by that hosting environment, not by their home organization,” Ontinue security researcher Rhys Downing said in a report.

“These advancements increase collaboration opportunities, but they also widen the responsibility for ensuring those external environments are trustworthy and properly secured.”

A 44-year-old man was sentenced to seven years and four months in prison for operating an “evil twin” WiFi network to steal the data of unsuspecting travelers during flights and at various airports across Australia.

The man, an Australian national, was charged in July 2024 after Australian authorities had confiscated his equipment in April and confirmed that he was engaging in malicious activities during domestic flights and at airports in Perth, Melbourne, and Adelaide.

Specifically, the man set up an access point using a ‘WiFi Pineapple’ portable wireless access device and gave the rogue network the same name (SSID) as the legitimate airport networks.

GreyNoise Labs has launched a free tool called GreyNoise IP Check that lets users check whether their IP address has been observed in malicious scanning operations, such as those run through botnets and residential proxy networks.

The threat monitoring firm, which tracks internet-wide activity via a global sensor network, says this problem has grown significantly over the past year, with many users unknowingly helping malicious online activity.

“Over the past year, residential proxy networks have exploded and have been turning home internet connections into exit points for other people’s traffic,” explains GreyNoise.