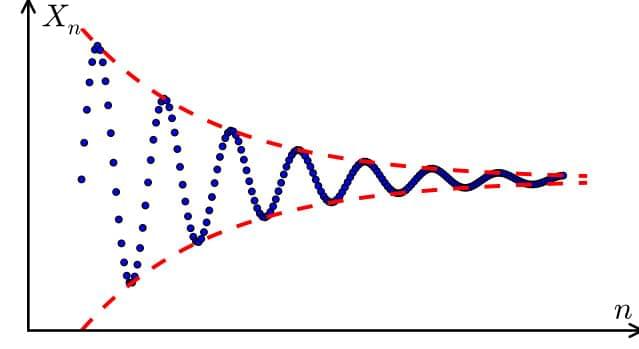

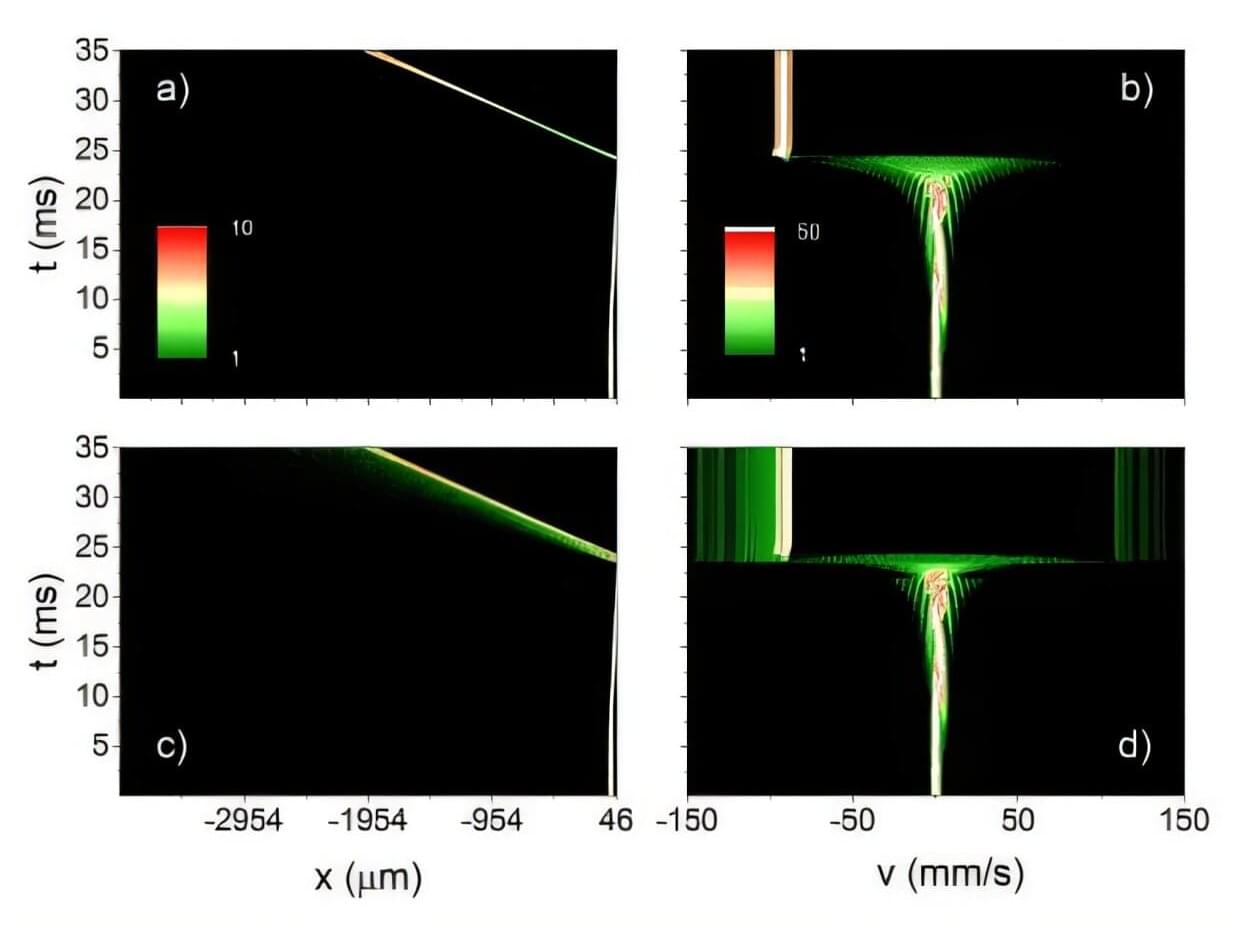

Comparing our findings across species with those across human development led us to a final question. If the purpose of sleep shifts from neural reorganisation in childhood to repair once we're grown, when exactly does that transition occur, and how sudden is it? Armed with our new theory plus human developmental data, we could answer this question with surprising accuracy: the transition occurs when we're very young, at about 2.5 years of age, and it happens extremely abruptly, like water freezing at 0°C.

We were delighted with this stunning result. First, it gave us an even greater appreciation for the critical role of sleep: never again would we underestimate its importance for our children, especially in their first few years of life, when their sleep is doing something so fundamentally different and extraordinarily important, something that seemingly can't be made up for later in life. Second, we had discovered that sleep before and after the stark dividing line of 2.5 years of age, while it looks remarkably similar from the outside, is actually analogous to two completely different states of matter. Before 2.5 years, our brains are more fluid and plastic, enabling us to learn and adapt quickly, much like liquid water flowing around obstacles. After 2.5 years, our brains are much more crystalline and frozen: still capable of learning and adapting, but more like glaciers slowly pushing across a landscape.

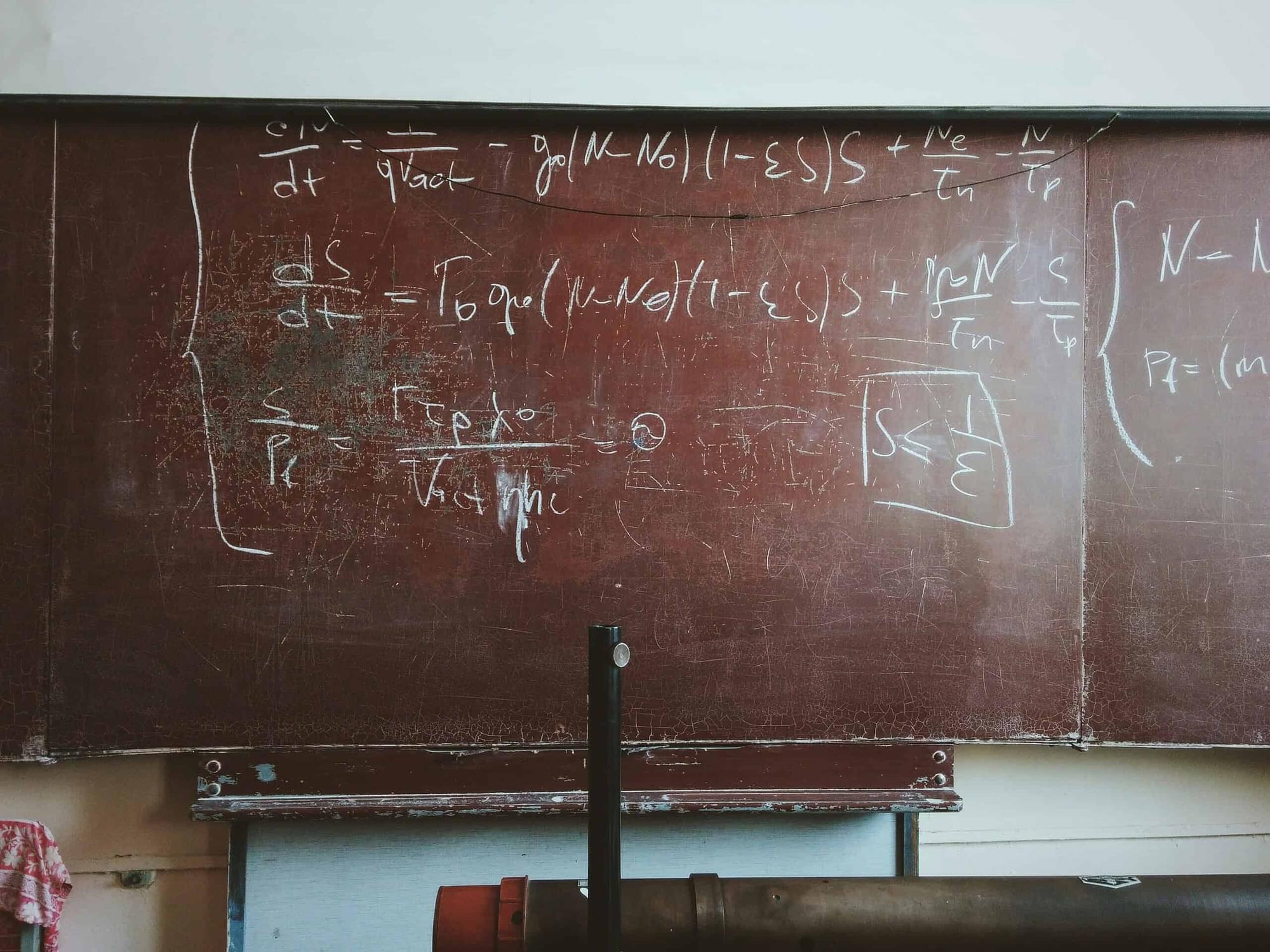

Many questions still remain. How much does sleep vary across humans and across species? Can this early fluid phase of sleep be extended? Is this phase already extended or shortened in some individuals, and what costs or benefits are associated with that? What other functions of sleep have piggybacked onto the primary functions of repair and neural reorganisation? How do the different reasons for sleep compete for or share sleep time, either across ages or even within a single night? It will take much more work to fully unravel the mysteries of sleep, but our recent insights (about age-based shifts in the purpose of sleep, and the mathematical, predictive theories that quantify them) provide an essential tool for plumbing these depths even further.