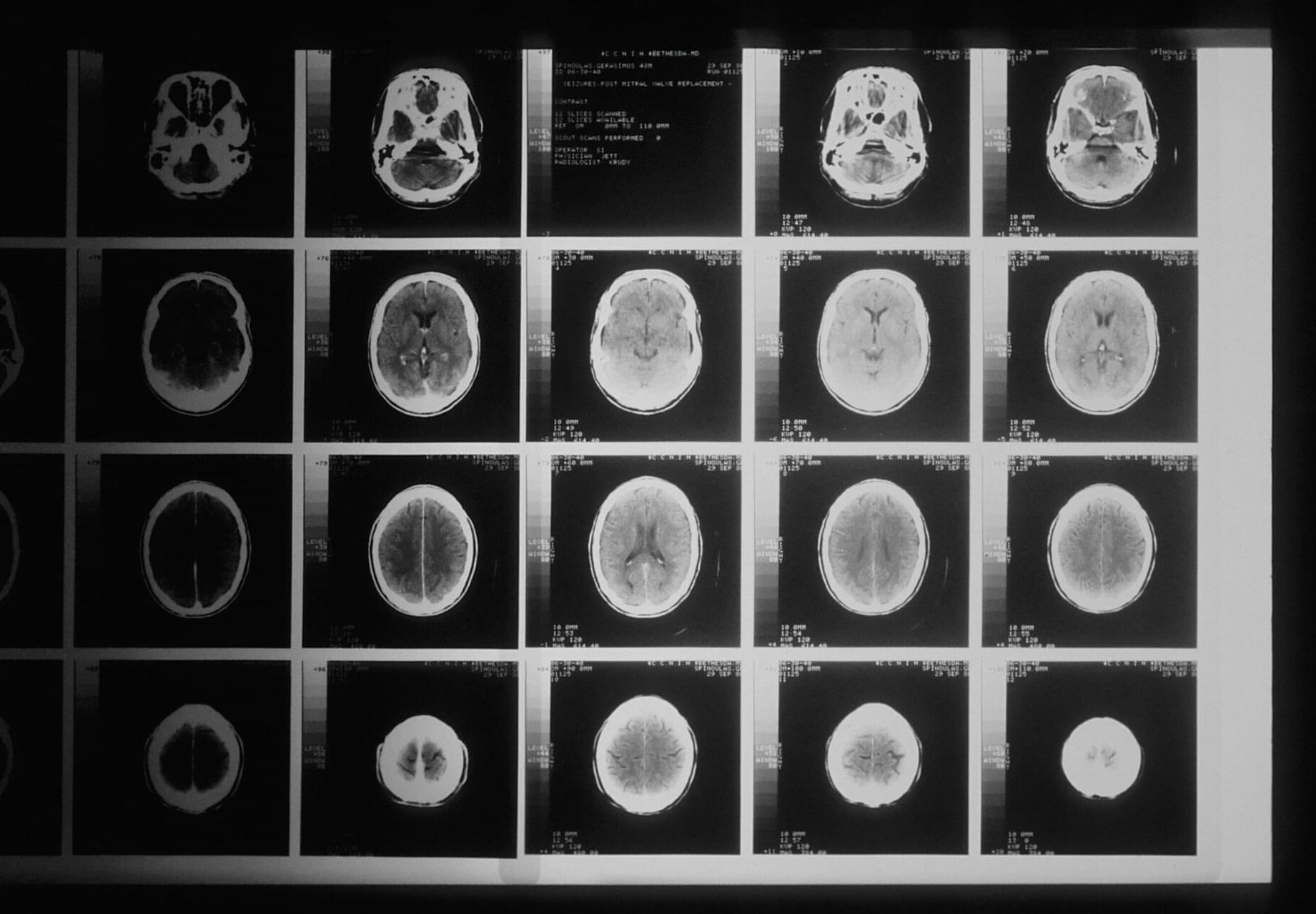

Researchers at Rice University have developed a new machine learning (ML) algorithm that excels at interpreting the “light signatures” (optical spectra) of molecules, materials and disease biomarkers, potentially enabling faster and more precise medical diagnoses and sample analysis.

“Imagine being able to detect early signs of diseases like Alzheimer’s or COVID-19 just by shining a light on a drop of fluid or a tissue sample,” said Ziyang Wang, an electrical and computer engineering doctoral student at Rice who is a first author on a study published in ACS Nano. “Our work makes this possible by teaching computers how to better ‘read’ the signal of light scattered from tiny molecules.”

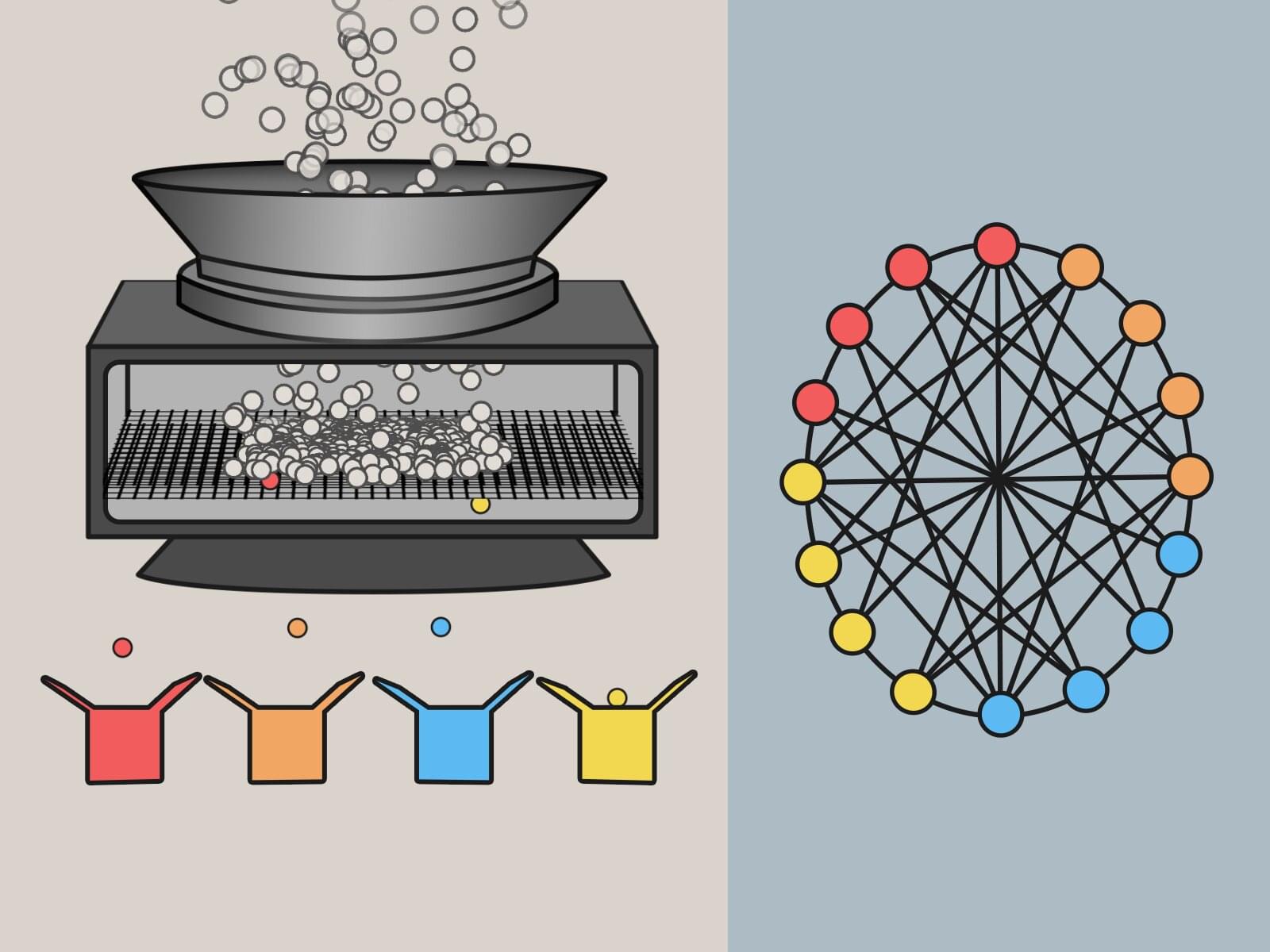

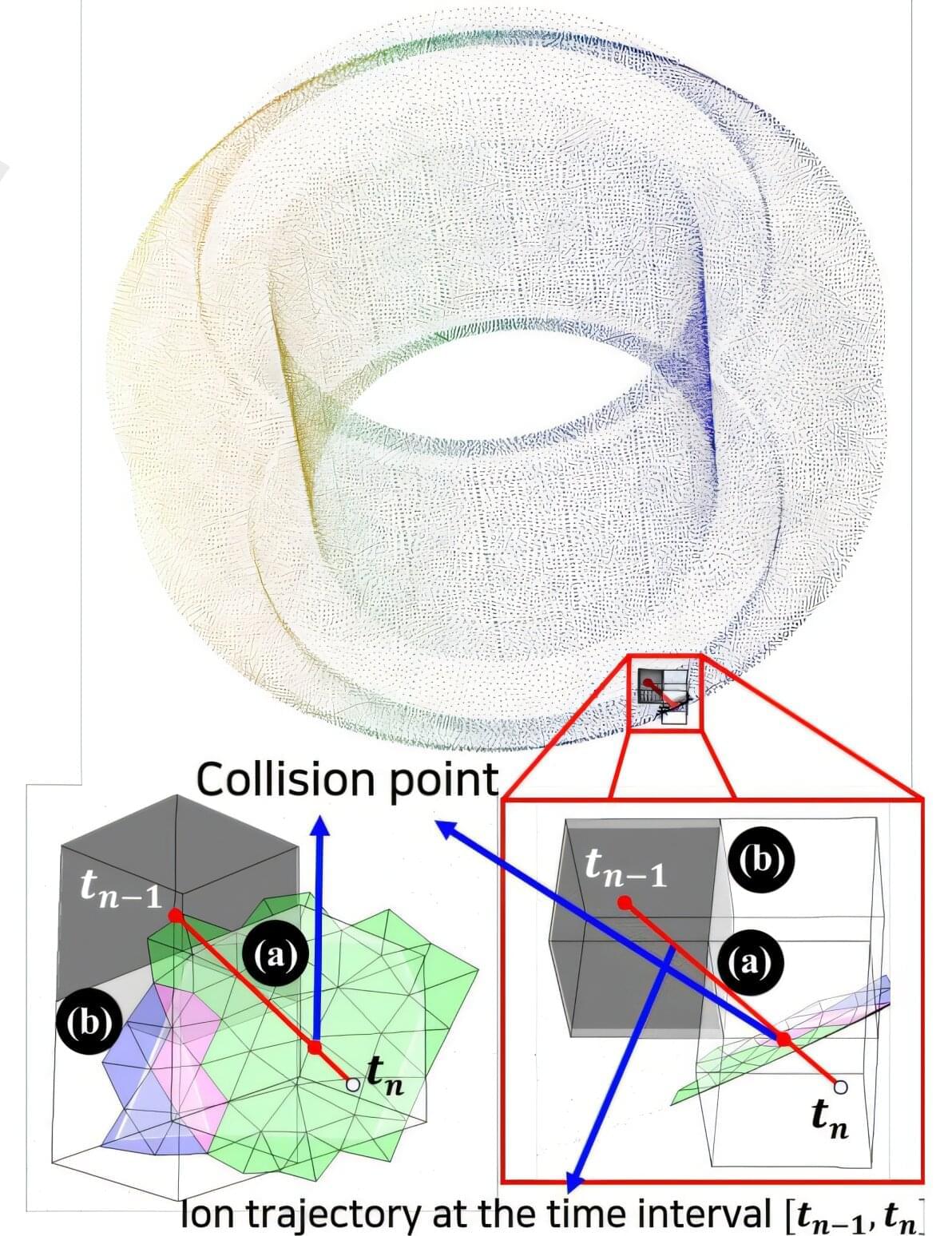

Every material or molecule interacts with light in a unique way, producing a distinct pattern, like a fingerprint. Optical spectroscopy, which entails shining a laser on a material to observe how light interacts with it, is widely used in chemistry, materials science and medicine. However, interpreting spectral data can be difficult and time-consuming, especially when differences between samples are subtle. The new algorithm, called Peak-Sensitive Elastic-net Logistic Regression (PSE-LR), is specially designed to analyze light-based data.
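To make the name more concrete, here is a minimal sketch of ordinary elastic-net logistic regression applied to synthetic spectra. It is not PSE-LR: the article does not describe what the "peak-sensitive" component does, so that part is left out entirely. The spectra, peak positions, noise level, and regularization values (`lam`, `l1_ratio`) are all made-up assumptions for illustration, and the function names are hypothetical.

```python
import numpy as np

def make_spectra(n_per_class=100, n_channels=120, peak_center=60, seed=0):
    """Synthetic two-class spectra (assumed data, not from the study).
    Both classes share one large peak; class 1 adds a small extra peak."""
    rng = np.random.default_rng(seed)
    x = np.arange(n_channels)
    shared = np.exp(-0.5 * ((x - 30) / 8.0) ** 2)
    extra = 0.5 * np.exp(-0.5 * ((x - peak_center) / 3.0) ** 2)
    labels = np.repeat([0, 1], n_per_class)
    spectra = shared + np.outer(labels, extra)
    spectra += rng.normal(scale=0.1, size=spectra.shape)
    return spectra, labels

def soft_threshold(w, t):
    """Proximal operator of the L1 penalty: shrink toward zero by t."""
    return np.sign(w) * np.maximum(np.abs(w) - t, 0.0)

def fit_elastic_net_logreg(X, y, lam=0.02, l1_ratio=0.5, n_iter=2000):
    """Elastic-net logistic regression fitted by proximal gradient descent.
    Penalty: lam * (l1_ratio * ||w||_1 + 0.5 * (1 - l1_ratio) * ||w||_2^2)."""
    n, d = X.shape
    mu = X.mean(axis=0)
    Xc = X - mu                      # center each channel
    ridge = lam * (1.0 - l1_ratio)
    # Upper bound on the curvature of the smooth part (log-loss + ridge term).
    lipschitz = max(np.linalg.norm(Xc, 2) ** 2, n) / (4.0 * n) + ridge
    step = 1.0 / lipschitz
    w, b = np.zeros(d), 0.0
    for _ in range(n_iter):
        z = np.clip(Xc @ w + b, -30, 30)
        p = 1.0 / (1.0 + np.exp(-z))             # predicted probabilities
        grad_w = Xc.T @ (p - y) / n + ridge * w  # gradient of the smooth part
        grad_b = np.mean(p - y)
        w = soft_threshold(w - step * grad_w, step * lam * l1_ratio)
        b -= step * grad_b
    return w, b, mu

X, y = make_spectra()
w, b, mu = fit_elastic_net_logreg(X, y)

pred = ((X - mu) @ w + b) > 0
accuracy = np.mean(pred == y)            # training accuracy only
top_channel = int(np.argmax(np.abs(w)))  # channel with the largest weight

print("training accuracy:", accuracy)
print("nonzero weights:", np.count_nonzero(w), "of", w.size)
print("most informative channel:", top_channel)
```

In this toy setup the L1 part of the penalty sets most channel weights to exactly zero, while the L2 part lets neighboring channels within the same peak share weight. That combination is one reason elastic-net models are a common starting point for spectra, where adjacent channels are strongly correlated.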