Quantum computers—devices that process information using quantum mechanical effects—have long been expected to outperform classical systems on certain tasks. Over the past few decades, researchers have worked to rigorously demonstrate such advantages, ideally in ways that are provable, verifiable and experimentally realizable.

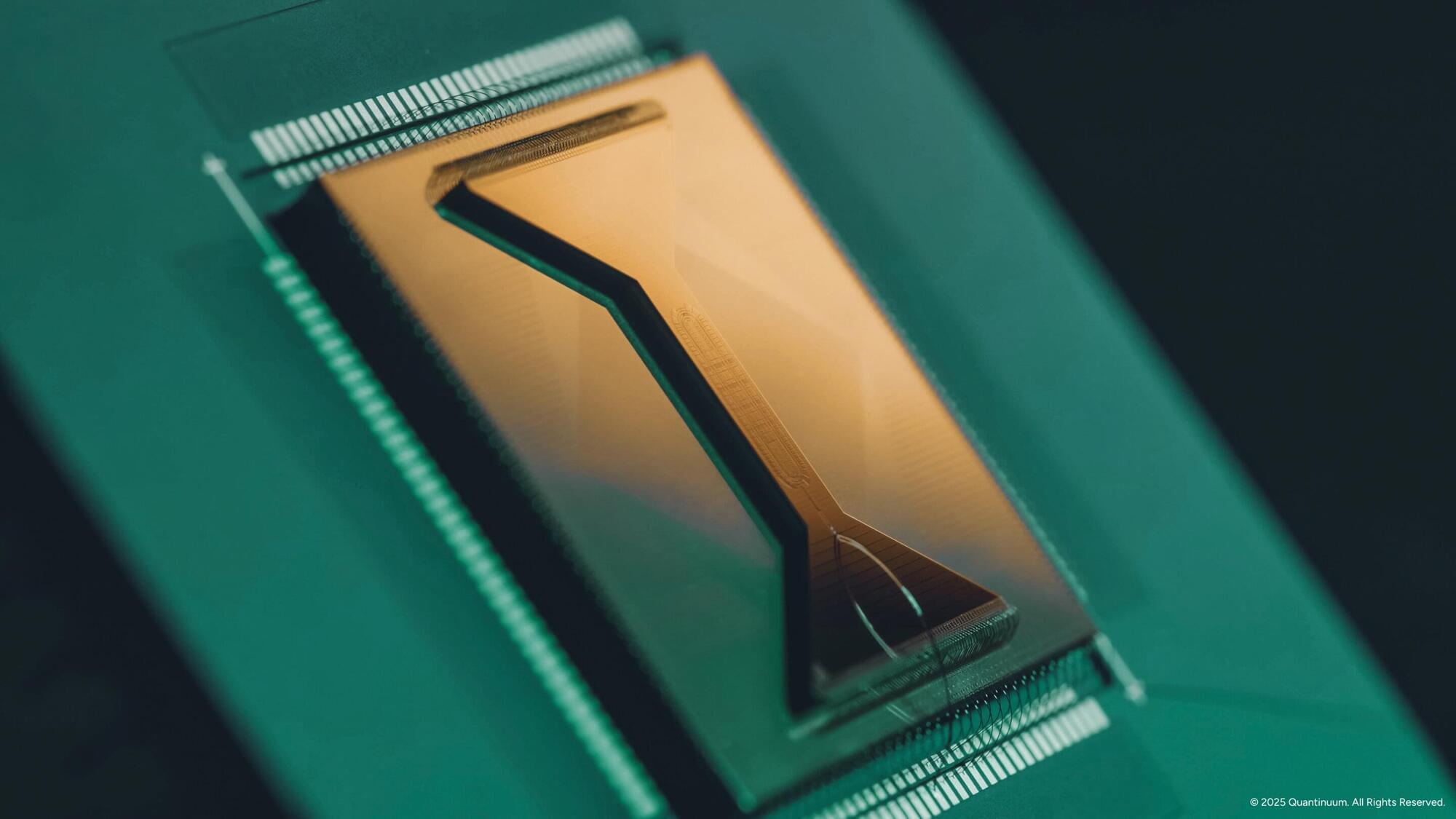

A team of researchers working at Quantinuum in the United Kingdom and QuSoft in the Netherlands has now developed a quantum algorithm that solves a specific sampling task—known as complement sampling—dramatically more efficiently than any classical algorithm. Their paper, published in Physical Review Letters, establishes a provable and verifiable quantum advantage in sample complexity: the number of samples required to solve a problem.

“We stumbled upon the core result of this work by chance while working on a different project,” Harry Buhrman, co-author of the paper, told Phys.org. “We had a set of items and two quantum states: one formed from half of the items, the other formed from the remaining half. Even though the two states are fundamentally distinct, we showed that a quantum computer may find it hard to tell which one it is given. Surprisingly, however, we then realized that transforming one state into the other is always easy, because a simple operation can swap between them.”
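
The swap Buhrman describes can be illustrated with a small classical simulation. The sketch below is not taken from the paper. It assumes that each state is a uniform superposition over its half of the items, and it uses a reflection about the uniform superposition over all items as the swapping operation. The helper names `subset_state` and `reflect_about_uniform` are hypothetical and chosen only for this illustration.

```python
import math
import random


def subset_state(items, n):
    """Amplitude vector of a uniform superposition over `items` (assumed form of the state)."""
    amp = 1.0 / math.sqrt(len(items))
    members = set(items)
    return [amp if x in members else 0.0 for x in range(n)]


def reflect_about_uniform(state):
    """Apply 2|u><u| - I, where |u> is the uniform superposition over all n items.

    For real amplitudes this maps each amplitude a to 2 * mean(state) - a.
    """
    mean = sum(state) / len(state)
    return [2.0 * mean - a for a in state]


n = 8  # total number of items (illustrative choice)
random.seed(0)
subset = sorted(random.sample(range(n), n // 2))        # one half of the items
complement = [x for x in range(n) if x not in subset]   # the remaining half

# Reflecting the subset state should yield the complement state.
swapped = reflect_about_uniform(subset_state(subset, n))
target = subset_state(complement, n)
is_swapped = all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(swapped, target))
print(is_swapped)
```

Under these assumptions, the reflection moves all of the amplitude from the chosen half onto the remaining half. Because a reflection is its own inverse, applying it a second time returns the original state. Simulating the amplitudes classically like this only works for small sets and says nothing about how hard the two states are to tell apart.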