By making remarkable breakthroughs in a number of fields, unlocking new approaches to science, and accelerating the pace of science and innovation.

In 2020, Google’s AI lab DeepMind announced that its algorithm, AlphaFold, had solved the protein-folding problem. At first, the stunning claim was met with excitement from most scientists, who are always ready to test a new tool, and with amusement from some. After all, wasn’t this the same company whose algorithm AlphaGo had defeated the world champion in the Chinese strategy game Go just a few years before? Mastering a game more complex than chess, difficult as that is, felt trivial compared to the protein-folding problem. But AlphaFold proved its scientific mettle by sweeping a biennial competition in which teams of biologists predict the structure of proteins based only on their amino acid sequences. The algorithm far outpaced its human rivals, posting predictions that matched the final shape to within about an angstrom, roughly the width of a single atom. Soon after, AlphaFold passed its first real-world test by correctly predicting the shape of the SARS-CoV-2 ‘spike’ protein, the virus’s conspicuous surface protein that is targeted by vaccines.

The success of AlphaFold soon became impossible to ignore, and scientists began trying out the algorithm in their labs. In 2021, the journal Science named AI-powered protein structure prediction, led by an open-source version of AlphaFold, its “Breakthrough of the Year.” H. Holden Thorp, the journal’s editor-in-chief, wrote in an editorial, “The breakthrough in protein-folding is one of the greatest ever in terms of both the scientific achievement and the enabling of future research.” Today, AlphaFold’s predictions are so accurate that the protein-folding problem is widely considered solved after more than 70 years of searching. And while the protein-folding problem may be the highest-profile achievement of AI in science to date, artificial intelligence is quietly making discoveries in a number of other scientific fields.

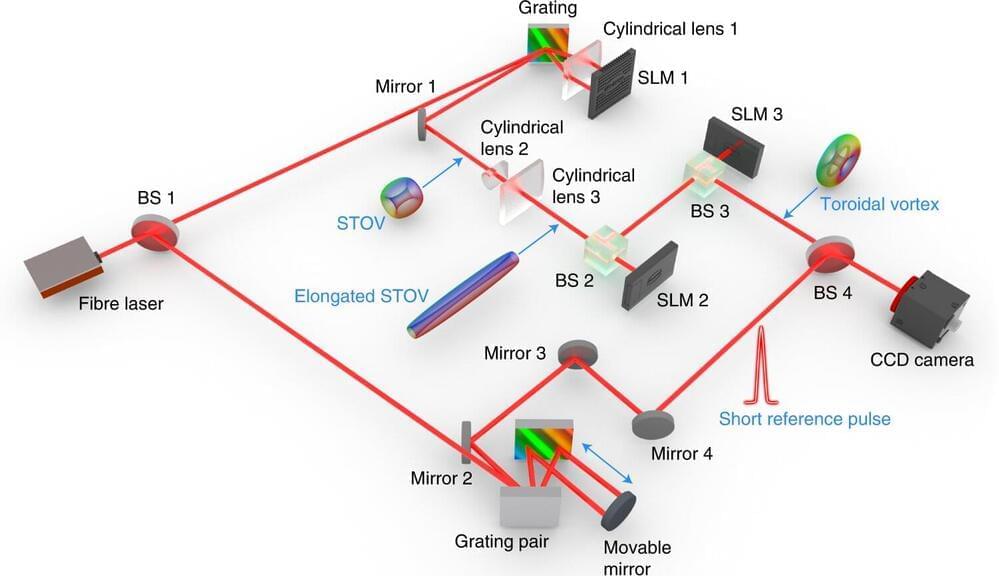

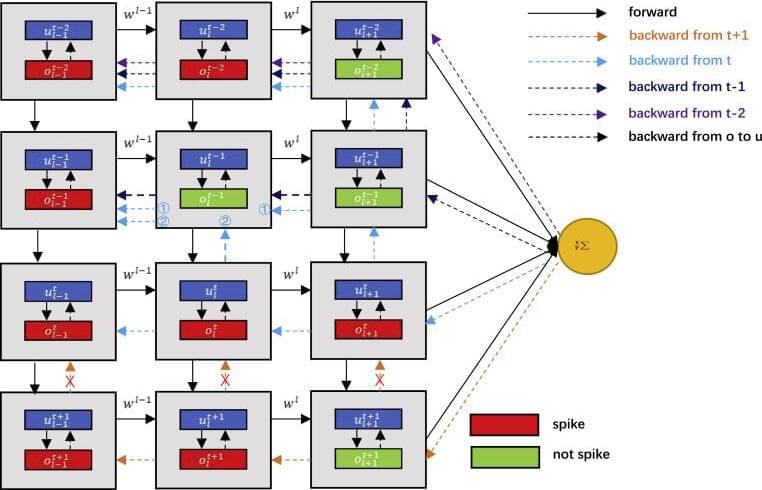

By turbocharging the discovery process and providing scientists with new investigative tools, AI is also transforming how science is done. The technology upgrades research mainstays like microscopes and genome sequencers, adding new technical capacities to the instruments and making them more powerful. AI-powered drug design and gravitational-wave detectors offer scientists new tools to probe and control the natural world. Away from the lab bench, AI can also deploy advanced simulation capabilities and reasoning systems to develop real-world models and test hypotheses against them. With manifold impacts spanning the length of the scientific method, AI is ushering in a scientific revolution through groundbreaking discoveries, novel techniques and augmented tools, and automated methods that improve the speed and accuracy of the scientific process.