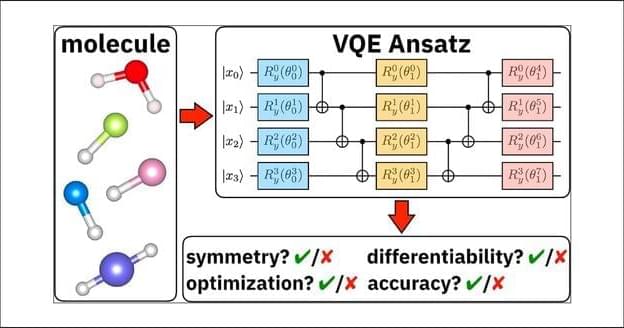

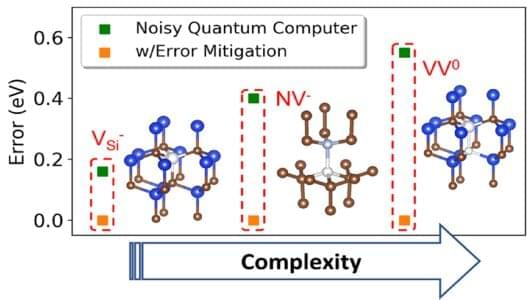

Advances in quantum computation for electronic structure, and particularly heuristic quantum algorithms, create an ongoing need to characterize the performance and limitations of these methods. Here we discuss some potential pitfalls connected with the use of hardware-efficient Ansätze in variational quantum simulations of electronic structure. We illustrate that hardware-efficient Ansätze may break Hamiltonian symmetries and yield nondifferentiable potential energy curves, in addition to the well-known difficulty of optimizing variational parameters. We discuss the interplay between these limitations through a comparative analysis of hardware-efficient Ansätze versus unitary coupled cluster and full configuration interaction, and of second- and first-quantization strategies for encoding fermionic degrees of freedom into qubits.
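The symmetry-breaking claim can be made concrete with a toy two-qubit example. The sketch below assumes a Jordan-Wigner encoding of two spin orbitals (qubit state |1> means occupied) and a hypothetical one-layer hardware-efficient circuit made of RY rotations followed by a CNOT; the circuit layout and the function names (`hea_state`, `givens`, `number_sector_weights`) are illustrative choices, not the circuits studied in the paper. It starts from a one-electron state and reports how the probability is distributed over particle-number sectors. For contrast, it also applies a number-conserving Givens rotation, which is the form a single-excitation unitary coupled cluster factor takes for two adjacent spin orbitals (up to sign conventions).

```python
import numpy as np

def ry(theta):
    """Single-qubit rotation RY(theta) = exp(-i theta Y / 2), a real 2x2 matrix."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])

# CNOT with qubit 0 as control and qubit 1 as target; basis order |q0 q1> = 00, 01, 10, 11
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=float)

# Electron count of each basis state under Jordan-Wigner (|1> = occupied orbital)
OCCUPATIONS = np.array([0, 1, 1, 2])

def initial_state():
    """One electron in spin orbital 0: |q0=1, q1=0>, i.e. basis index 0b10."""
    psi = np.zeros(4)
    psi[0b10] = 1.0
    return psi

def hea_state(theta0, theta1):
    """Hypothetical one-layer hardware-efficient circuit: RY on each qubit, then CNOT."""
    layer = CNOT @ np.kron(ry(theta0), ry(theta1))
    return layer @ initial_state()

def givens(theta):
    """Number-conserving rotation mixing |01> and |10>; identity on |00> and |11>."""
    c, s = np.cos(theta), np.sin(theta)
    g = np.eye(4)
    g[1, 1], g[1, 2], g[2, 1], g[2, 2] = c, -s, s, c
    return g

def number_sector_weights(psi):
    """Total probability in each particle-number sector N = 0, 1, 2."""
    probs = np.abs(psi) ** 2
    return {n: float(probs[OCCUPATIONS == n].sum()) for n in (0, 1, 2)}

if __name__ == "__main__":
    print(number_sector_weights(hea_state(0.0, 0.0)))
    print(number_sector_weights(hea_state(np.pi / 2, np.pi / 2)))
    print(number_sector_weights(givens(0.7) @ initial_state()))
```

Even with all rotation angles at zero, the CNOT alone maps |10> to |11>, so the toy circuit returns a two-electron state; for angles (π/2, π/2) the weights come out at about 0.25, 0.5 and 0.25 in the N = 0, 1, 2 sectors. The Givens rotation, by construction, keeps all of the weight in the N = 1 sector for any angle.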