Effective compression is about finding patterns that make data smaller without losing information. When an algorithm or model can accurately guess the next piece of data in a sequence, it shows that it is good at spotting these patterns. This links the idea of making good guesses, which is what large language models like GPT-4 do very well, to achieving good compression.
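To make the link between guessing and compression concrete, here is a minimal Python sketch. It relies on a standard result from information theory: a symbol predicted with probability p can be encoded in about -log2(p) bits, so better predictions mean fewer bits. The two toy models below (`uniform_model` and `make_frequency_model`) are hypothetical illustrations invented for this example, not anything from the paper or from a real language model.

```python
import math
from collections import Counter

def ideal_code_length_bits(data: bytes, predict) -> float:
    """Sum -log2 p(symbol | context) over the sequence.

    This is the size an ideal entropy coder would approach when driven
    by the probabilities that `predict` supplies.
    """
    total_bits = 0.0
    for i, symbol in enumerate(data):
        p = predict(data[:i], symbol)
        total_bits += -math.log2(p)
    return total_bits

def uniform_model(context: bytes, symbol: int) -> float:
    # Knows nothing: every one of the 256 byte values is equally likely.
    return 1.0 / 256

def make_frequency_model(sample: bytes):
    # Toy predictor (an assumption for illustration): byte frequencies
    # from a sample, with add-one smoothing so no byte gets probability 0.
    counts = Counter(sample)
    total = len(sample)

    def predict(context: bytes, symbol: int) -> float:
        return (counts[symbol] + 1) / (total + 256)

    return predict

text = b"aaaaabbbbb" * 10
uniform_bits = ideal_code_length_bits(text, uniform_model)
frequency_bits = ideal_code_length_bits(text, make_frequency_model(text))
print(f"uniform guesses:   {uniform_bits:.0f} bits")
print(f"frequency guesses: {frequency_bits:.0f} bits")
```

The uniform model spends 8 bits on every byte, while the frequency model spends far fewer because its guesses are closer to the data. A large language model plays the same role as `predict`, only with much sharper, context-aware probabilities.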

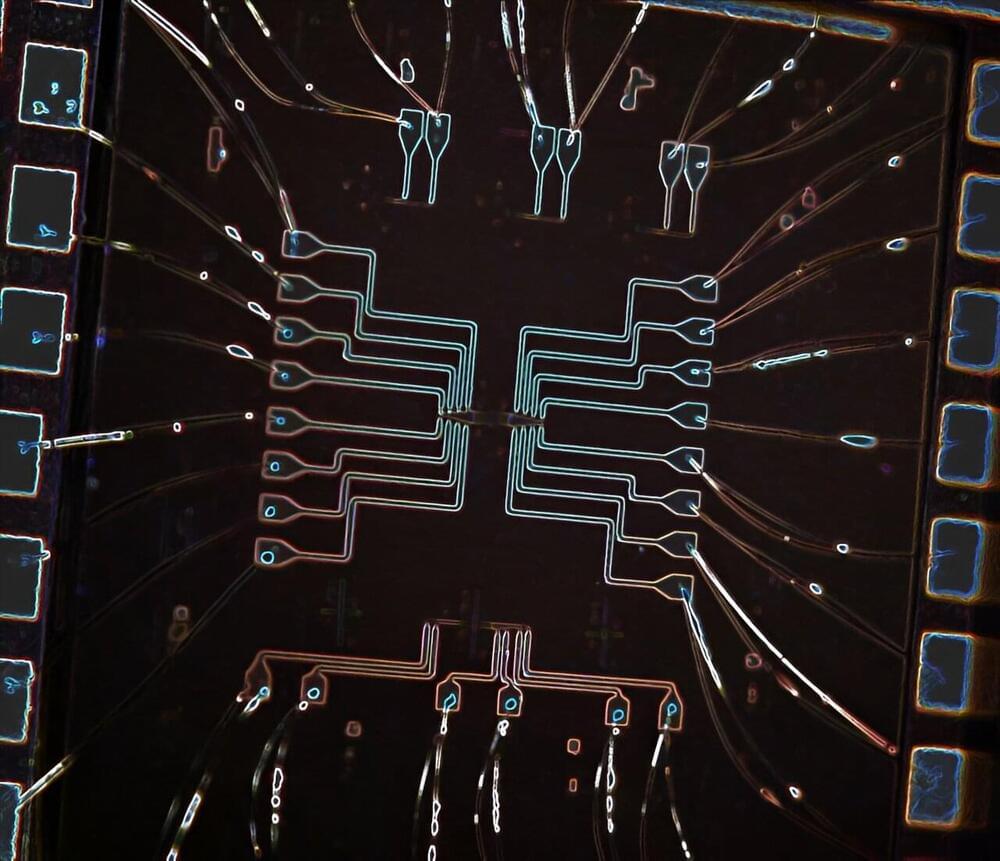

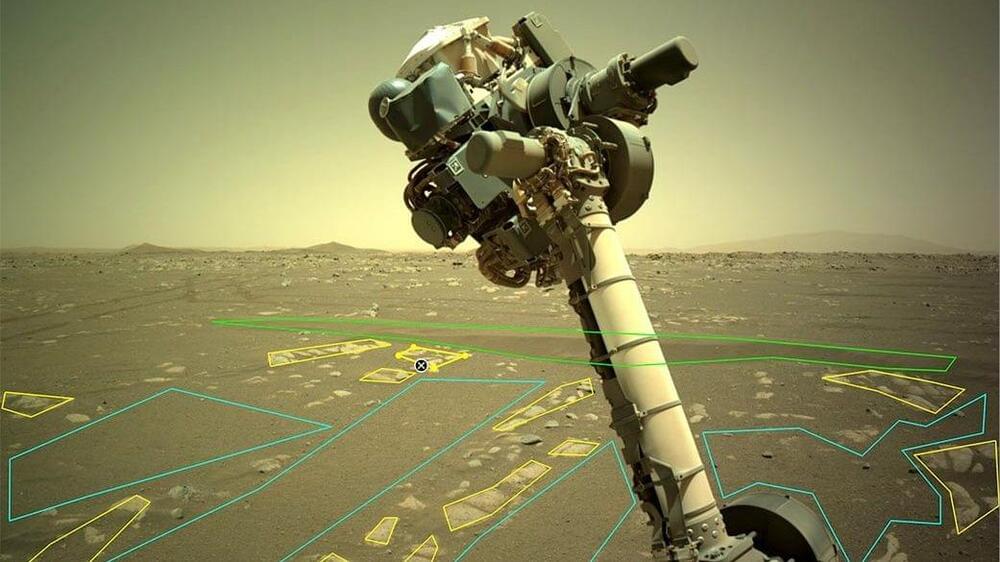

In an arXiv research paper titled “Language Modeling Is Compression,” researchers detail their discovery that the DeepMind large language model (LLM) called Chinchilla 70B can perform lossless compression on image patches from the ImageNet image database to 43.4 percent of their original size, beating the PNG algorithm, which compressed the same data to 58.5 percent. For audio, Chinchilla compressed samples from the LibriSpeech audio data set to just 16.4 percent of their raw size, outdoing FLAC compression at 30.3 percent.

In these results, lower numbers mean more compression: each figure is the compressed size as a percentage of the original. Lossless compression means that no data is lost during the compression process. It stands in contrast to a lossy technique like JPEG, which discards some data and reconstructs it with approximations during decoding to significantly reduce file sizes.
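As a rough illustration of how such percentages are computed and what "lossless" guarantees, the sketch below uses Python's standard `zlib` module as a stand-in compressor. It is not the method used in the paper, and the helper name `compressed_percent` and the sample data are assumptions made for this example.

```python
import zlib

def compressed_percent(raw: bytes, level: int = 9) -> float:
    """Return compressed size as a percentage of the original size."""
    compressed = zlib.compress(raw, level)
    # Lossless: decompressing must give back exactly the original bytes.
    if zlib.decompress(compressed) != raw:
        raise ValueError("round trip did not reproduce the input")
    return 100.0 * len(compressed) / len(raw)

sample = b"the quick brown fox jumps over the lazy dog. " * 200
print(f"{compressed_percent(sample):.1f} percent of original size")
```

A lossy codec would fail the round-trip check above, since it returns only an approximation of the input in exchange for a smaller file.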