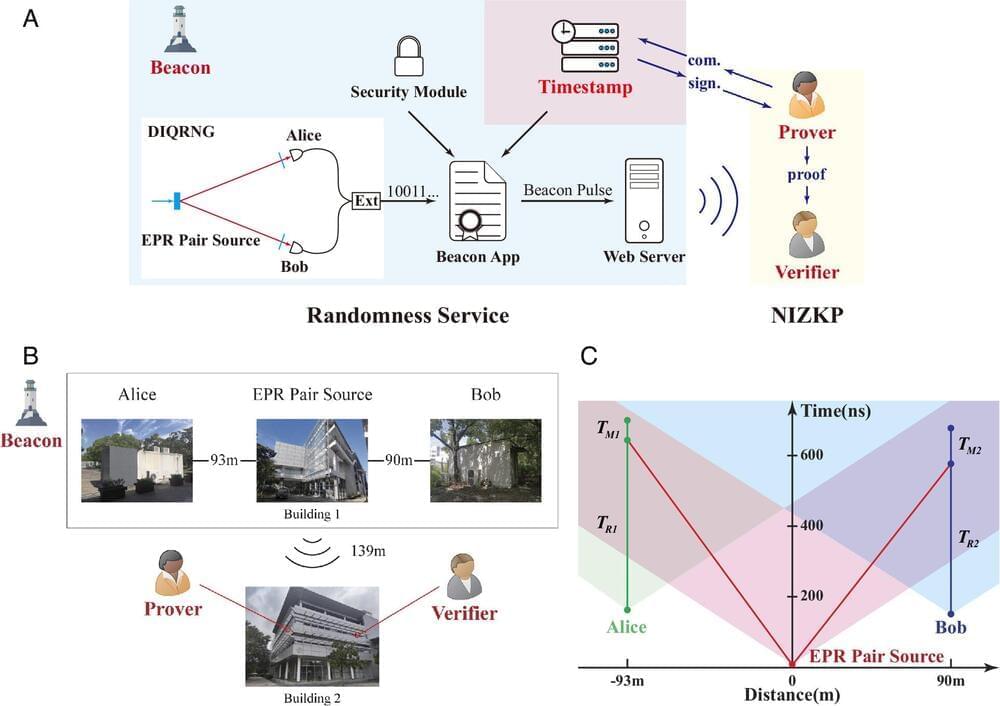

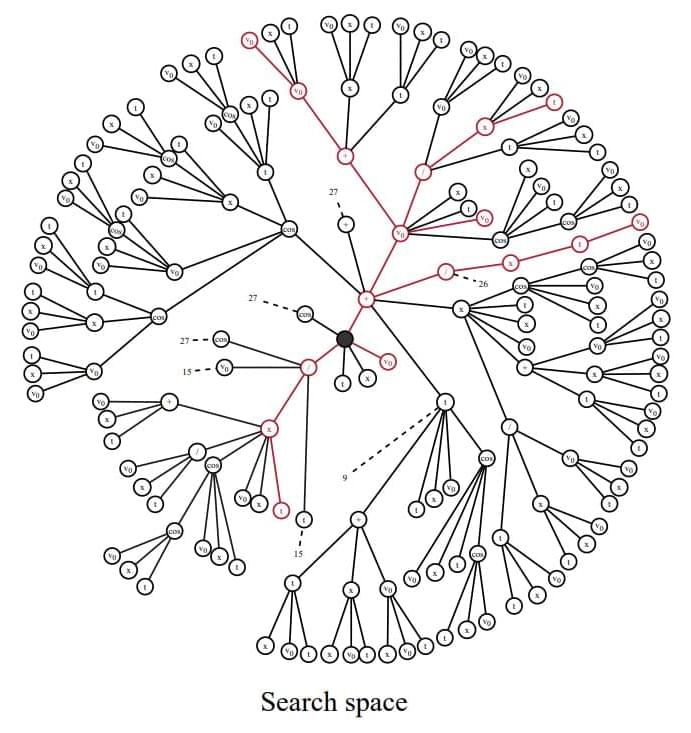

Zero-knowledge proof (ZKP) is a cryptographic tool that lets mutually untrusting parties verify that a statement is valid without disclosing any additional information. Non-interactive zero-knowledge proof (NIZKP) is a variant of ZKP that does not require multiple rounds of information exchange. As a result, NIZKP is widely used in digital signatures, blockchain, and identity authentication.

Because true random number generators are difficult to implement, deterministic pseudorandom number algorithms are often used as a substitute. This substitution introduces potential security vulnerabilities, since the output of a deterministic algorithm can be predicted by anyone who learns its internal state. Obtaining true random numbers has therefore become key to improving the security of NIZKP.
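To make the concern about deterministic generators concrete, the following is a minimal Python sketch, not the research team's method. The function name `challenge_bytes` and the seed values are hypothetical, chosen only for illustration. It uses Python's standard `random` module, a general-purpose pseudorandom generator that is not intended for cryptographic use, to show that anyone who knows the seed can reproduce the "random" output exactly.

```python
import random


def challenge_bytes(seed: int, n_bytes: int = 16) -> bytes:
    """Derive 'random' bytes from a seeded deterministic PRNG (illustrative only)."""
    rng = random.Random(seed)  # the seed fully determines every later output
    return bytes(rng.getrandbits(8) for _ in range(n_bytes))


# Hypothetical scenario: an honest party and an attacker who has learned the seed.
honest = challenge_bytes(seed=2024)
attacker = challenge_bytes(seed=2024)

# Same seed, same output: the attacker can predict the value in advance.
print(honest == attacker)
```

A true random source has no seed to leak, which is why the entropy source matters for the security of the overall scheme.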

In a study published in PNAS, a research team led by Prof. Pan Jianwei and Prof. Zhang Qiang from the University of Science and Technology of China (USTC) of the Chinese Academy of Sciences, together with their collaborators, realized a set of public random number beacon services that use device-independent quantum random number generators as entropy sources and post-quantum cryptography for identity authentication.