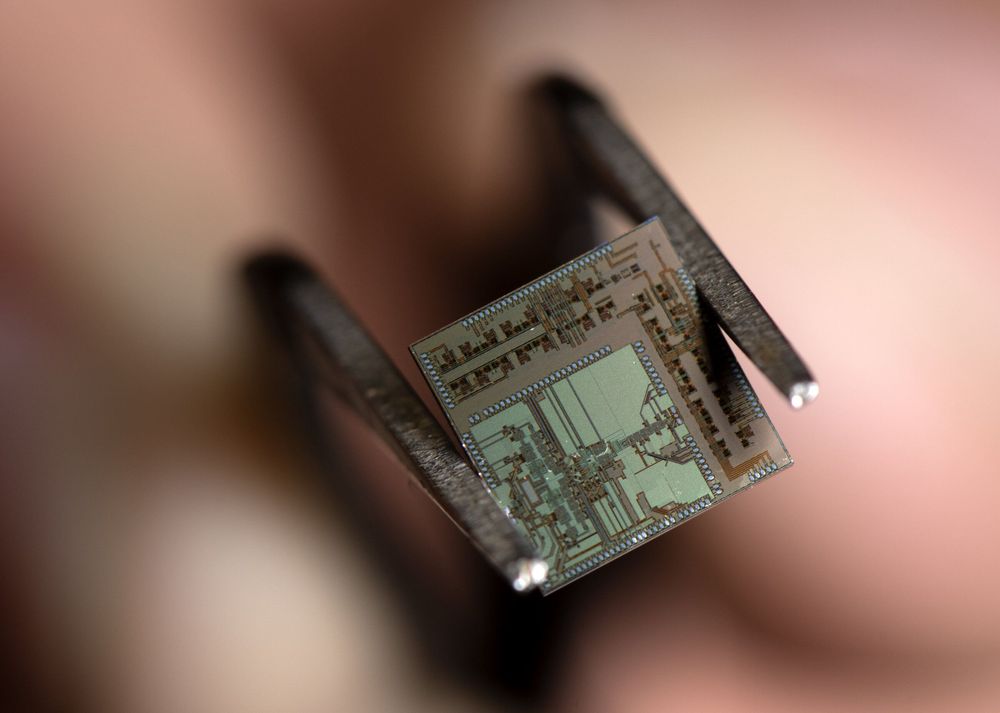

A new wireless transceiver invented by electrical engineers at the University of California, Irvine, boosts radio frequencies into 100-gigahertz territory, four times the speed of the upcoming 5G, or fifth-generation, wireless communications standard.

Labeled an “end-to-end transmitter-receiver” by its creators in UCI’s Nanoscale Communication Integrated Circuits Labs, the 4.4-millimeter-square silicon chip is capable of processing digital signals significantly faster and more energy-efficiently because of its unique digital-analog architecture. The team’s innovation is outlined in a paper published recently in the IEEE Journal of Solid-State Circuits.

“We call our chip ‘beyond 5G’ because the combined speed and data rate that we can achieve is two orders of magnitude higher than the capability of the new wireless standard,” said senior author Payam Heydari, NCIC Labs director and UCI professor of electrical engineering & computer science. “In addition, operating in a higher frequency means that you and I and everyone else can be given a bigger chunk of the bandwidth offered by carriers.”