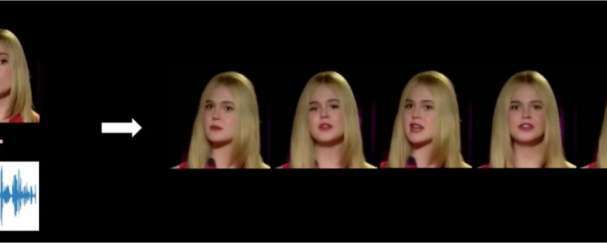

In recent years, computer-generated animations of animals and humans have become increasingly detailed and realistic. Nonetheless, producing a convincing animation of a character’s face as it talks remains a key challenge, because it typically requires combining several different audio and video elements.

A team of computer scientists at TCS Research in India has recently created a new model that can produce highly realistic talking face animations that integrate audio recordings with a character’s head motions. This model, introduced in a paper presented at ICVGIP 2021, the twelfth Indian Conference on Computer Vision, Graphics and Image Processing, could be used to create more convincing virtual avatars, digital assistants, and animated movies.

“For a pleasant viewing experience, the perception of realism is of utmost importance, and despite recent research advances, the generation of a realistic talking face remains a challenging research problem,” Brojeshwar Bhowmick, one of the researchers who carried out the study, told TechXplore. “Alongside accurate lip synchronization, realistic talking face animation requires other attributes of realism such as natural eye blinks, head motions and preserving identity information of arbitrary target faces.”