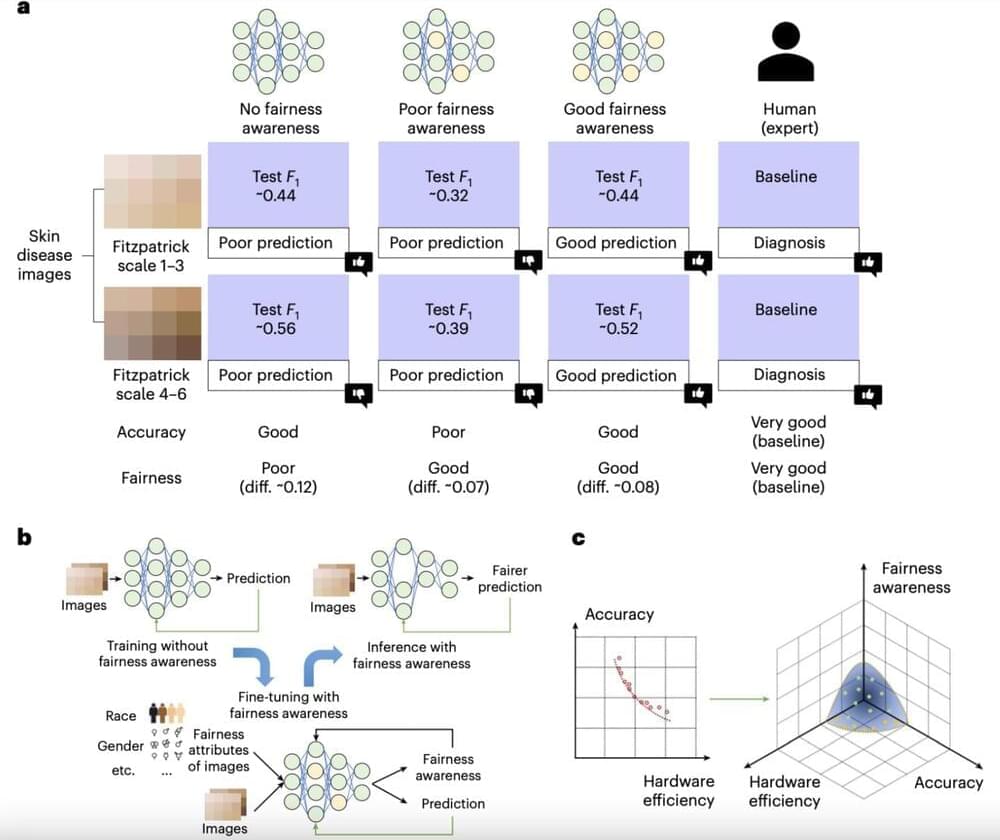

Over the past couple of decades, computer scientists have developed a wide range of deep neural networks (DNNs) designed to tackle various real-world tasks. While some of these models have proved highly effective, some studies found that they can be unfair: their performance may differ across groups of people, depending on the data they were trained on and even on the hardware platforms they are deployed on.

For instance, some studies showed that commercially available deep learning–based facial recognition tools were significantly better at recognizing the features of fair-skinned individuals than those of dark-skinned individuals. These observed variations in AI performance, largely due to disparities in the available training data, have inspired efforts to improve the fairness of existing models.

Researchers at the University of Notre Dame recently set out to investigate how hardware systems can contribute to the fairness of AI. Their paper, published in Nature Electronics, identifies ways in which emerging hardware designs, such as computing-in-memory (CiM) devices, can affect the fairness of DNNs.