Google presents Reuse Your Rewards.

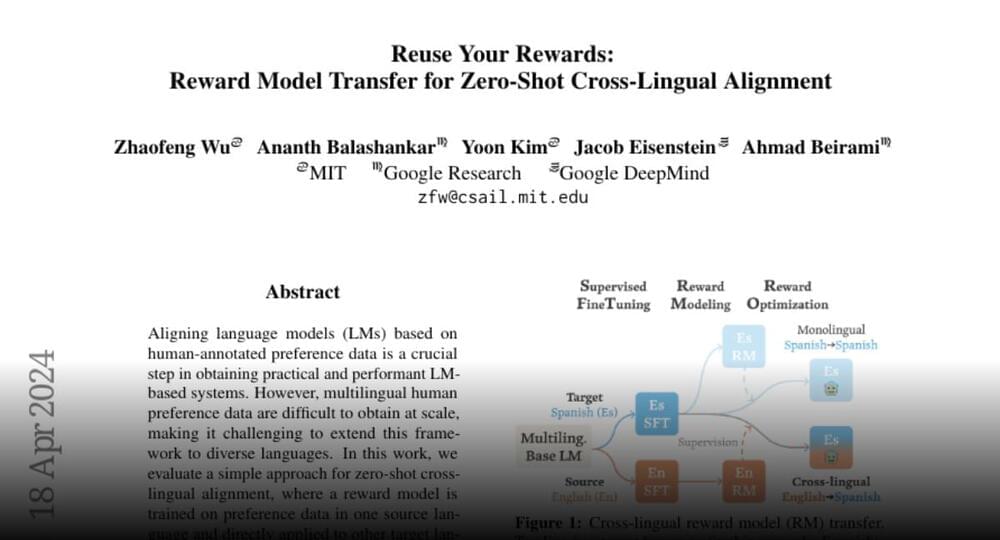

Reward model transfer for zero-shot cross-lingual alignment.

Aligning language models (LMs) based on human-annotated preference data is a crucial step in obtaining practical and performant LM-based systems.

Join the discussion on this paper page.