Sep 7, 2019

AI learns the language of chemistry to predict how to make medicines

Posted by Saúl Morales Rodriguéz in categories: biotech/medical, chemistry, information science, robotics/AI

Researchers have designed a machine learning algorithm that predicts the outcome of chemical reactions with much higher accuracy than trained chemists and suggests ways to make complex molecules, removing a significant hurdle in drug discovery.

University of Cambridge researchers have shown that an algorithm can predict the outcomes of complex chemical reactions with over 90% accuracy, outperforming trained chemists. The algorithm also shows chemists how to make target compounds, providing the chemical “map” to the desired destination. The results are reported in two studies in the journals ACS Central Science and Chemical Communications.

A central challenge in drug discovery and materials science is finding ways to make complicated organic molecules by chemically joining together simpler building blocks. The problem is that those building blocks often react in unexpected ways.

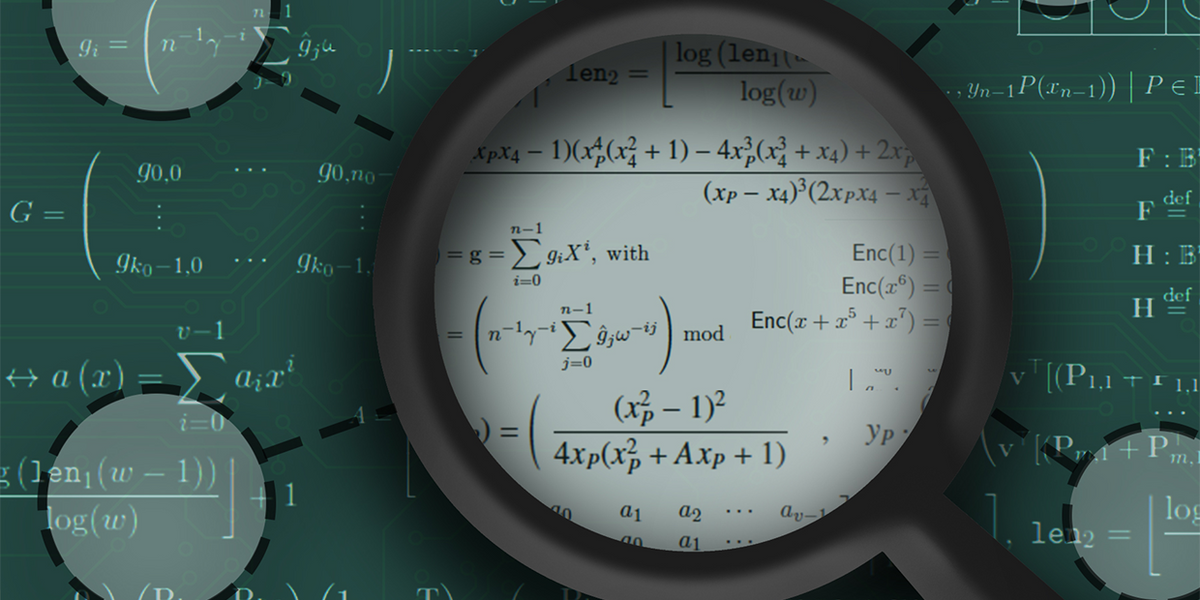

When practical quantum computing finally arrives, it will have the power to crack the standard digital codes that safeguard online privacy and security for governments, corporations, and virtually everyone who uses the Internet. That’s why a U.S. government agency has challenged researchers to develop a new generation of quantum-resistant cryptographic algorithms.

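Einstein's relation E = mc² says that the mass of a particle determines its energy at rest. For an electron, this rest energy is known to be 0.511 MeV. With only this much energy, the electron cannot "pack" itself into a size smaller than roughly the size of a nucleus, because the electrostatic self-energy of a charge confined to a radius r, about e²/(4πε₀r), grows as r shrinks and would exceed the rest energy. The radius where the two are equal is the classical electron radius, about 2.8 × 10⁻¹⁵ m. The classical theory of electromagnetism is not a consistent theory below this distance. Yet the electron is known to be at least ten thousand times smaller than that.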
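The size argument above can be checked with a few lines of arithmetic. This is a minimal sketch, assuming the standard identity e²/(4πε₀) = αħc. The rounded constant values and the helper name `classical_radius_fm` are my own illustrative choices and do not come from the original text. The result is an order-of-magnitude estimate, because the exact numerical factor depends on how the charge is assumed to be distributed.

```python
# Rounded physical constants (illustrative values, not taken from the original post).
HBAR_C_MEV_FM = 197.3269804            # ħc in MeV·fm
ALPHA = 1 / 137.035999084              # fine-structure constant (dimensionless)
ELECTRON_REST_ENERGY_MEV = 0.51099895  # m_e c² in MeV, the "0.511 MeV" in the text


def classical_radius_fm(rest_energy_mev: float) -> float:
    """Hypothetical helper: radius r at which the Coulomb self-energy
    e²/(4πε₀ r) equals the given rest energy.

    Uses e²/(4πε₀) = α·ħc, so r = α·ħc / (m c²), returned in femtometres.
    """
    coulomb_coupling_mev_fm = ALPHA * HBAR_C_MEV_FM  # about 1.44 MeV·fm
    return coulomb_coupling_mev_fm / rest_energy_mev


r_e_fm = classical_radius_fm(ELECTRON_REST_ENERGY_MEV)
r_e_m = r_e_fm * 1e-15          # 1 fm = 1e-15 m
upper_bound_m = r_e_m / 1e4     # "at least ten thousand times smaller"

print(f"classical electron radius ≈ {r_e_fm:.3f} fm ({r_e_m:.2e} m)")
print(f"implied upper bound on electron size ≲ {upper_bound_m:.1e} m")
```

Running it gives a classical radius of about 2.818 fm, which is comparable to nuclear dimensions. Dividing by ten thousand then puts the electron below roughly 3 × 10⁻¹⁹ m.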