Oct 30, 2021

Precision Medicine Data Dive Shows “Water Pill” Could Potentially Be Repurposed To Treat Alzheimer’s

Posted by Genevieve Klien in categories: biotech/medical, genetics, information science, life extension, neuroscience

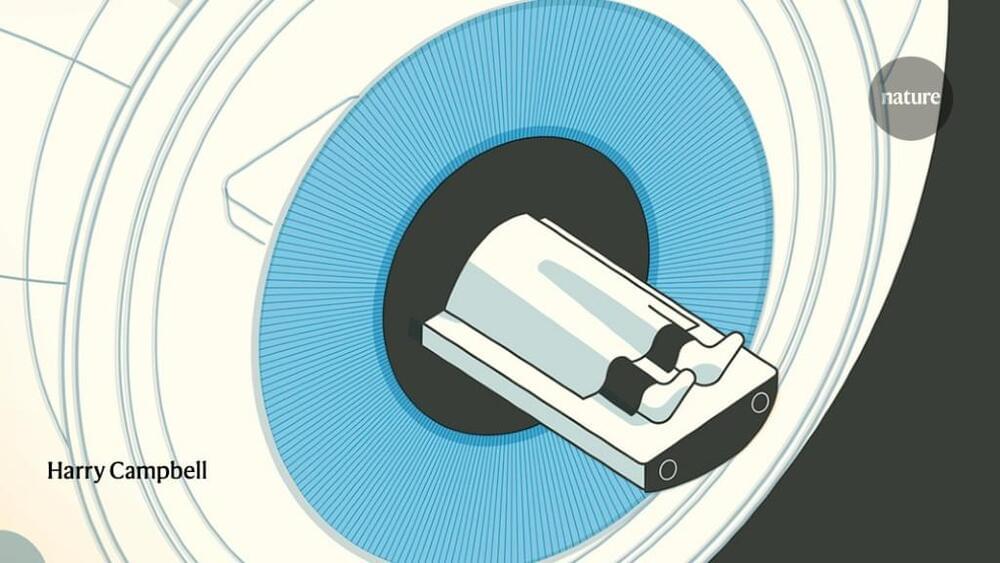

A commonly available oral diuretic pill approved by the U.S. Food and Drug Administration could be a candidate treatment for Alzheimer’s disease in people at genetic risk, according to findings published in Nature Aging. The research included an analysis showing that people who took bumetanide, a commonly used and potent diuretic, had a significantly lower prevalence of Alzheimer’s disease than those not taking the drug. The study, funded by the National Institute on Aging (NIA), part of the National Institutes of Health, advances a precision medicine approach for individuals at greater risk of the disease because of their genetic makeup.

The research team analyzed databases of brain tissue samples and FDA-approved drugs, performed experiments in mice and human cells, and examined human population studies. Together, these approaches identified bumetanide as a leading candidate for repurposing to treat Alzheimer’s.

“Though further tests and clinical trials are needed, this research underscores the value of big data-driven tactics combined with more traditional scientific approaches to identify existing FDA-approved drugs as candidates for drug repurposing to treat Alzheimer’s disease,” said NIA Director Richard J. Hodes, M.D.