DALL-E 2 transformed the world of art in 2022.

DALL-E has been around since OpenAI unveiled it in early 2021, but its successor, DALL-E 2, was launched this year.

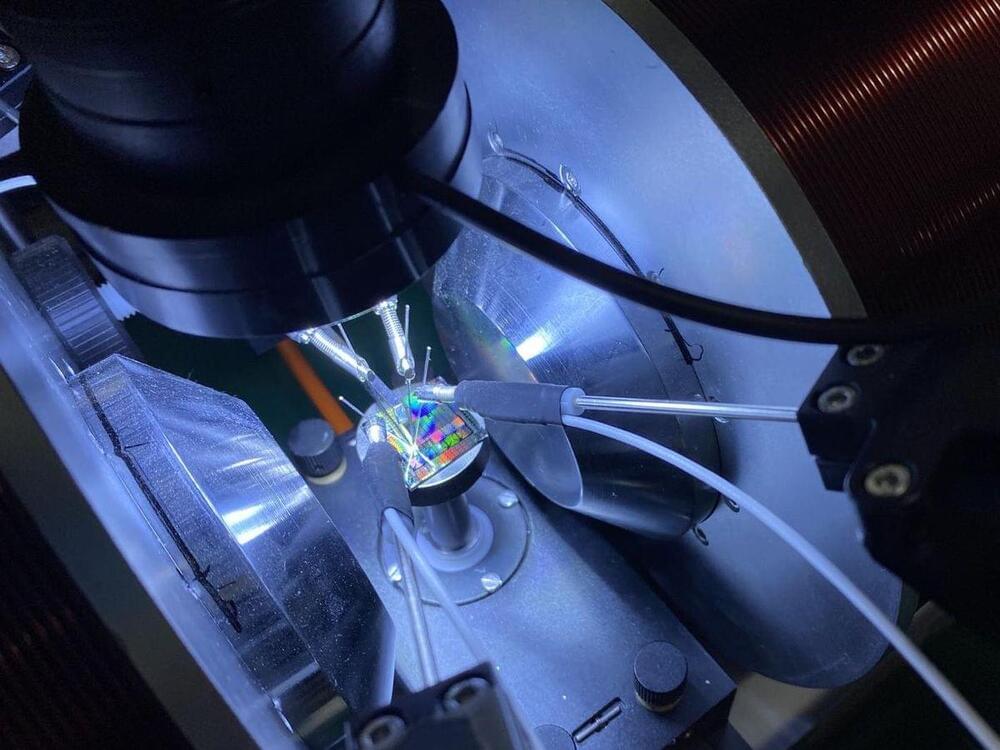

Ibrahim Can/Interesting Engineering.

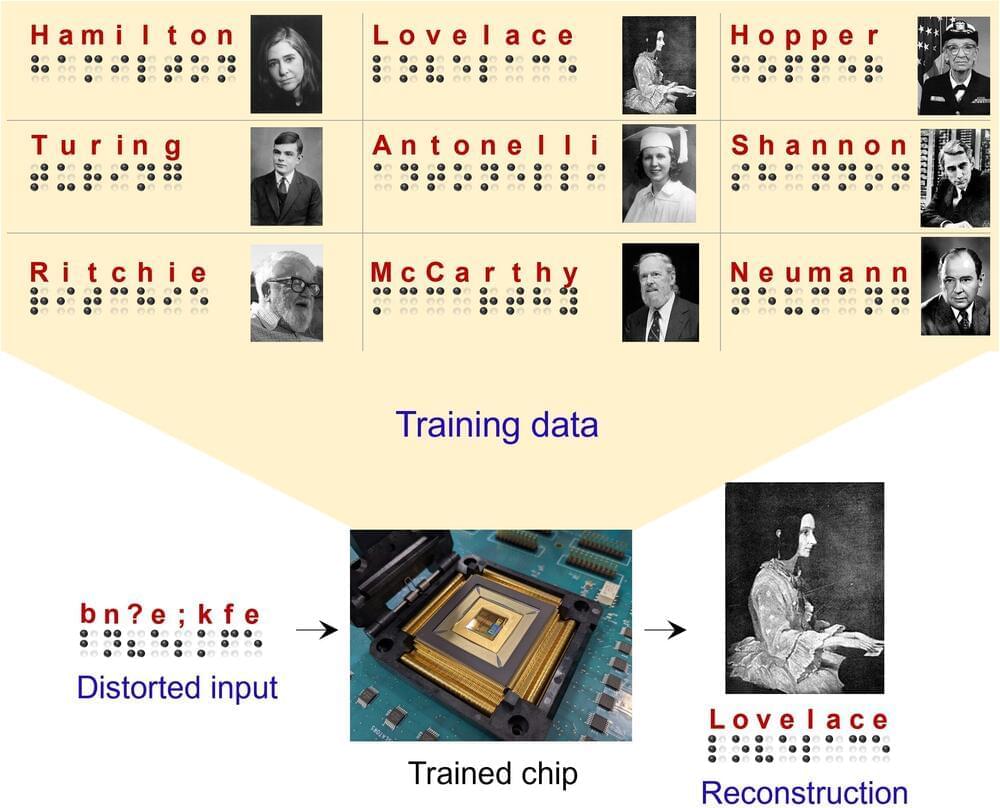

DALL-E and DALL-E 2 are machine-learning models created by OpenAI to generate images from natural-language descriptions, known as prompts. Given nothing more than a description of a scene, the system can produce a realistic image of it. At its core, DALL-E is a neural network that turns short phrases typed by the user into matching pictures. It "learns" how words relate to visual content from large training datasets of images paired with text descriptions, assembled by its developers.
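To make the idea of a prompt concrete, here is a minimal sketch of what a DALL-E 2 request might look like. It assumes OpenAI's public Images API, where a JSON body with `model`, `prompt`, `n` and `size` fields is sent to the `/v1/images/generations` endpoint. The helper `build_image_request` is hypothetical, and the size list, the 1,000-character prompt limit and the 1 to 10 image count are assumptions taken from the public API documentation, which may change. The sketch only builds and checks the request body; it does not contact OpenAI.

```python
import json

# Image sizes accepted for DALL-E 2 by OpenAI's Images API (assumption: check current docs).
DALLE2_SIZES = {"256x256", "512x512", "1024x1024"}
# Prompt length limit for dall-e-2 (assumption: check current docs).
MAX_PROMPT_CHARS = 1000


def build_image_request(prompt: str, n: int = 1, size: str = "1024x1024") -> dict:
    """Hypothetical helper: return the JSON body for an image-generation request.

    The body is only built and validated here; nothing is sent over the network.
    """
    prompt = prompt.strip()  # surrounding whitespace carries no meaning in a prompt
    if not prompt:
        raise ValueError("prompt must not be empty")
    if len(prompt) > MAX_PROMPT_CHARS:
        raise ValueError(f"prompt exceeds {MAX_PROMPT_CHARS} characters")
    if size not in DALLE2_SIZES:
        raise ValueError(f"unsupported size {size!r}")
    if not 1 <= n <= 10:
        raise ValueError("n must be between 1 and 10")
    return {"model": "dall-e-2", "prompt": prompt, "n": n, "size": size}


if __name__ == "__main__":
    # The prompt is the only creative input: a plain-English description of the scene.
    body = build_image_request("an astronaut riding a horse in a photorealistic style")
    print(json.dumps(body))
```

In practice the returned body would be sent with an API key, and the service would answer with links to the generated images. Everything the model "sees" about the desired picture is in the `prompt` string.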