Apr 27, 2023

What AI Technology Is Doing for Longevity Now

Posted by Shubham Ghosh Roy in categories: biotech/medical, business, cybercrime/malcode, life extension, robotics/AI

In March 2023, MIT Technology Review revealed that Sam Altman, the CEO of OpenAI (the company behind ChatGPT), was the previously unnamed investor who had put $180 million into the stealth startup Retro Biosciences, a biotech company with the ambition of "adding 10 years to the human lifespan." The investment makes Altman the latest tech entrepreneur to show an interest in longevity science, and it forges a new link between the field and AI technology.

According to reports from February 2023, AI continues to gain traction in healthcare. The market for AI in healthcare is currently estimated at $14.6 billion (USD), with a compound annual growth rate (CAGR) of 47.6%, and its solutions span fields such as patient data and risk analysis, precision medicine, cybersecurity, lifestyle management, and drug discovery.
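To make the 47.6% CAGR figure more concrete, here is a minimal Python sketch of how compound annual growth works. It assumes the rate holds constant every year, which real markets rarely do, so any values it produces are illustrative only and are not forecasts from the cited reports. The function names `project_market_size` and `implied_cagr` are hypothetical helpers written for this example.

```python
def project_market_size(current_value: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate.

    Assumption: the growth rate is identical every year (a simplification).
    """
    if years < 0:
        raise ValueError("years must be non-negative")
    return current_value * (1 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Invert the compounding formula to recover the constant yearly rate."""
    if years <= 0 or start_value <= 0:
        raise ValueError("years and start_value must be positive")
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    base = 14.6   # USD billions, the estimate quoted in the article
    rate = 0.476  # 47.6% CAGR, as quoted
    for year in range(1, 6):
        # Illustrative only: assumes the quoted rate never changes.
        value = project_market_size(base, rate, year)
        print(f"Year {year}: ~${value:.1f}B")
```

Because growth compounds, each year's increase is applied to the previous year's larger total rather than to the original $14.6 billion, which is why a high CAGR implies rapid expansion if it were sustained.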

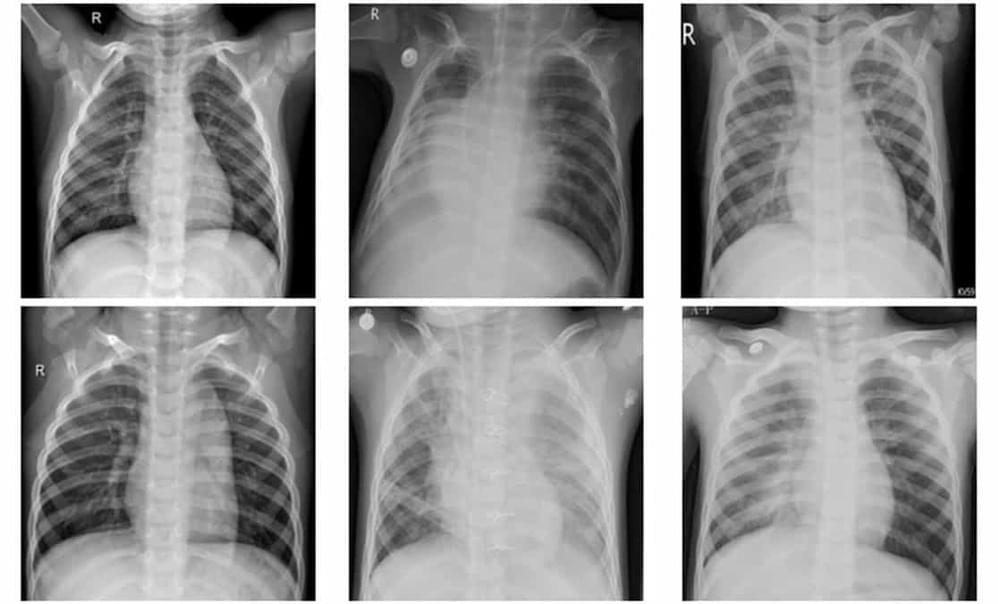

The growing convergence of AI technology and longevity science is driving advances in the sector, with established businesses, start-ups, and researchers all putting the technology to use. Most recently, scientists explored how ChatGPT, an AI-based language model, was able to predict Alzheimer's in 80% of cases by analyzing speech. However, this is not the only application.