Nov 21, 2023

A scientist explains an approaching milestone marking the arrival of quantum computers

Posted by Paul Battista in categories: computing, encryption, information science, quantum physics

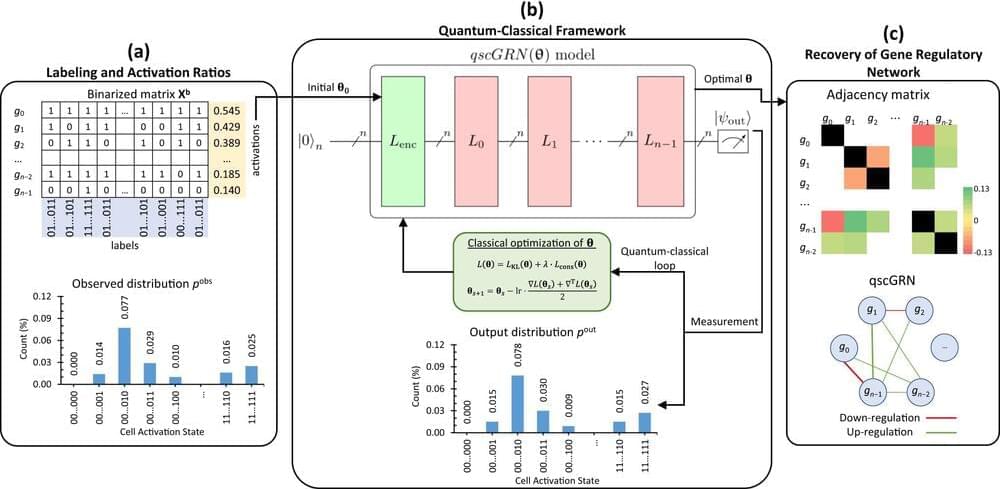

Quantum advantage is the milestone the field of quantum computing is fervently working toward: the point at which a quantum computer can solve problems that are beyond the reach of the most powerful non-quantum, or classical, computers.

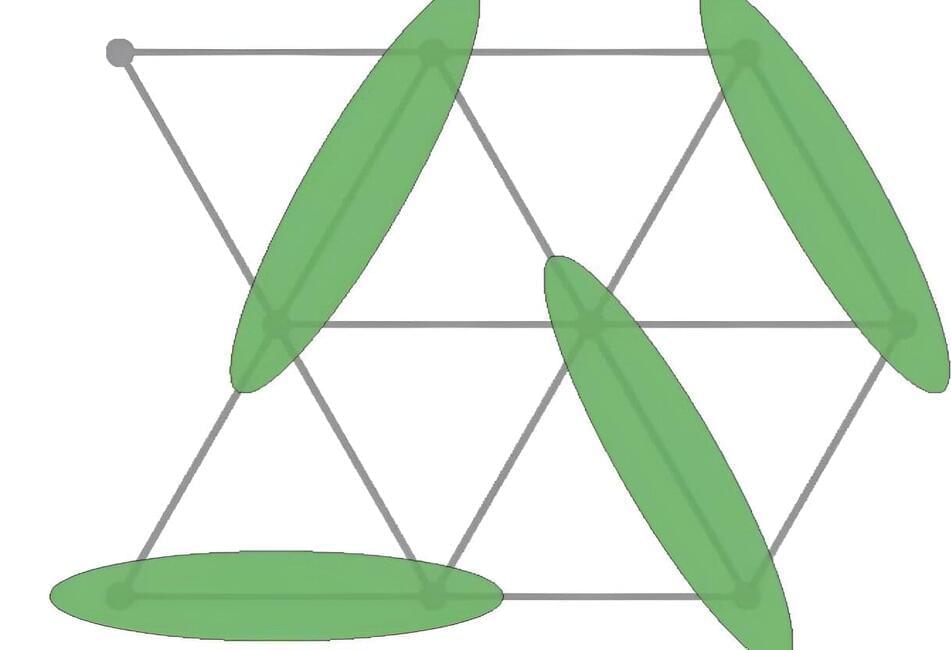

Quantum refers to the scale of atoms and molecules, where the laws of physics as we experience them break down and a different, counterintuitive set of laws applies. Quantum computers take advantage of these strange behaviors to solve problems.