From Microsoft.

Towards Modular LLMs by Building and Reusing a Library of LoRAs https://huggingface.co/papers/2405.

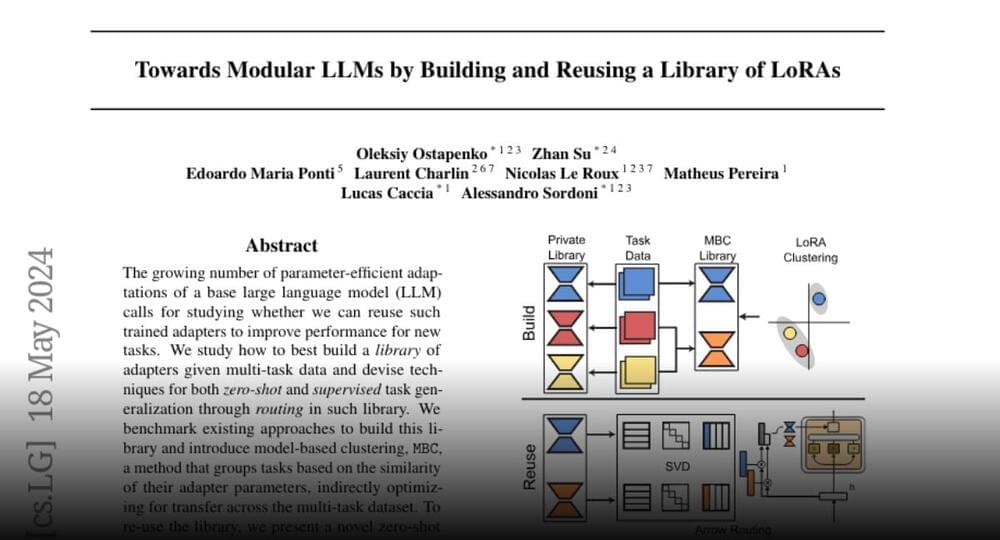

The growing number of parameter-efficient adaptations of a base large language model (LLM) calls for studying whether we can reuse such trained adapters to improve performance for…
