Using artificial intelligence, physicists have compressed a daunting quantum problem that until now required 100,000 equations into a bite-size task of as few as four equations, all without sacrificing accuracy. The work, published in the September 23 issue of Physical Review Letters, could revolutionize how scientists investigate systems containing many interacting electrons. Moreover, if it scales to other problems, the approach could aid in the design of materials with sought-after properties such as superconductivity or utility for clean energy generation.

“We start with this huge object of all these coupled-together differential equations; then we’re using machine learning to turn it into something so small you can count it on your fingers,” says study lead author Domenico Di Sante, a visiting research fellow at the Flatiron Institute’s Center for Computational Quantum Physics (CCQ) in New York City and an assistant professor at the University of Bologna in Italy.

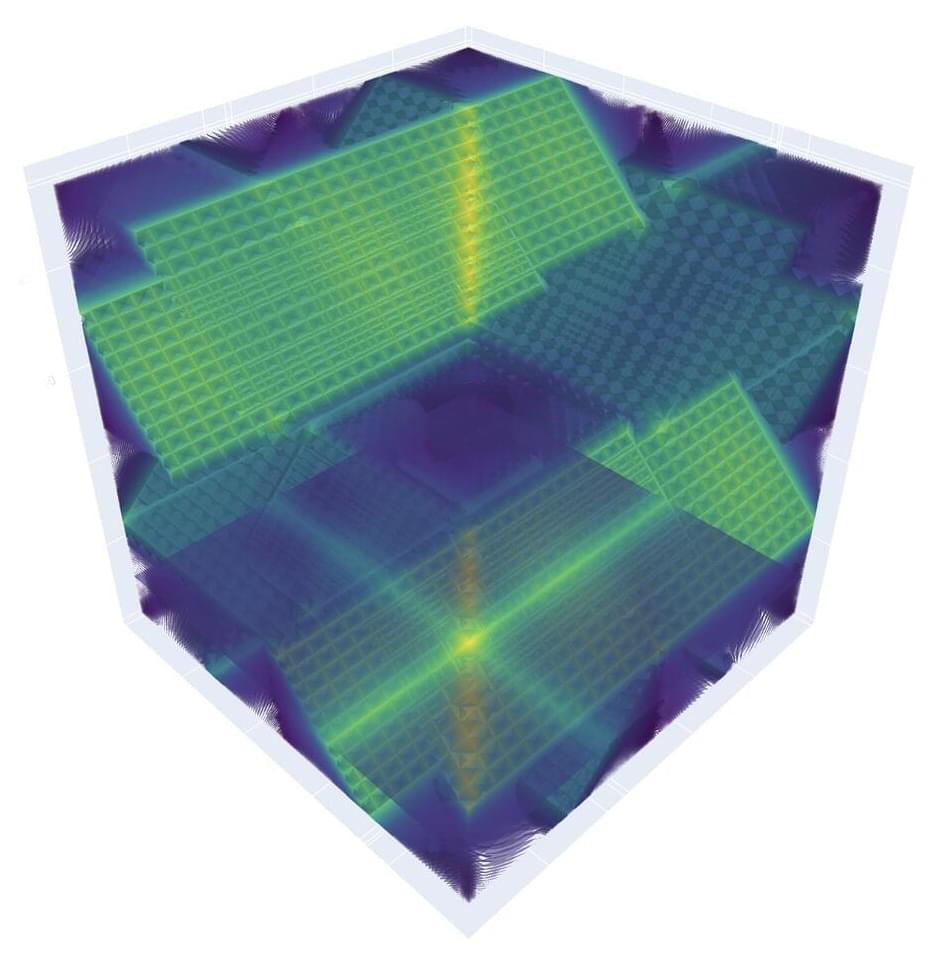

The formidable problem concerns how electrons behave as they move on a gridlike lattice. When two electrons occupy the same lattice site, they interact. This setup, known as the Hubbard model, is an idealization of several important classes of materials and enables scientists to learn how electron behavior gives rise to sought-after phases of matter, such as superconductivity, in which electrons flow through a material without resistance. The model also serves as a testing ground for new methods before they’re unleashed on more complex quantum systems.
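To make the on-site interaction concrete, here is a minimal Python sketch. It is an illustration, not the study's method. The symbols `U` (the energy cost when two electrons share a site) and `t` (the hopping amplitude between neighboring sites) are the conventional textbook names and do not appear in the article; the function names are hypothetical. The first function simply counts doubly occupied sites. The second evaluates the well-known closed-form ground-state energy of the smallest nontrivial case, two sites holding two electrons.

```python
import math


def interaction_energy(n_up, n_down, U):
    """Interaction energy of one fixed arrangement of electrons.

    n_up[i] and n_down[i] are 0 or 1: whether site i holds a spin-up
    or spin-down electron. Only sites holding both contribute U.
    """
    return U * sum(u * d for u, d in zip(n_up, n_down))


def two_site_ground_energy(t, U):
    """Exact ground-state energy of the two-site Hubbard model
    with two electrons (textbook result, assumed notation t and U)."""
    return U / 2 - math.sqrt((U / 2) ** 2 + 4 * t ** 2)


if __name__ == "__main__":
    # Four sites; sites 0 and 3 hold both an up and a down electron.
    print(interaction_energy([1, 0, 1, 1], [1, 1, 0, 1], U=4.0))

    # Without interaction, both electrons sit in the lowest orbital: -2t.
    print(two_site_ground_energy(t=1.0, U=0.0))

    # Strong repulsion suppresses double occupancy; energy approaches -4t^2/U.
    print(two_site_ground_energy(t=1.0, U=100.0))
```

Even this two-site case shows the competition at the heart of the model: hopping lowers the energy by spreading electrons out, while the repulsion penalizes any pair that lands on the same site. On a large lattice the number of possible arrangements grows exponentially, which is why approximate methods are needed.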