Powerful new artificial intelligence models sometimes, quite famously, get things wrong—whether hallucinating false information or memorizing others’ work and offering it up as their own. To address the latter, researchers led by a team at The University of Texas at Austin have developed a framework to train AI models on images corrupted beyond recognition.

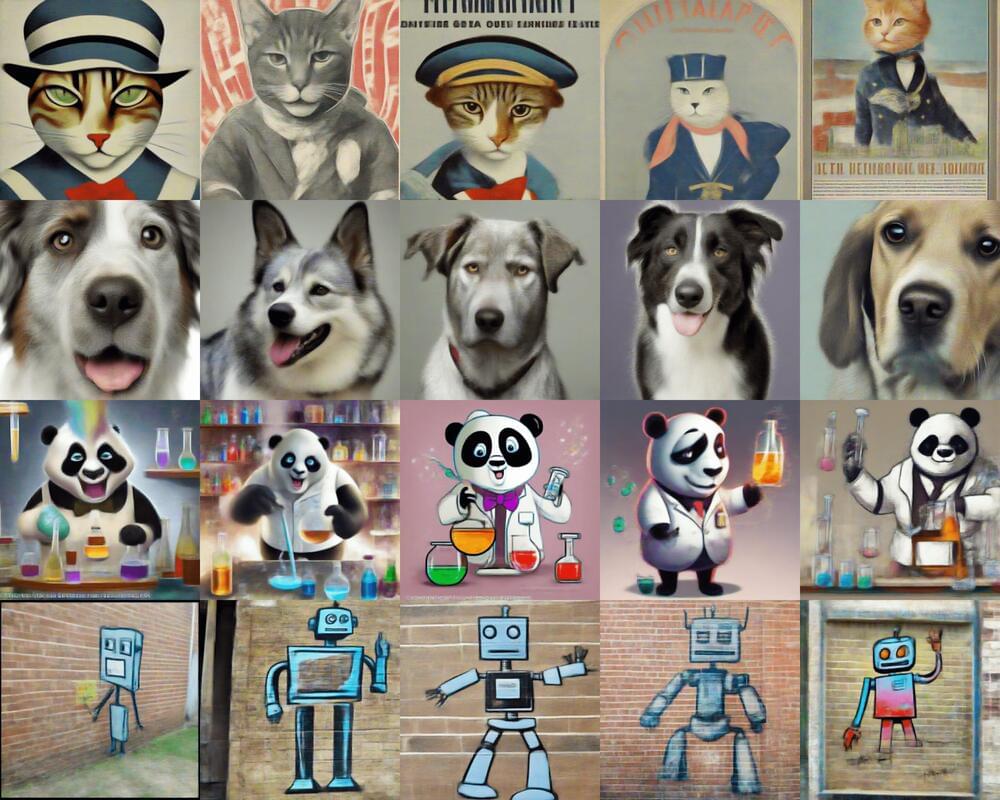

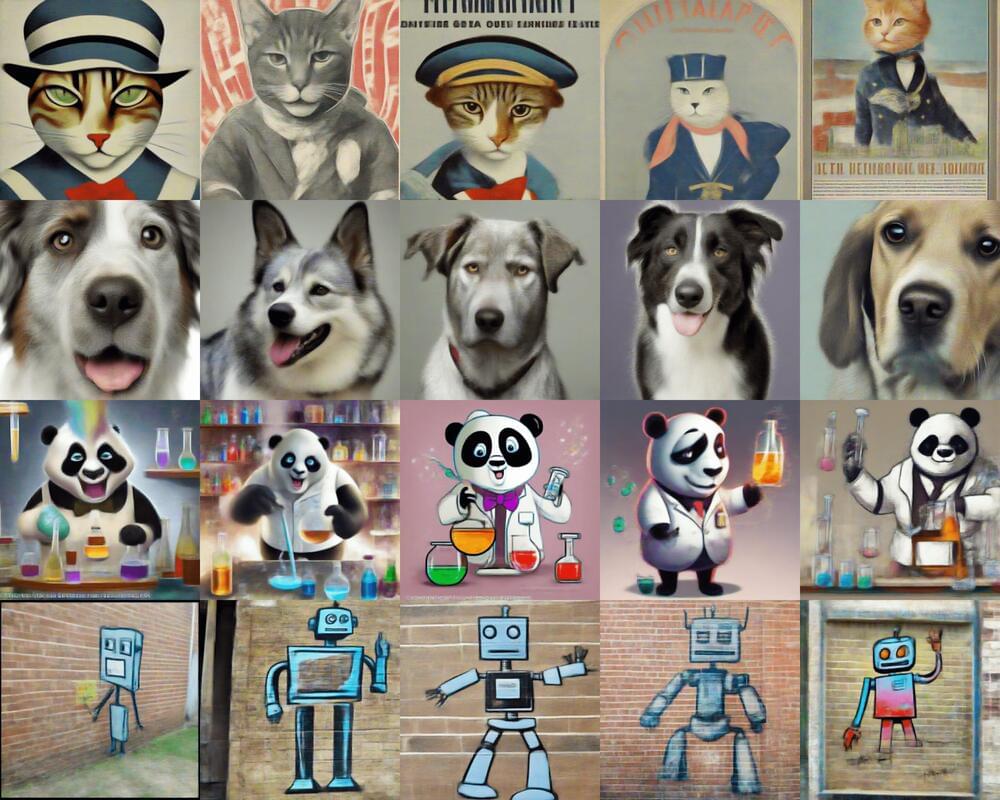

DALL-E, Midjourney and Stable Diffusion are among the text-to-image diffusion generative AI models that can turn arbitrary user text into highly realistic images. All three are now facing lawsuits from artists who allege that generated samples replicate their work. Because the models are trained on billions of image-text pairs that are not publicly available, they may draw on copyrighted images and then replicate them in their output.

The newly proposed framework, called Ambient Diffusion, gets around this problem by training diffusion models solely on corrupted image data. Early results suggest that models trained this way can still generate high-quality samples without ever seeing anything recognizable as the original source images.
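To make "corrupted beyond recognition" concrete, here is a minimal sketch of one possible corruption: randomly masking out most of an image's pixels before a model ever sees it. The article does not specify the corruption used, so random pixel masking is an assumption made for illustration, and the names `corrupt_image` and `mask_fraction` are hypothetical rather than taken from the researchers' code.

```python
import numpy as np

def corrupt_image(image, mask_fraction=0.9, seed=None):
    """Zero out a random fraction of pixels in an (H, W, C) image.

    Illustrative assumption only: the source article does not say which
    corruption Ambient Diffusion uses. Returns the corrupted image and
    the boolean mask of pixels that were kept.
    """
    rng = np.random.default_rng(seed)
    height, width = image.shape[:2]
    # True where a pixel survives; about (1 - mask_fraction) of pixels are kept.
    keep = rng.random((height, width)) >= mask_fraction
    # Broadcast the 2-D mask across the channel axis.
    corrupted = image * keep[..., None]
    return corrupted, keep

# Example: a training pipeline would store only `corrupted` (and `keep`),
# never the clean image.
clean = np.random.default_rng(0).random((64, 64, 3))
corrupted, keep = corrupt_image(clean, mask_fraction=0.9, seed=1)
```

In a setup like this, only the masked images would be passed to training, so the model would never have direct access to a complete original.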