May 21, 2016

Lethal Autonomous Weapons

Posted by Roman Mednitzer in categories: biotech/medical, computing, drones, engineering, geopolitics, robotics/AI, treaties

Biography:

Stuart Russell received his B.A. with first-class honours in physics from Oxford University in 1982 and his Ph.D. in computer science from Stanford in 1986. He then joined the faculty of the University of California at Berkeley, where he is Professor (and formerly Chair) of Electrical Engineering and Computer Sciences and holder of the Smith-Zadeh Chair in Engineering. He is also an Adjunct Professor of Neurological Surgery at UC San Francisco and Vice-Chair of the World Economic Forum’s Council on AI and Robotics. He has published over 150 papers on a wide range of topics in artificial intelligence including machine learning, probabilistic reasoning, knowledge representation, planning, real-time decision making, multitarget tracking, computer vision, computational physiology, and global seismic monitoring. His books include “The Use of Knowledge in Analogy and Induction”, “Do the Right Thing: Studies in Limited Rationality” (with Eric Wefald), and “Artificial Intelligence: A Modern Approach” (with Peter Norvig).

Abstract:

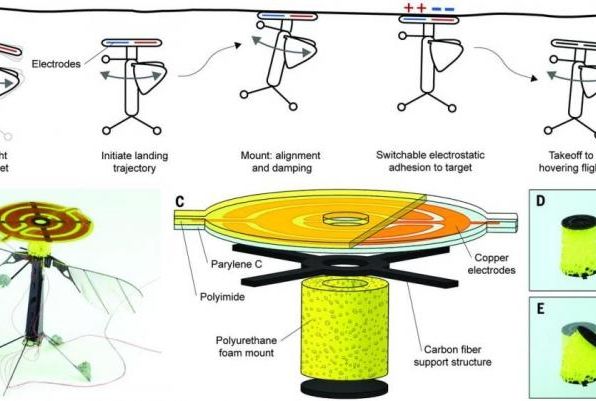

Autonomous weapons systems select and engage targets without human intervention; they become lethal when those targets include humans. Lethal autonomous weapons systems (LAWS) might include, for example, armed quadcopters that can search for and eliminate enemy combatants in a city, but do not include cruise missiles or remotely piloted drones for which humans make all targeting decisions. The artificial intelligence (AI) and robotics communities face an important ethical decision: whether to support or oppose the development of LAWS.