May 11, 2024

Scientists uncover quantum-inspired vulnerabilities in neural networks: the role of conjugate variables in system attacks

Posted by Dan Breeden in categories: mathematics, quantum physics, robotics/AI

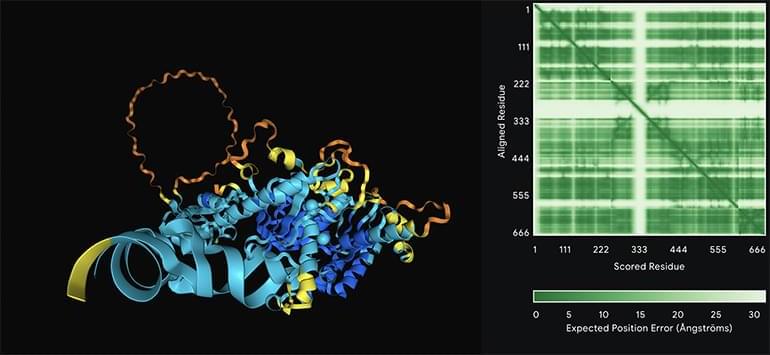

In a recent study merging the fields of quantum physics and computer science, Dr. Jun-Jie Zhang and Prof. Deyu Meng have explored the vulnerabilities of neural networks through the lens of the uncertainty principle in physics. Their work, published in National Science Review, draws a parallel between the susceptibility of neural networks to targeted attacks and the limits imposed by the uncertainty principle, a cornerstone of quantum mechanics which states that certain pairs of properties, such as a particle’s position and momentum, cannot both be measured with arbitrary precision at the same time.
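For reference, the textbook form of the uncertainty principle for a particle’s position $x$ and its conjugate momentum $p$ is shown below. Here $\sigma$ denotes a standard deviation and $\hbar$ the reduced Planck constant. The study borrows the idea of a conjugate pair for a network’s inputs; the specific inequality the authors derive for neural networks is not reproduced here.

$$
\sigma_x \, \sigma_p \;\ge\; \frac{\hbar}{2}
$$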

The researchers’ quantum-inspired analysis of neural network vulnerabilities suggests that adversarial attacks exploit the trade-off between the precision of input features and that of their computed gradients. “When considering the architecture of deep neural networks, which involve a loss function for learning, we can always define a conjugate variable for the inputs by determining the gradient of the loss function with respect to those inputs,” Dr. Jun-Jie Zhang, whose expertise lies in mathematical physics, states in the paper.
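The conjugate variable described in the quote is the input gradient $p = \partial \mathcal{L} / \partial x$. The sketch below is a minimal illustration, not the authors’ code. It assumes a one-layer logistic-regression “network” with hypothetical weights, computes $p$, and then feeds it to the Fast Gradient Sign Method (FGSM, introduced by Goodfellow et al.). FGSM is a standard attack that moves every input feature by a small step ε in the direction of sign(p). It is used here only as a familiar example; the article does not say which attacks the authors used. The names `conjugate_variable` and `fgsm` are illustrative.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def score(w, b, x):
    """Linear score w . x + b of a one-layer model (hypothetical stand-in for a network)."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def loss(w, b, x, y):
    """Logistic loss log(1 + exp(-y * score)) for a label y in {-1, +1}."""
    t = -y * score(w, b, x)
    # softplus(t) = log(1 + e^t), rewritten to avoid overflow for large |t|
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))


def conjugate_variable(w, b, x, y):
    """p = dL/dx: gradient of the loss with respect to the input features."""
    margin = y * score(w, b, x)
    # d/dx log(1 + exp(-margin)) = -sigmoid(-margin) * y * w
    return [-y * sigmoid(-margin) * wi for wi in w]


def sign(v):
    """Sign of v as -1, 0 or +1."""
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """FGSM: nudge every feature by eps along sign(p), the direction that raises the loss."""
    p = conjugate_variable(w, b, x, y)
    return [xi + eps * sign(pi) for xi, pi in zip(x, p)]


# Hypothetical weights and input, chosen only for illustration.
w, b = [2.0, -1.0, 0.5], 0.1
x, y = [0.3, 0.2, -0.4], 1

p = conjugate_variable(w, b, x, y)
x_adv = fgsm(w, b, x, y, eps=0.1)
print("p =", p)
print("clean loss:", loss(w, b, x, y), "adversarial loss:", loss(w, b, x_adv, y))
```

With these numbers the attack moves the input to approximately [0.2, 0.3, -0.5]. This pushes the model’s score from 0.3 to about -0.05 and flips its prediction, even though no feature changed by more than 0.1. The small, precise shift in the input is steered entirely by the gradient.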

This research is expected to prompt a reevaluation of the assumed robustness of neural networks and to encourage a deeper understanding of their limitations. By subjecting a neural network model to adversarial attacks, Dr. Zhang and Prof. Meng observed a trade-off between the model’s accuracy and its resilience.
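Resilience in experiments like this is commonly quantified as accuracy measured after an attack, reported alongside clean accuracy. The sketch below does that for a toy linear classifier under FGSM at several perturbation sizes ε. It is an assumption-laden illustration, not the authors’ experimental setup: the model, the synthetic data and the ε values are all hypothetical, and the helper `robust_accuracy` is an illustrative name.

```python
import math
import random


def predict(w, b, x):
    """Linear classifier: +1 if the score is positive, else -1."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


def input_gradient(w, b, x, y):
    """Gradient of the logistic loss log(1 + exp(-y * score)) with respect to x."""
    margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
    # sigmoid(-margin) written via tanh, which stays finite for large |margin|
    s = 0.5 * (1.0 - math.tanh(margin / 2.0))
    return [-y * s * wi for wi in w]


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Shift each feature by eps in the direction that increases the loss."""
    g = input_gradient(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, g)]


def robust_accuracy(w, b, data, eps):
    """Fraction of examples still classified correctly after an FGSM attack of size eps."""
    correct = sum(predict(w, b, fgsm(w, b, x, y, eps)) == y for x, y in data)
    return correct / len(data)


# Hypothetical setup: a fixed linear model and synthetic points labelled by that
# same model, so clean accuracy (eps = 0) is 1.0 by construction.
rng = random.Random(0)
w, b = [2.0, -1.0, 0.5], 0.1
points = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
data = [(x, predict(w, b, x)) for x in points]

epsilons = [0.0, 0.05, 0.1, 0.2, 0.5]
accuracies = [robust_accuracy(w, b, data, eps) for eps in epsilons]
for eps, acc in zip(epsilons, accuracies):
    print(f"eps={eps:.2f}  accuracy under attack={acc:.3f}")
```

For a linear model, FGSM lowers every example’s margin by exactly ε times the L1 norm of the weights, so accuracy under attack can only fall as ε grows. Deep networks have no such closed form, which is why their robustness is usually measured empirically in this way.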