May 18, 2023

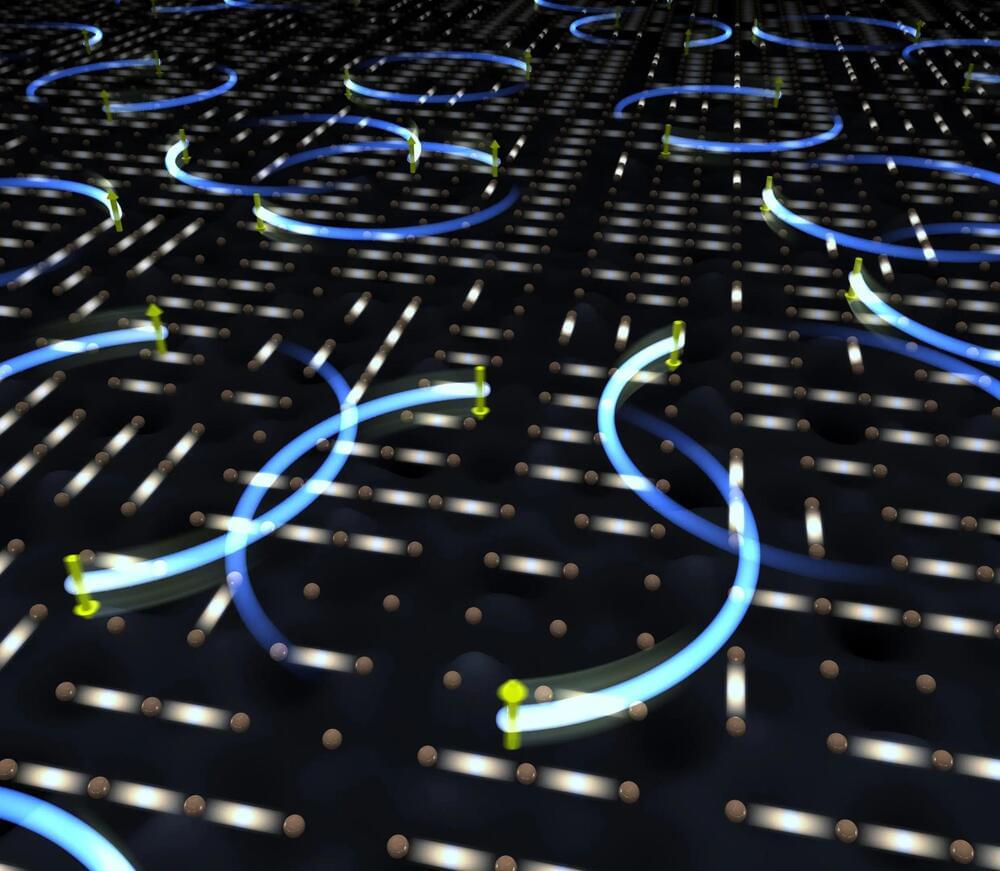

A programmable surface plasmonic neural network to detect and process microwaves

Posted by Saúl Morales Rodriguéz in categories: information science, robotics/AI

AI tools based on artificial neural networks (ANNs) are being introduced in a growing number of settings, helping humans to tackle many problems faster and more efficiently. While most of these algorithms run on conventional digital devices and computers, electronic engineers have been exploring the potential of running them on alternative platforms, such as diffractive optical devices.

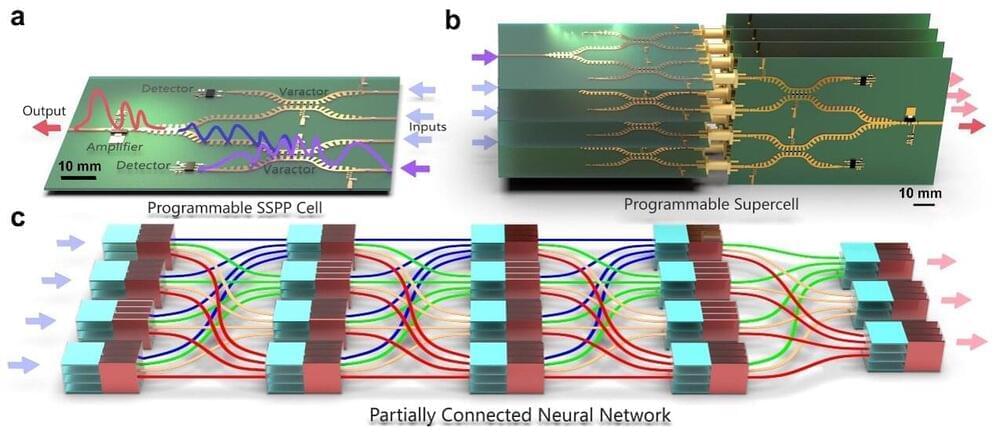

A research team led by Prof. Tie Jun Cui at Southeast University in China has recently developed a new programmable neural network based on a so-called spoof surface plasmon polariton (SSPP), which is a surface electromagnetic wave that propagates along planar interfaces. This newly proposed surface plasmonic neural network (SPNN) architecture, introduced in a paper in Nature Electronics, can detect and process microwaves, which could be useful for wireless communication and other technological applications.

“In digital hardware research for the implementation of artificial neural networks, optical neural networks (ONNs) and diffractive deep neural networks recently emerged as promising solutions,” Qian Ma, one of the researchers who carried out the study, told Tech Xplore. “Previous research focusing on optical neural networks showed that simultaneous high-level programmability and nonlinear computing can be difficult to achieve. Therefore, these ONN devices usually have been limited to specific tasks without programmability, or only applied for simple recognition tasks (i.e., linear problems).”
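Why does the lack of nonlinear computing restrict a network to "linear problems"? The generic sketch below illustrates that point. It is not a model of the SPNN or of any optical device from the paper, and the weights and function names are hypothetical. Two stacked layers with no activation between them collapse into a single affine map, so they cannot reproduce XOR. Inserting one nonlinearity (here a ReLU) between the same layers is enough to solve it.

```python
# Minimal, hypothetical sketch (not the SPNN): why a network built only from
# linear layers is limited to linearly separable ("linear") problems.

def linear_layer(weights, bias, x):
    """Affine map y = W x + b on plain Python lists."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def relu(v):
    """Elementwise nonlinearity max(0, v)."""
    return [max(0.0, vi) for vi in v]

# Hand-picked illustrative weights for a tiny two-layer network.
W1, b1 = [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]
W2, b2 = [[1.0, -2.0]], [0.0]

def linear_net(x):
    # Two layers with no activation: they compose into one affine map.
    return linear_layer(W2, b2, linear_layer(W1, b1, x))[0]

def nonlinear_net(x):
    # Same weights, but with a ReLU between the layers.
    return linear_layer(W2, b2, relu(linear_layer(W1, b1, x)))[0]

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
xor = [a ^ b for a, b in inputs]

print("XOR target:   ", xor)
print("linear net:   ", [linear_net(list(x)) for x in inputs])
print("nonlinear net:", [nonlinear_net(list(x)) for x in inputs])

# Any affine f satisfies f(0,0) + f(1,1) == f(0,1) + f(1,0).
# XOR would need 0 + 0 on the left and 1 + 1 on the right, so no choice
# of weights lets a purely linear stack compute it.
f = [linear_net(list(x)) for x in inputs]
print("affine identity holds:", f[0] + f[3] == f[1] + f[2])
```

The identity check in the last lines holds for every possible choice of weights in `linear_net`, not only the ones shown. That is the underlying reason a device without a nonlinear stage is confined to linear tasks, however many layers it stacks.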