Jan 23, 2024

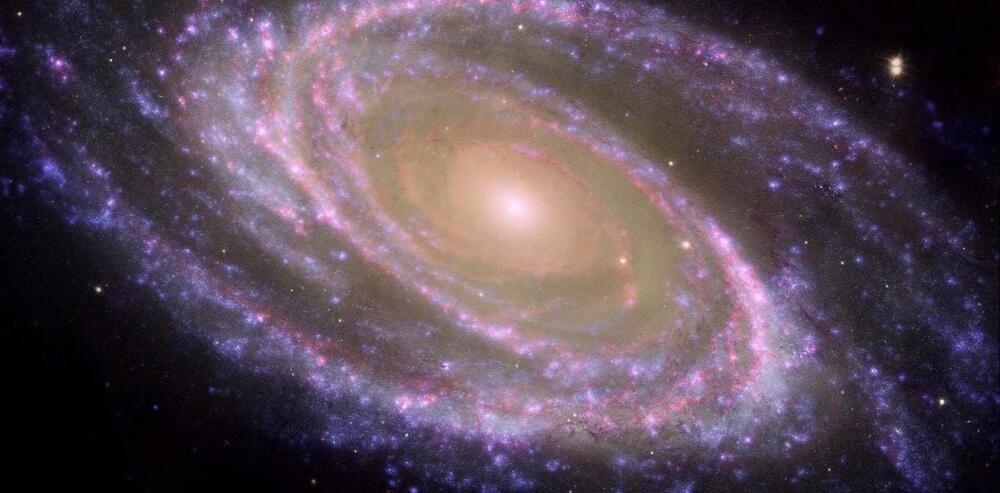

Astrophysicists offer theoretical proof of traversable wormholes in the expanding universe

Posted by Dan Breeden in categories: cosmology, evolution, information science, physics

At certain stages of its evolution, the expansion of the universe is well described by the Friedmann model. The model was derived from general relativity a hundred years ago, yet it is still considered one of the most important and relevant cosmological models.

RUDN University astrophysicists have now proven that traversable wormholes can theoretically exist in the Friedmann universe. The research is published in the journal Universe.

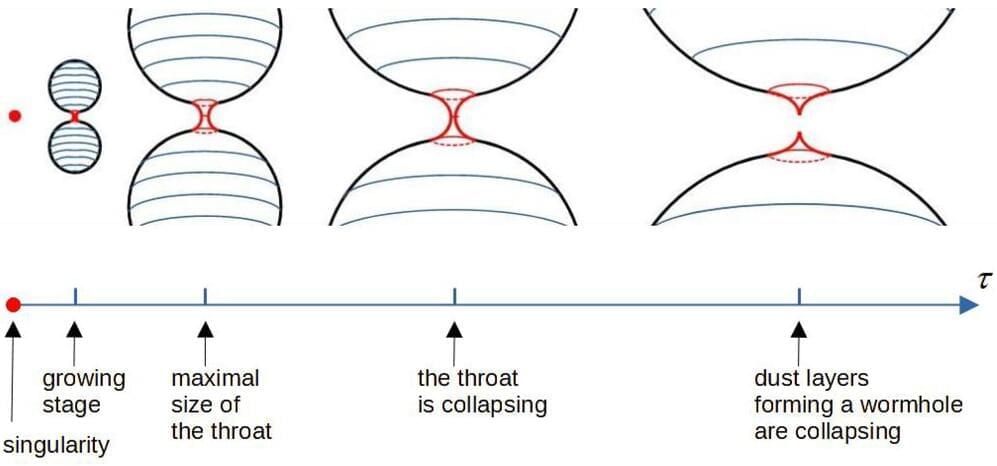

“A wormhole is a type of highly curved geometry. It resembles a tunnel either between distant regions of the same universe or between different universes. Such structures were first discussed in the framework of solutions to the gravitational field equations a hundred years ago. But the wormholes considered then turned out to be non-traversable even for photons; they could not move from one ‘end of the tunnel’ to the other, not to mention going back,” said Kirill Bronnikov, a doctor of physical and mathematical sciences and professor at RUDN University.