Archive for the ‘information science’ category: Page 299

Mar 29, 2016

Neuromorphic supercomputer has 16 million neurons

Posted by Klaus Baldauf in categories: information science, neuroscience, robotics/AI, supercomputing

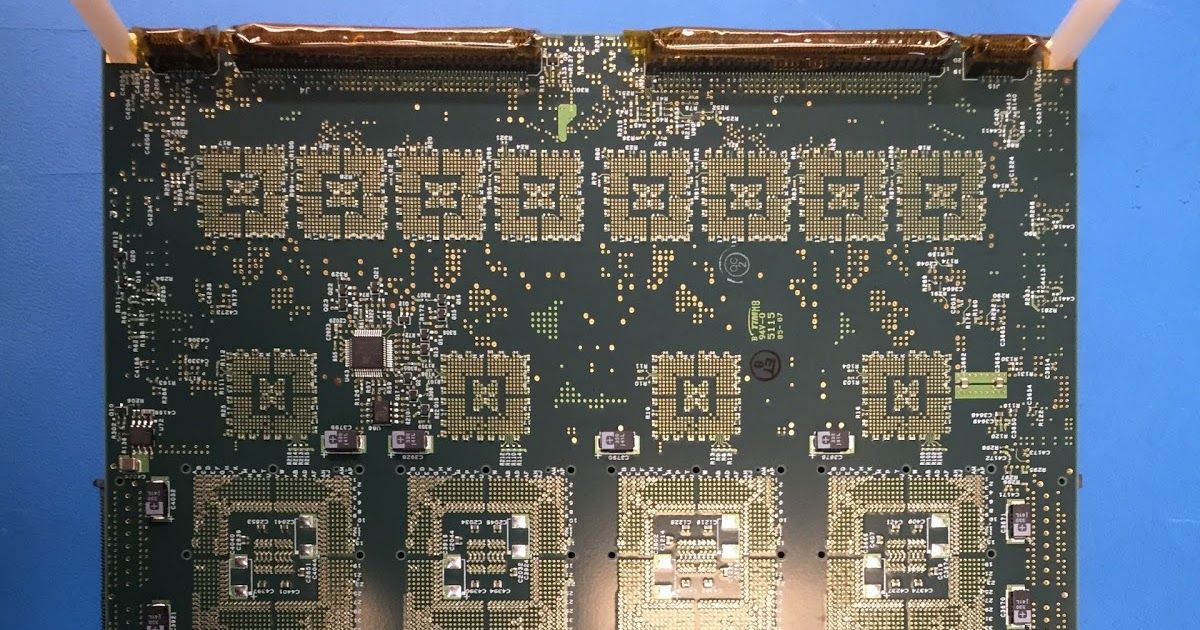

Today, Lawrence Livermore National Lab (LLNL) and IBM announced the development of a new Scale-up Synaptic Supercomputer (NS16e) that tightly integrates 16 TrueNorth chips in a 4×4 array to deliver 16 million neurons and 4 billion synapses (256 million per chip). LLNL will also receive an end-to-end software ecosystem that consists of a simulator; a programming language; an integrated programming environment; a library of algorithms as well as applications; firmware; tools for composing neural networks for deep learning; a teaching curriculum; and cloud enablement.

The $1 million computer has 16 IBM microprocessors designed to mimic the way the brain works.

IBM says it will be five to seven years before TrueNorth sees widespread commercial use, but the Lawrence Livermore test is a big step in that direction.


Mar 28, 2016

DARPA Announces Next Grand Challenge — Spectrum Collaboration Challenge

Posted by Karen Hurst in categories: information science, internet, military, mobile phones, robotics/AI

DARPA’s new “Spectrum Collaboration Challenge” offers a $2 million top prize for a machine learning approach to dynamically sharing the RF spectrum.

WASHINGTON, March 28, 2016 /PRNewswire-iReach/ - On March 23rd, 2016, DARPA announced its next Grand Challenge at the International Wireless Conference Expo in Las Vegas, Nevada. Program Manager Paul Tilghman of DARPA’s Microsystems Technology Office (MTO) made the announcement to industry leaders following the conference’s Dynamic Spectrum Sharing Summit. The challenge will motivate a machine learning approach to dynamically sharing the RF spectrum and has been named the “Spectrum Collaboration Challenge.” A top prize of $2 million has been announced.

While mostly transparent to the typical cell phone or Wi-Fi user, the problem of spectrum congestion has been a long-standing issue for both the commercial sector and the Department of Defense. The appetite for wireless connectivity has grown so quickly over the last 30 years that the RF community has coined the term “spectrum scarcity.” RF bandwidth, the number of frequencies available to communicate information over, is a relatively fixed resource, and advanced communication systems like LTE and military communications systems consume a lot of it. As spectrum planners prepare for the next big wave of connected devices, dubbed the Internet of Things, they wonder where they will find the spectrum bandwidth they need to support these billions of new devices. Equally challenging is the military’s desire to connect every soldier on the battlefield while using these very same frequencies.


Mar 26, 2016

Space Innovation Congress

Posted by Karen Hurst in categories: biotech/medical, food, government, information science, satellites

The Space Innovation Congress is a space technology conference and exhibition taking place in London on 7–8 April 2016. It is set to showcase the most cutting-edge technologies and uses of space technology, with insight from over 50 speakers sharing their industry knowledge and real-life experiences.

This year’s Space Innovation Congress will be highlighting the most innovative advancements in Space technology and will look at how these are being applied to many industry verticals from farming to banking, and the practical case studies that are coming out of these projects.

The program includes use cases and dedicated tracks covering the entire space exploration and Earth observation ecosystems: Satellites, Big data, Crop monitoring, Space debris, Maritime surveillance, Space weather and its impact on banking systems, Biomedical, Commercial space collaboration and Telecoms.

Mar 24, 2016

Quantum computing breakthrough paves way for ultra-powerful machines

Posted by Andreas Matt in categories: computing, information science, quantum physics

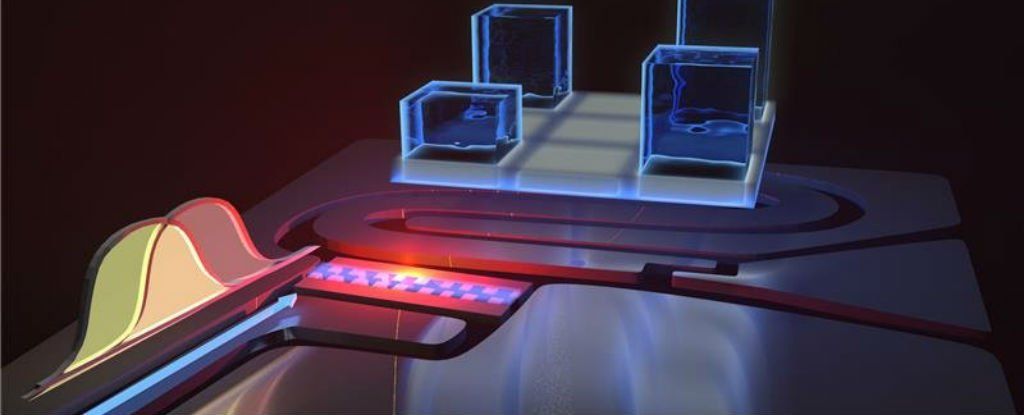

A crucial hurdle in the development of ultra-powerful quantum computers has been overcome through the development of the world’s first programmable system that can be scaled.

Researchers at the University of Maryland College Park built a quantum computer module that can be linked to other modules to perform simultaneous quantum algorithms.

“Quantum computers can solve certain problems more efficiently than any possible conventional computer,” states a paper published this week that details the researchers’ findings. “Small quantum algorithms have been demonstrated in multiple quantum computing platforms, many specifically tailored to hardware to implement a particular algorithm or execute a limited number of computational paths.”


Mar 21, 2016

Treating disease at stage zero

Posted by Klaus Baldauf in categories: bioengineering, biotech/medical, health, information science, nanotechnology

It sounds really obvious, but hospitals aren’t for healthy people. The world’s entire health system is really there to react once people get ill. If doctors are able to catch an illness at stage one that’s great, but if it reaches stage three or four there’s often not that much that can be done. So what if we could treat patients at stage zero and predict the likelihood of contracting diseases? We could then get treatment to people who need it much earlier and take preventative steps to avoid illness altogether.

Currently, when we think of monitoring in healthcare we’re usually referring to monitoring patients’ reactions to drugs or treatments, but this is changing. No amateur runner’s uniform is complete these days without a Fitbit or some kind of analytics tool to monitor progress, so the idea of monitoring the healthy is becoming ingrained in the public’s consciousness. But Fitbits only scrape the surface of what we can do. What if the data from fitness trackers could be combined with medical records, census data and the details of supermarket loyalty cards to predict the likelihood of contracting a particular disease?

With big data we can move from reacting to predicting, but how do we move beyond just making predictions; how do we prevent disease from occurring altogether? Up until now all of our monitoring technology has been located outside of the body, but nano-sized entities made of DNA could one day patrol the body, only acting when they come into contact with specific cells – cancer cells, for example. The technology that would turn tiny machines – roughly the size of a virus – into molecular delivery trucks that transport medication is already being worked on by bioengineers. If this kind of technology can be used to treat cancer, without needing to release toxic agents into the body, can the same technology be inserted into a healthy person and lie in wait for the opportunity to fight disease on its host’s behalf?

Mar 18, 2016

New quantum computer device takes advantage of a loophole in causality

Posted by Shailesh Prasad in categories: computing, information science, mobile phones, quantum physics

Researchers in Finland have figured out a way to reliably make quantum computers — technology that’s tipped to revolutionise computing in the coming years — even more powerful. And all they had to do was throw common sense out the window.

You’re almost certainly reading this article on a classical computer — which includes all phones, laptops, and tablets — meaning that your computer can only ever do one thing at a time. It reads one bit, then the next bit, then the next bit, and so on. The reading is lightning fast and combines millions or billions or trillions of bits to give you what you want, but the bits are always read and used in order.

So if your computer searches for the solution to a problem, it tries one answer (a particular batch of ones and zeros), checks how far the result is from the goal, tries another answer (a different batch), and repeats. For complicated problems, that process can take an incredibly long time. Sometimes, that’s good. Very clever multiplication secures your bank account, and faster or more efficient equation-solvers put that in jeopardy.
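The try-check-repeat loop described above can be sketched in a few lines of Python. This is only an illustration of sequential classical search, not anything from the research being reported; the names `brute_force_search`, `distance_to_target`, and the 4-bit `TARGET` are hypothetical and chosen purely for the example.

```python
from itertools import product

def brute_force_search(score, n_bits):
    """Try every n-bit candidate one at a time and keep the best one seen.

    `score` returns how far a candidate is from the goal (0 means solved).
    Returns the best candidate and the number of candidates tried.
    """
    best, best_score = None, float("inf")
    tried = 0
    for candidate in product((0, 1), repeat=n_bits):  # 0000, 0001, 0010, ...
        tried += 1
        s = score(candidate)
        if s < best_score:
            best, best_score = candidate, s
        if s == 0:  # goal reached, stop searching
            break
    return best, tried

# Hypothetical goal: a specific batch of ones and zeros.
TARGET = (1, 0, 1, 1)

def distance_to_target(candidate):
    """Count the positions where the candidate differs from the goal."""
    return sum(a != b for a, b in zip(candidate, TARGET))

best, tried = brute_force_search(distance_to_target, len(TARGET))
print(best, tried)
```

In the worst case this loop checks all 2^n candidates for an n-bit answer, which is why the process becomes impractically slow as the problem grows.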


Mar 17, 2016

Rapid Superconducting Memory Cell Control System Developed

Posted by Karen Hurst in categories: computing, information science, materials, quantum physics

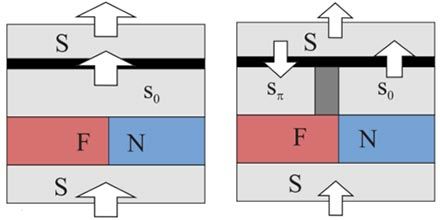

“With the operational function that we have proposed in these memory cells, there will be no need for time-consuming magnetization and demagnetization processes. This means that read and write operations will take only a few hundred picoseconds, depending on the materials and the geometry of the particular system, while conventional methods take hundreds or thousands of times longer than this,” said the study author Alexander Golubov, the head of Moscow Institute of Physics and Technology (MIPT)’s Laboratory of Quantum Topological Phenomena in Superconducting Systems.

Golubov and colleagues at Moscow State University have proposed creating basic memory cells based on quantum effects in superconductor “sandwiches.” The tunneling effect in such structures was predicted in the 1960s by the British physicist Brian Josephson. The electrons in these “sandwiches,” called “Josephson junctions,” are able to tunnel from one layer of a superconductor to another, passing through the dielectric like balls passing through a perforated wall.

Today, Josephson junctions are used both in quantum devices and conventional devices. For example, superconducting qubits are used to build the D-Wave quantum system, which is capable of finding the minima of complex functions using the quantum annealing algorithm. There are also ultra-fast analogue-to-digital converters, devices to detect consecutive events, and other systems that do not require fast access to large amounts of memory. There have also been attempts to use the Josephson effect to create ordinary processors. An experimental processor of this type was created in Japan in the late 1980s. In 2014, the research agency IARPA resumed its attempts to create a prototype of a superconducting computer.


Mar 17, 2016

We should be more afraid of computers than we are – video

Posted by Scott Davis in categories: computing, food, information science, robotics/AI

Specifically, artificially intelligent computers…

As sophisticated algorithms complete tasks we once thought impossible, computers are starting to look like a real threat to humanity. Whether they decide to pulp us into human meat paste, or simply make our work completely unnecessary, argues technology reporter Alex Hern, we should be afraid of computers.

Mar 16, 2016

Using machine learning to rationally design future electronics materials

Posted by Sean Brazell in categories: computing, information science, materials, particle physics, robotics/AI, singularity, solar power, sustainability

Even if we don’t create a true AI for a thousand years, these algorithms, paired with our exponentially increasing computing power, could have much the same effect on our civilization as the more traditional, AI-centric type of Singularity. Very, very soon.

Replacing inefficient experimentation, UConn researchers have used machine learning to systematically scan millions of theoretical compounds for qualities that would make better materials for solar cells, fibers, and computer chips.
