Oct 11, 2016

Caverlee, Hu receive DARPA grant to fill in the gaps of spatial-temporal datasets

Posted by Karen Hurst in category: information science

The Defense Sciences Office at the Defense Advanced Research Projects Agency (DARPA) has awarded Dr. James Caverlee and Dr. Xia “Ben” Hu a Next Generation Social Science (NGS2) grant to complete their collaborative research project, HELIOS, named after the Greek god with the ability to see the invisible.

Caverlee, an associate professor, and Hu, an assistant professor, are both in the Department of Computer Science and Engineering at Texas A&M University and are members of the Texas A&M Engineering Experiment Station's (TEES) Center for Digital Libraries.

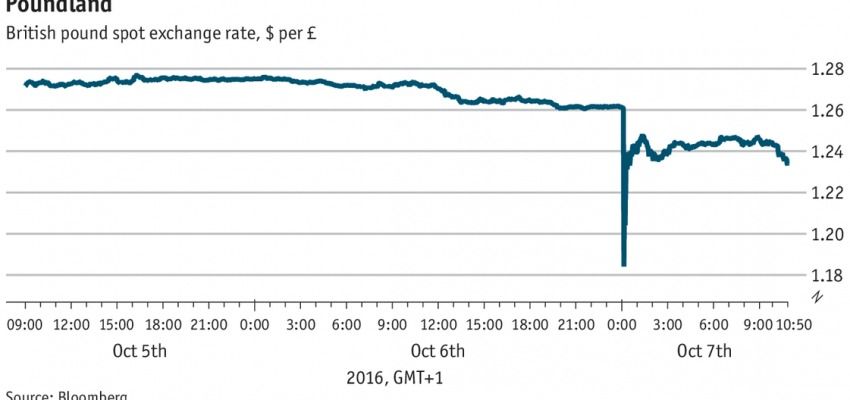

The HELIOS project aims to create new computational methods and algorithms to fill in the gaps in rapidly evolving spatial-temporal datasets, which are datasets that record measurements across both space and time. Such datasets are often missing information, which prevents accurate assessments of time and location.