Apr 10, 2017

Billionaire investor to accelerate research in artificial intelligence in healthcare

Posted by Steve Hill in categories: biotech/medical, business, information science, life extension, robotics/AI

Interest in rejuvenation biotechnology is growing rapidly and attracting investors.

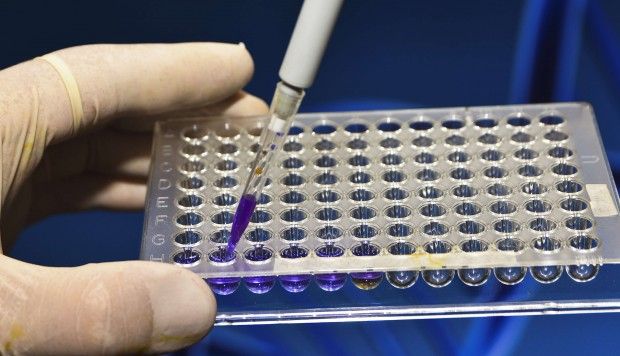

- Jim Mellon has made an investment in Insilico Medicine to enable the company to validate the many molecules discovered using deep learning and launch multi-modal biomarkers of human aging

Monday, April 10, 2017, Baltimore, MD - Insilico Medicine, Inc., a big data analytics company applying deep learning techniques to drug discovery, biomarker development, and aging research, today announced that it has closed an investment from the billionaire biotechnology investor Jim Mellon. Proceeds will be used to perform pre-clinical validation of multiple lead molecules developed using Insilico Medicine’s drug discovery pipelines and to advance research in deep-learned biomarkers of aging and disease.