Feb 23, 2020

RAFT 2035: Roadmap to Abundance, Flourishing, and Transcendence, by 2035 by David Wood

Posted by Mike Diverde in categories: biotech/medical, drones, information science, nanotechnology, robotics/AI

I’ve been reading an excellent book by David Wood, entitled RAFT 2035: Roadmap to Abundance, Flourishing, and Transcendence, by 2035, which was recommended by my pal Steele Hawes. I’ve come to a particularly strong segment of the book, which I will quote now.

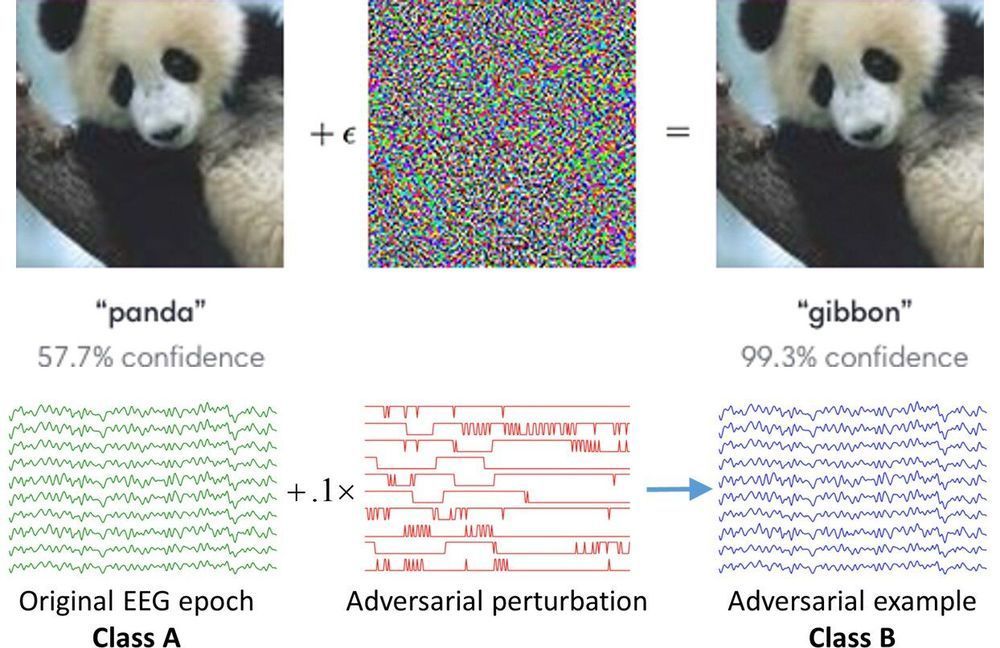

“One particular challenge that international trustable monitoring needs to address is the risk of ever more powerful weapon systems being placed under autonomous control by AI systems. New weapons systems, such as swarms of miniature drones, increasingly change their configuration at speeds faster than human reactions can follow. This will lead to increased pressures to transfer control of these systems, at critical moments, from human overseers to AI algorithms. Each individual step along the journey from total human oversight to minimal human oversight might be justified, on grounds of a balance of risk and reward. However, that series of individual decisions adds up to an overall change that is highly dangerous, given the potential for unforeseen defects or design flaws in the AI algorithms being used.”

The fifteen years from 2020 to 2035 could be the most turbulent of human history. Revolutions are gathering pace in four overlapping fields of technology: nanotech, biotech, infotech, and cognotech, or NBIC for short. In combination, these NBIC revolutions offer enormous new possibilities: enormous opportunities and enormous risks.