Jan 25, 2022

Studying the Big Bang with artificial intelligence

Posted by Genevieve Klien in categories: cosmology, information science, mathematics, particle physics, quantum physics, robotics/AI

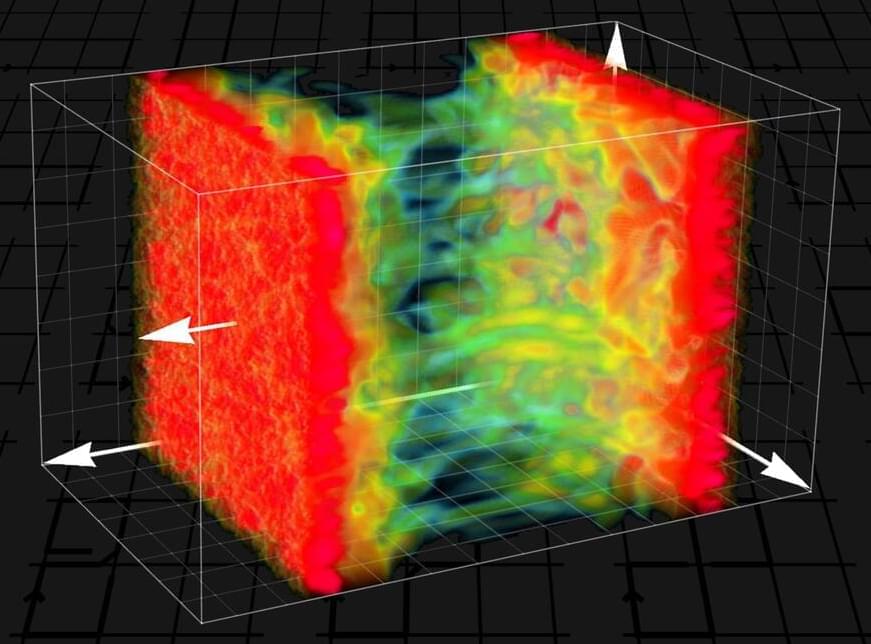

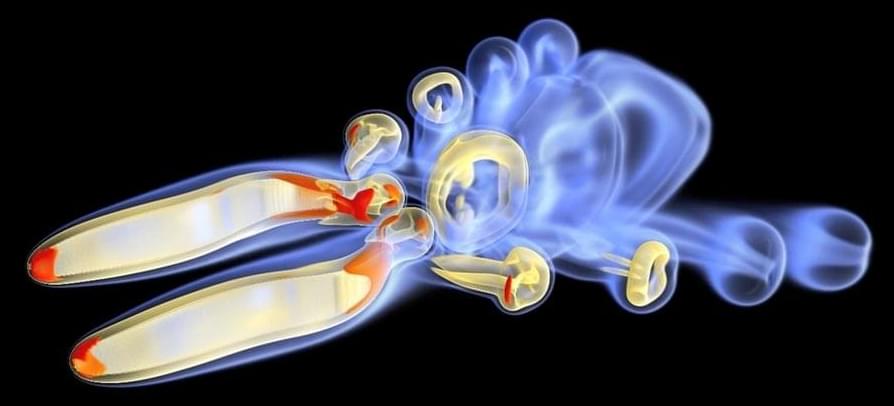

It could hardly be more complicated: tiny particles whir around wildly with extremely high energy, countless interactions occur in the tangled mess of quantum particles, and the result is a state of matter known as “quark-gluon plasma”. Immediately after the Big Bang, the entire universe was in this state; today it is produced in high-energy collisions of atomic nuclei, for example at CERN.

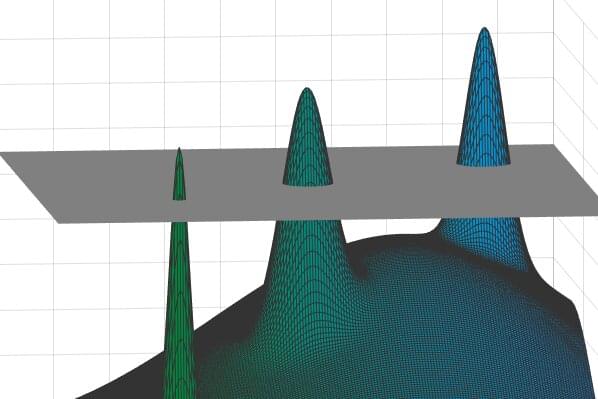

Such processes can only be studied using high-performance computers and highly complex computer simulations whose results are difficult to evaluate. Using artificial intelligence or machine learning for this purpose therefore seems like an obvious idea. Ordinary machine-learning algorithms, however, are not suitable for this task: the mathematical properties of particle physics require neural networks with a very special structure. At TU Wien (Vienna), it has now been shown how neural networks can be successfully used for these challenging tasks in particle physics.