Here’s an interesting thought experiment. Pretend that you took all words at their literal interpretation, all of the time, wherever you went, and acted upon those words strictly and accordingly.

For example, suppose you are driving in your car and perchance see a billboard touting the message that you should eat at Joe’s Pizzeria, accessible at the next exit up ahead. The normal course of events would be that you would consult your stomach to ascertain whether you are hungry. If you were hungry, the next question is whether you want pizza. Upon deciding that maybe you do want pizza, the next consideration would be whether to take the upcoming exit and eat at Joe’s Pizzeria, since you might have some other pizza eatery in mind instead.

But none of those sensible and reasonable ideas rattle around in your noggin. We have agreed that you are going to take everything in a meticulously literal way. By gosh, the billboard instructed you to go eat at Joe’s Pizzeria, so that’s what you are going to do. Come heck or high water, you will take the next exit, drive straight to that pizzeria, and order yourself a juicy hot pizza.

This might work out okay, and you’ll be happy that you obediently abided by the wording of the billboard. Perhaps, though, this side trip has made you late for work. Your boss won’t especially appreciate that you opted to be tardy because you just had to get a slice of pizza. Ouch, your boss fires you the moment you proffer such a lame excuse.

Full Story:

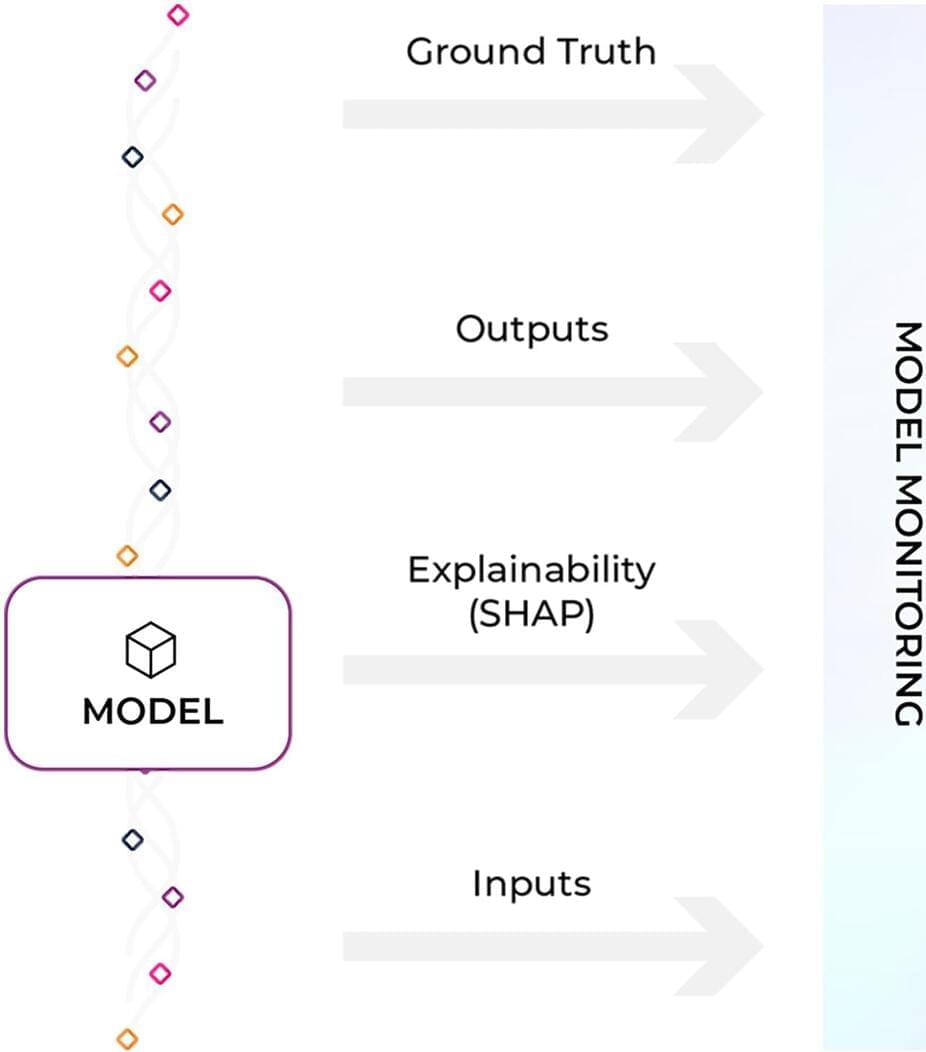

Existing AI and Machine Learning is stuck at literal interpretation and lacks any common sense, which raises great concerns, especially when it comes to the advent of self-driving cars.

Continue reading “Strict Literal Interpretation Is A Hardheaded Drawback Of Machine Learning And Likewise Bad For AI Self-Driving Cars” »