Oct 24, 2023

Meet Rhysida, a New Ransomware Strain That Deletes Itself

Posted by Shubham Ghosh Roy in category: cybercrime/malcode

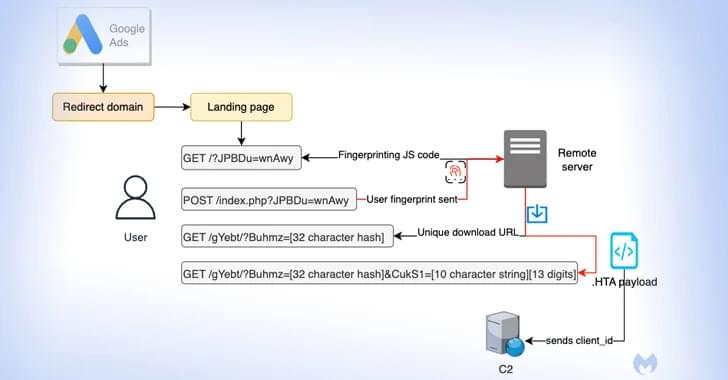

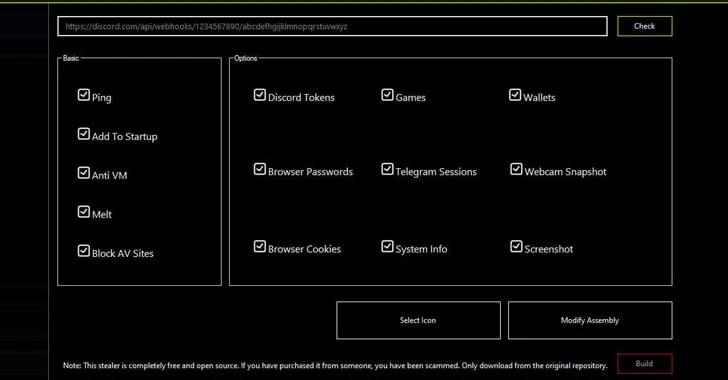

Operating since May 2023, an emerging ransomware strain called Rhysida has been deployed alongside a new stealer malware called Lumar, a potent one-two punch against users of Brazil’s popular PIX payment system.

Researchers from Kaspersky reported that Rhysida is functioning as a ransomware-as-a-service (RaaS) operation with a demonstrated ability to evolve quickly.

“It stands out for its unique self-deletion mechanism and compatibility with pre-Windows 10 versions of Microsoft. Written in C++ and compiled with MinGW and shared libraries, Rhysida showcases sophistication in its design,” Kaspersky said in its findings about the group. “While relatively new, Rhysida faced initial configuration challenges with its onion server, revealing a group’s rapid adaptation and learning curve.”