Oct 15, 2016

Google’s AI can now learn from its own memory independently

Posted by Shane Hinshaw in categories: computing, robotics/AI

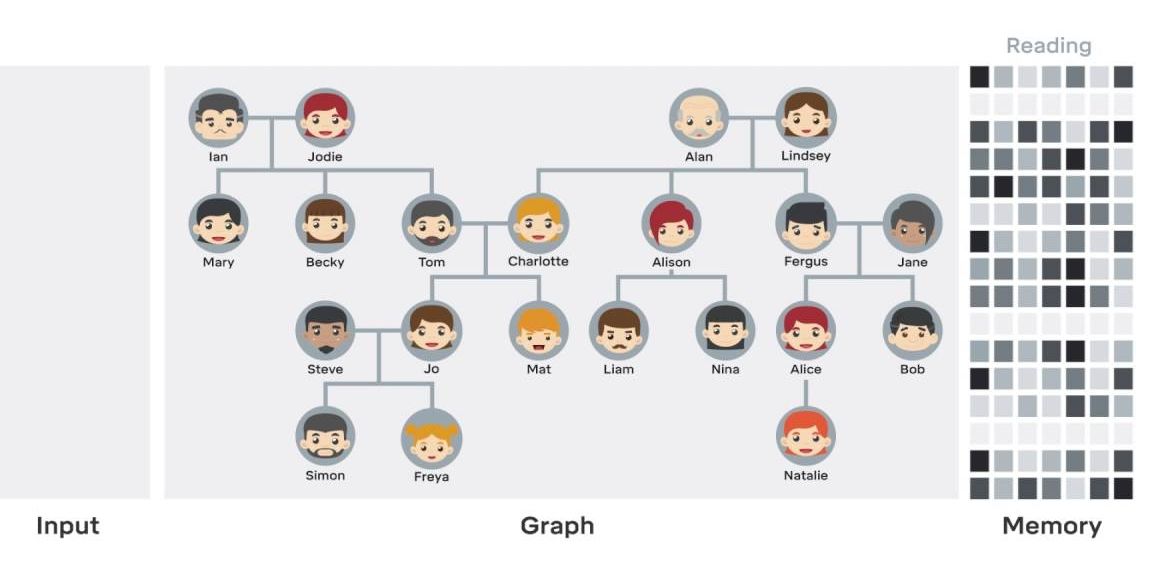

The DeepMind artificial intelligence (AI) being developed by Google’s parent company, Alphabet, can now intelligently build on what’s already inside its memory, the system’s programmers have announced.

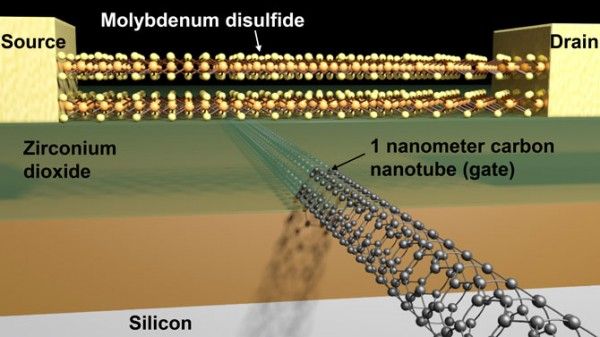

Their new hybrid system – called a Differentiable Neural Computer (DNC) – pairs a neural network with the vast data storage of conventional computers, and the AI is smart enough to navigate and learn from this external data bank.
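To give a rough feel for how a network might "navigate" an external memory, here is a minimal sketch of a content-based read: a query vector is compared against every memory row, and the rows are blended according to how closely they match. This is an illustration only, not DeepMind's implementation. The function names, the `sharpness` parameter, and the toy memory are all assumptions made for this example, and a real DNC combines this kind of lookup with further mechanisms for writing to and allocating memory.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def softmax(xs):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def content_read(memory, key, sharpness=10.0):
    """Hypothetical helper: soft lookup of `key` in `memory` (a list of rows).

    Returns the attention weights over rows and the weighted blend of rows.
    `sharpness` is an assumed parameter: higher values focus on the best match.
    """
    weights = softmax([sharpness * cosine(row, key) for row in memory])
    width = len(memory[0])
    read = [sum(w * row[j] for w, row in zip(weights, memory)) for j in range(width)]
    return weights, read

# Toy memory with three stored vectors; the query is closest to the first row.
memory = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
weights, read = content_read(memory, key=[0.9, 0.1, 0.0])
```

Because every step is a smooth function of the query and the memory contents, a lookup like this can be trained with gradient descent alongside the network, which is what the word "differentiable" in the system's name refers to.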
