Sep 1, 2023

Google Launches Tool That Detects AI Images In Effort To Curb Deepfakes

Posted by Gemechu Taye in categories: business, cybercrime/malcode, robotics/AI

Fake images and misinformation are proliferating in the age of AI. As early as 2019, a Pew Research Center study found that 61% of Americans said it is too much to ask of the average American to be able to recognize altered videos and images. And that was before generative AI tools became widely available to the public.

Adobe shared statistics in August 2023 showing that the number of AI-generated images created with Adobe Firefly had reached one billion, only three months after the tool launched in March 2023.

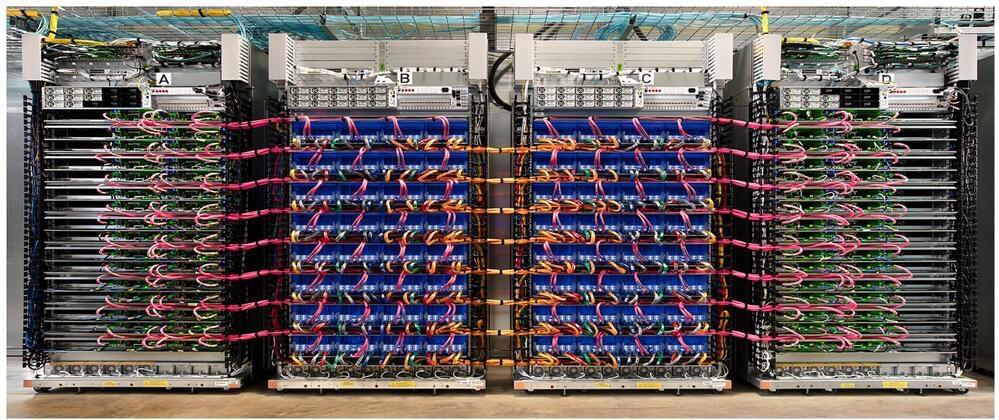

In response to the growing use of AI-generated images, Google DeepMind announced a beta version of SynthID. The tool watermarks and identifies AI-generated images by embedding a digital watermark directly into an image's pixels. The watermark is imperceptible to the human eye but can be detected by the tool to identify the image as AI-generated.
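To illustrate the general idea of a pixel-level watermark that people can't see but software can detect, here is a toy sketch using least-significant-bit (LSB) embedding. This is an assumption-labeled stand-in, not SynthID's method, which the announcement does not describe in detail. The function names and the 8-bit signature are hypothetical, and an "image" is simplified to a flat list of 8-bit grayscale values.

```python
# Toy least-significant-bit (LSB) watermark. NOT how SynthID works;
# a hypothetical illustration of an invisible, machine-detectable mark.
# An "image" here is a flat list of 8-bit grayscale pixel values (0-255).

def embed_watermark(pixels: list[int], bits: list[int]) -> list[int]:
    """Hide one watermark bit in the lowest bit of each of the first len(bits) pixels."""
    if len(bits) > len(pixels):
        raise ValueError("watermark is longer than the image")
    if any(b not in (0, 1) for b in bits):
        raise ValueError("watermark bits must be 0 or 1")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the watermark bit.
        # Each pixel changes by at most 1 out of 255, far below what the eye notices.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def extract_watermark(pixels: list[int], n_bits: int) -> list[int]:
    """Read back the lowest bit of the first n_bits pixels."""
    return [p & 1 for p in pixels[:n_bits]]


def is_watermarked(pixels: list[int], signature: list[int]) -> bool:
    """Detection step: does the hidden bit pattern match the expected signature?"""
    return extract_watermark(pixels, len(signature)) == signature


if __name__ == "__main__":
    SIGNATURE = [1, 0, 1, 1, 0, 0, 1, 0]  # hypothetical 8-bit tag
    image = [200, 13, 57, 255, 0, 128, 99, 34, 77, 150]
    marked = embed_watermark(image, SIGNATURE)
    print(is_watermarked(marked, SIGNATURE))  # True
    print(is_watermarked(image, SIGNATURE))   # False
    print(max(abs(a - b) for a, b in zip(image, marked)))  # 1
```

A naive LSB mark like this is fragile: re-saving as JPEG, resizing, or applying a filter will usually erase it. Systems meant for real-world use need watermarks that survive such edits, which is why this sketch should be read only as an illustration of the "invisible but detectable" idea.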
